Why This Shift Matters Now

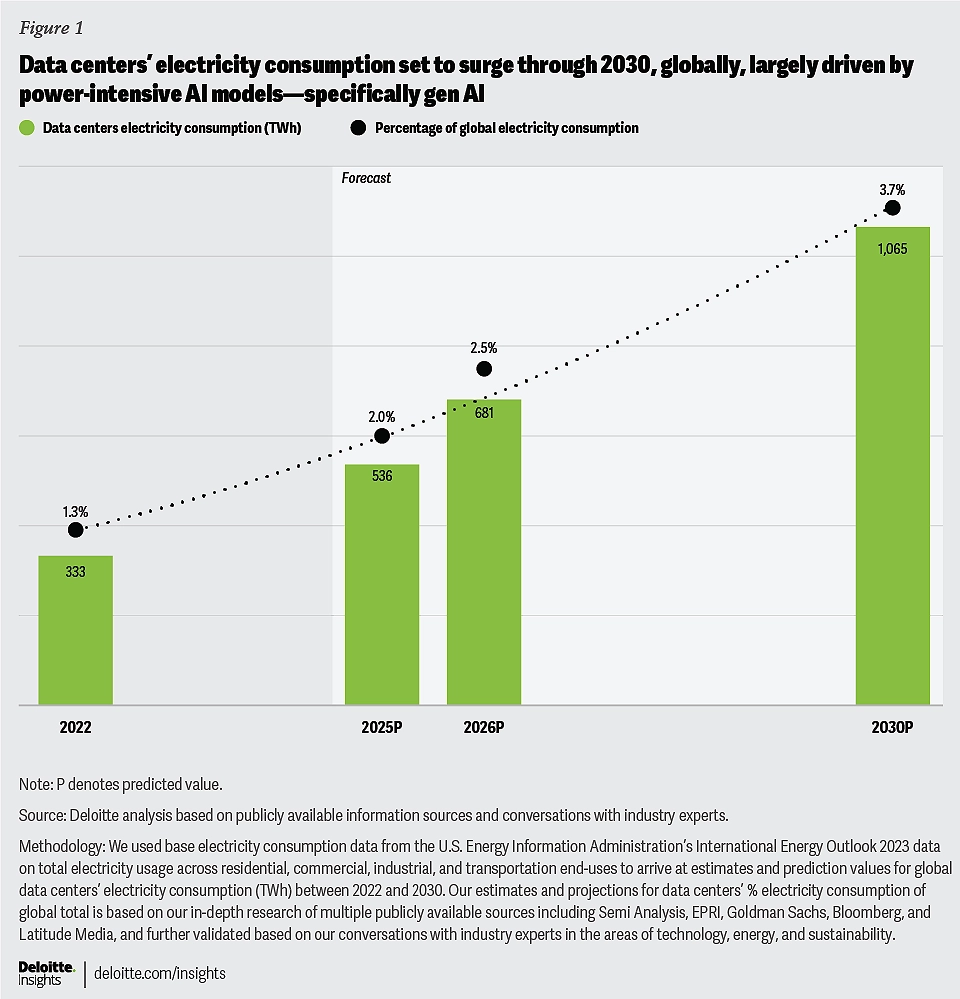

The International Energy Agency estimates global spending on data centers will reach about $580 billion this year, eclipsing roughly $540 billion for new oil supply projects. At the same time, AI data-center electricity use is projected to grow fivefold by 2030, effectively doubling today’s total data-center power draw. Capital is pivoting to digital infrastructure, but the bottleneck isn’t dollars: it’s power, location, and time-to-interconnect. For AI and infrastructure leaders, this changes how you plan capacity, procure energy, and manage risk over the next 24–36 months.

Key Takeaways

- Investment crossover: Data centers (~$580B) are now outpacing new oil supply (~$540B), signaling durable investor appetite for AI and cloud infrastructure.

- Power reality: AI-related data-center electricity use could grow 5x by 2030, pushing total data-center load to roughly 2x today’s level; interconnection lead times are often 24–60 months.

- Geographic pressure: Most growth concentrates in the US, Europe, and China, where grid constraints, permitting, and public acceptance are tightest.

- Energy mix: Renewables are expected to supply most incremental data-center electricity by around 2035, but near-term builds will still lean on gas, storage, and grid upgrades.

- Governance: Expect tougher efficiency, carbon, and water reporting requirements; procurement and siting decisions will face more scrutiny.

Breaking Down the Announcement

The IEA’s callout is less about hype and more about constraints. The next wave of AI sites is not 5–10 MW at 10 kW/rack; it’s 50–150 MW campuses with dense GPU racks drawing 50–100 kW or more each and power usage effectiveness (PUE) targets near 1.1–1.2. That scale stresses everything: high-voltage transformers (with 12–30 month lead times), switchgear, transmission capacity, and water availability for cooling. In many US and EU markets, data-center interconnection queues stretch for years; even with process reforms, substation buildouts and right-of-way work rarely move faster than civil works allow.

The cost math is sobering. An AI campus drawing a continuous 70 MW consumes ~613 GWh/year (70 MW × 8,760 hours). At $0.09/kWh, that’s ~$55 million in annual energy cost before demand charges or hedging. If power rises to $0.12/kWh, the same load costs ~$74 million, a swing of roughly $18 million, and operating in a capacity-constrained ISO can widen that gap further. That volatility is why developers are pursuing long-term power purchase agreements (PPAs), behind-the-meter renewables, and on-site storage to shave peaks and firm supply.
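
The arithmetic above is easy to rerun for your own load and tariff. Here is a minimal Python sketch, assuming a flat load and a single flat energy price; the function names are illustrative, and demand charges, hedging, and taxes are deliberately left out.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def annual_energy_gwh(load_mw: float) -> float:
    """Annual energy in GWh for a constant load in MW."""
    return load_mw * HOURS_PER_YEAR / 1000.0


def annual_energy_cost_usd(load_mw: float, price_per_kwh: float) -> float:
    """Energy-only cost in USD; excludes demand charges and hedging."""
    kwh_per_year = load_mw * 1000.0 * HOURS_PER_YEAR
    return kwh_per_year * price_per_kwh


if __name__ == "__main__":
    load_mw = 70.0  # the campus size used in the text
    print(f"Energy: {annual_energy_gwh(load_mw):.1f} GWh/year")  # about 613.2
    for price in (0.09, 0.12):
        cost_musd = annual_energy_cost_usd(load_mw, price) / 1e6
        # about 55.2 at $0.09/kWh and 73.6 at $0.12/kWh
        print(f"At ${price:.2f}/kWh: ${cost_musd:.1f}M/year")
```

Real bills depend on load factor and tariff structure, so treat the output as a first-order bound rather than a forecast.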

What This Changes for Operators and Buyers

For hyperscalers, the signal is to double down on brownfield and “grid-adjacent” strategies: repurposing retired thermal plants, clustering near large substations, and integrating battery storage to reduce interconnection delays. For enterprises and AI startups, the takeaway is to treat power as a first-class constraint alongside GPUs and networking. Siting choices increasingly hinge on interconnect feasibility, water risk, and regulatory posture, not just real estate and tax incentives.

Expect a shift from generic capacity to “power-aware compute.” Practical steps include workload scheduling to align inference and non-urgent training with renewable and off-peak windows, tiering models by efficiency (e.g., smaller distilled models for high-QPS inference), and adopting liquid cooling to improve density and reduce fan power. Each move compounds: a modest PUE improvement and smarter scheduling can free multiple megawatts at campus scale.
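
To see how a modest PUE gain turns into megawatts, recall that PUE is total facility power divided by IT power, so a fixed grid allocation supports `grid_mw / pue` of IT load. The sketch below assumes a hypothetical 100 MW allocation and a PUE improvement from 1.3 to 1.2; both figures are illustrative, not from the IEA data.

```python
def it_capacity_mw(grid_mw: float, pue: float) -> float:
    """IT load (MW) supportable under a fixed grid allocation at a given PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return grid_mw / pue


def freed_it_mw(grid_mw: float, pue_before: float, pue_after: float) -> float:
    """Extra IT capacity (MW) unlocked by improving PUE on the same grid feed."""
    return it_capacity_mw(grid_mw, pue_after) - it_capacity_mw(grid_mw, pue_before)


if __name__ == "__main__":
    # Hypothetical campus: 100 MW grid allocation, PUE improved from 1.3 to 1.2.
    gain = freed_it_mw(100.0, pue_before=1.3, pue_after=1.2)
    print(f"Additional IT capacity: {gain:.1f} MW")  # about 6.4 MW
```

Under those assumptions the same interconnect supports about 6 MW more compute, with no new utility paperwork.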

Industry Context and Competitive Angle

Why now? Three forces coincide: GPU supply is loosening, inference traffic is climbing faster than training, and capital markets reward AI capacity with premium multiples. Yet grid physics and permitting don’t scale at the same pace. That gap creates opportunity for operators with energy expertise: colocation providers that can secure power sooner will command higher margins; cloud regions with available capacity will gain share; and chip and system vendors that reduce per-token energy will win TCO battles.

Alternatives have trade-offs. On-prem AI clusters offer control but face the same interconnection and equipment delays—and lack hyperscale buying power for energy. Cloud gives speed but can expose you to variable pricing and region-level capacity constraints. Colocation can bridge the gap if the provider has real, near-term megawatts and a credible energy strategy (firmed renewables, storage, and grid relationships). Small modular reactors are discussed as a future option, but timelines and regulatory paths remain uncertain; treat nuclear as a 2030s hedge, not a 2025 plan.

Risks to Model and Mitigate

- Interconnection delays: 24–60 months is common; prioritize sites with existing transmission capacity or fast-track utility programs.

- Supply chain: Transformers, HV cables, switchgear, and liquid-cooling components remain bottlenecked; pre-order long-lead items.

- Water constraints: Evaporative systems can use ~1.5–2.0 liters per kWh; at that rate a 70 MW site running continuously would use roughly 0.9–1.2 billion liters/year, so plan for dry or hybrid cooling where needed.

- Carbon and compliance: 24/7 carbon-free energy commitments, scope 2 accounting, and EU disclosure rules will shape siting and procurement.

- Stranded-asset risk: Rapid efficiency gains or policy shifts can change the economics; keep campuses modular with flexible power blocks.
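
The water figure in the list above can be sanity-checked with a few lines of Python. This is a rough sketch that assumes the liters-per-kWh rate applies to the site's full continuous load; the function name is illustrative, and actual consumption varies with climate and cooling design.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def annual_water_liters(load_mw: float, liters_per_kwh: float) -> float:
    """Annual evaporative water use for a constant load, in liters."""
    kwh_per_year = load_mw * 1000.0 * HOURS_PER_YEAR
    return kwh_per_year * liters_per_kwh


if __name__ == "__main__":
    for rate in (1.5, 2.0):  # the range quoted in the text
        billions = annual_water_liters(70.0, rate) / 1e9
        # about 0.92 at 1.5 L/kWh and 1.23 at 2.0 L/kWh
        print(f"{rate} L/kWh -> {billions:.2f} billion liters/year")
```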

Operator’s Playbook: What to Do Next

- Lock power first: Make site selection contingent on a binding path to megawatts—substation capacity, transformer delivery schedule, and an interconnect timeline you control.

- De-risk energy costs: Blend physical and virtual PPAs, add storage for peak shaving, and target locations where renewables plus firming can meet 24/7 load by the mid-2030s.

- Engineer for efficiency: Commit to liquid cooling, PUE ≤1.2, and workload scheduling that shifts non-urgent jobs into low-carbon, off-peak windows.

- Harden governance: Stand up cross-functional reviews for siting, water, and carbon; publish measurable targets to preempt regulatory friction and community pushback.
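
As one illustration of the workload-scheduling step, a deferrable job can be placed in the contiguous window with the lowest total forecast carbon intensity. The sketch below is a simplified assumption, not a production scheduler: the hourly forecast values are made up, and the function ignores capacity limits, deadlines, and price.

```python
from typing import Sequence


def best_start_hour(intensity: Sequence[float], duration_h: int) -> int:
    """Return the start index of the contiguous window of `duration_h` hours
    with the lowest total carbon intensity (earliest window wins ties)."""
    if duration_h <= 0 or duration_h > len(intensity):
        raise ValueError("duration must be between 1 and the forecast length")
    window = sum(intensity[:duration_h])
    best_start, best_sum = 0, window
    for start in range(1, len(intensity) - duration_h + 1):
        # Slide the window one hour: add the new hour, drop the oldest.
        window += intensity[start + duration_h - 1] - intensity[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    return best_start


if __name__ == "__main__":
    # Hypothetical hourly forecast in gCO2/kWh; values are illustrative only.
    forecast = [500, 450, 300, 200, 180, 220, 400, 520]
    start = best_start_hour(forecast, duration_h=3)
    print(f"Run the 3-hour job starting at hour {start}")  # hour 3
```

The same sliding-window idea works with an hourly price forecast in place of carbon intensity if cost is the priority.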

Bottom line: Money is no longer the limiting reagent—power and time are. Teams that treat energy as a strategic input, not a utility bill, will capture the upside of the AI buildout while others wait in the queue.
