Why this launch matters

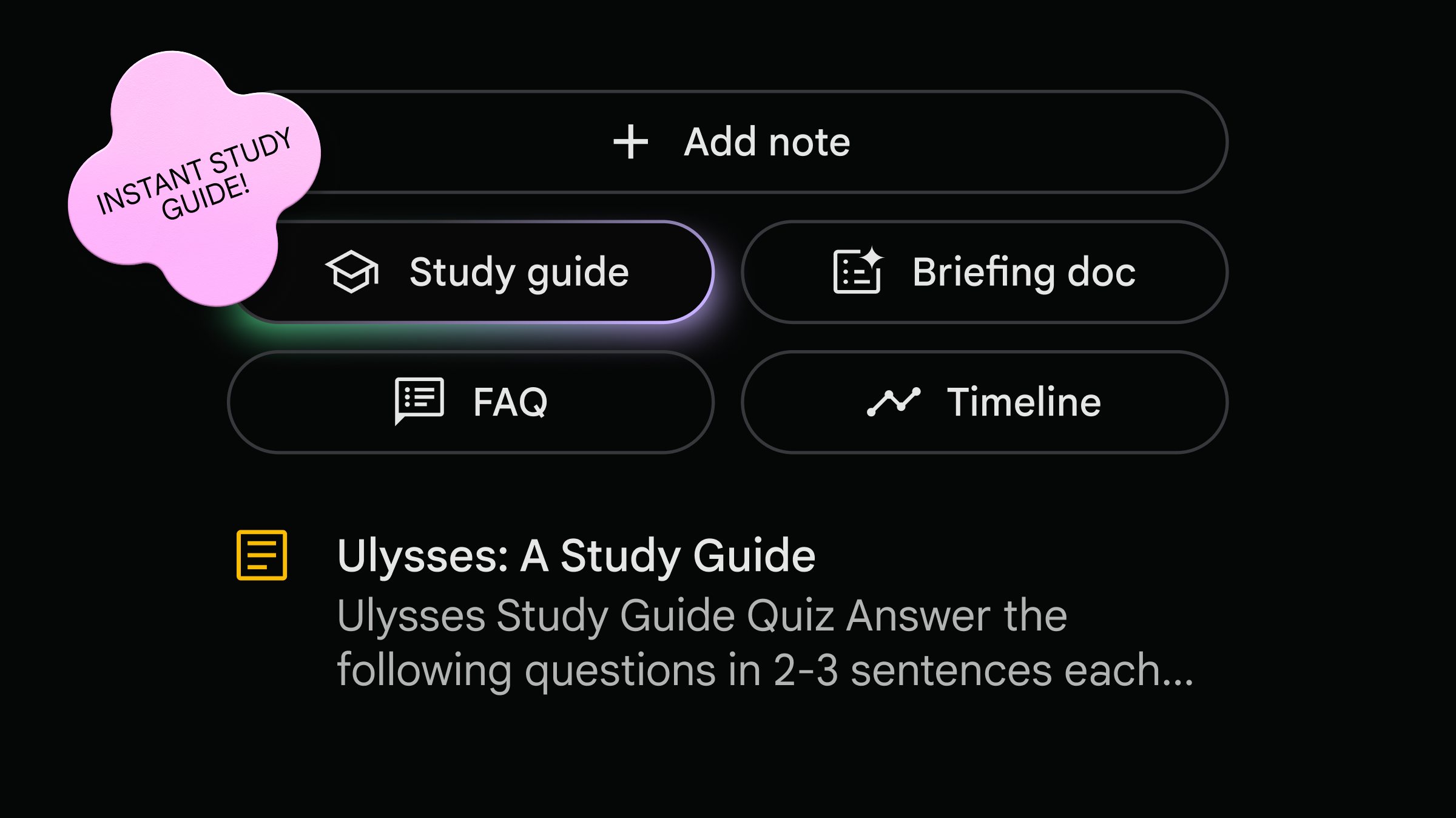

Google has turned NotebookLM from a source‑grounded summarizer into an automated researcher. The new Deep Research mode plans and executes web‑based investigations, synthesizes findings with citations, and even recommends follow‑on sources. Alongside it, Fast Research handles quicker queries, and Google added support for Google Sheets, Drive URLs/PDFs, and Word document uploads, closing key workflow gaps for enterprise users.

For operators, this shifts NotebookLM from “help me summarize these PDFs” to “produce a decision‑ready brief using my documents plus the public web.” If the quality holds, expect hours of analyst work to compress into minutes, and expect research to become a repeatable, auditable workflow rather than a series of ad‑hoc chat sessions.

Key takeaways

- Deep Research automates multi‑step web research and writes source‑cited reports; Fast Research offers quick, lightweight scans.

- Expanded inputs (Sheets, Drive URLs/PDFs, Word) reduce “swivel‑chair” effort and improve cross‑file synthesis inside Google workflows.

- Launch claims: supports 20+ file types and up to ~100 files per notebook; typical 10‑page outputs in a few minutes. Validate with your content.

- Enterprise knobs exist (Workspace integration, audit logging, access controls), but two gaps remain: web‑source quality control and configuration‑driven data exposure.

- API is in beta; no on‑prem option. Compare with Perplexity Pro Research, Microsoft Copilot for M365, and Claude Projects before standardizing.

Breaking down the announcement

Deep Research acts like an analyst: it decomposes a question, browses the web, cross‑checks facts against your uploaded or Drive‑based materials, and returns a structured brief with citations and suggested readings. Fast Research prioritizes speed over depth for simple queries or quick source scans. Both modes keep NotebookLM’s “source‑grounded” posture: answers are meant to be traceable back to documents and URLs.

Input coverage is materially better. In addition to PDFs and Docs, teams can now bring in Google Sheets (useful for KPIs or survey data), Drive URLs and PDFs, and Word files. In practice, this means product managers can blend market notes in Docs, pricing in Sheets, and vendor PDFs, then let Deep Research fill gaps from the web and produce a single, cited deliverable.

Performance and limits will vary, but Google positions Deep Research as capable of producing multi‑page briefs in minutes. Launch materials suggest notebooks can handle on the order of 100 files and large documents, with support for 20+ file types. Expect the system to prioritize higher‑quality sources; Google indicates it filters dubious sites, though the exact heuristics and blocklists aren’t fully disclosed.

For enterprises, the integration with Google Workspace matters: SSO, centralized admin controls, and audit logs provide a governance backbone. Data is processed in Google’s cloud; there’s no on‑prem deployment. An early API is available for automation (e.g., weekly market briefs, literature reviews), but it’s still maturing and quota‑bound.

Context and competitive angle

Google is responding to rapid uptake of research agents like Perplexity’s Pro Research and Pages, along with enterprise‑grounded assistants in Microsoft 365. Compared to Perplexity, NotebookLM’s edge is deep Drive integration and multi‑file synthesis with structured outputs. Compared to Copilot in M365, Google’s web‑first agent feels more flexible for open‑web discovery, but Microsoft’s Graph grounding and SharePoint/OneDrive controls are more mature for some IT environments. Claude Projects and ChatGPT Enterprise handle multi‑file reasoning well, yet lack the native Drive/Sheets hooks many Google‑centric teams want.

What this changes for operators

If you manage research, product strategy, legal analysis, or GTM enablement, Deep Research can convert scattered inputs into repeatable, reviewable briefs. Examples include: regulatory change digests tied to your policy PDFs; competitor dossiers blending your notes with fresh web findings; or legal summaries that cite internal memos plus public rulings. The net effect is shorter cycle time, better traceability, and less context switching between tools.

However, two governance risks are non‑trivial. First, web‑source quality: impressive citations do not guarantee reliability or recency. You’ll need curation policies (approved domains, exclusion lists) and human review for impactful decisions. Second, configuration risk: Drive permissions and notebook sharing can inadvertently expose sensitive data. Ensure least‑privilege defaults, audit logging, and DLP policies are in place before scaling usage.
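A curation policy of this kind can be checked outside the tool. The sketch below is a minimal illustration, not a NotebookLM feature: it assumes a reviewer has copied the cited URLs out of a generated brief, and the domain lists and function names are hypothetical placeholders to replace with your own policy.

```python
from urllib.parse import urlparse

# Hypothetical policy lists for illustration; substitute your organization's own.
APPROVED_DOMAINS = {"sec.gov", "europa.eu"}
EXCLUDED_DOMAINS = {"example-contentfarm.com"}


def host_matches(host: str, domains: set[str]) -> bool:
    """Return True if host equals a listed domain or is a subdomain of one."""
    return any(host == d or host.endswith("." + d) for d in domains)


def classify_citation(url: str) -> str:
    """Classify one cited URL as 'approved', 'needs_review', or 'reject'."""
    host = (urlparse(url).hostname or "").lower()
    # Unparseable URLs and excluded domains are rejected outright.
    if not host or host_matches(host, EXCLUDED_DOMAINS):
        return "reject"
    if host_matches(host, APPROVED_DOMAINS):
        return "approved"
    # Anything not explicitly listed goes to a human reviewer.
    return "needs_review"


def triage(urls: list[str]) -> dict[str, list[str]]:
    """Group cited URLs by classification so reviewers see the risky ones first."""
    buckets: dict[str, list[str]] = {"approved": [], "needs_review": [], "reject": []}
    for url in urls:
        buckets[classify_citation(url)].append(url)
    return buckets
```

Defaulting unlisted domains to "needs_review" rather than "approved" keeps a human in the loop for anything the policy has not seen before, which matches the human-review requirement for impactful decisions.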

Adoption risks and mitigations

- Hallucinations and misattribution: Keep “cite sources” on by default; require reviewers to verify claims against links and documents.

- Reproducibility: Results can change as the web or models evolve. Archive generated briefs and source lists; snapshot key inputs for regulated workflows.

- Data residency and compliance: Confirm where data is processed and stored, and map NotebookLM usage to your GDPR/HIPAA/SOC controls. No on‑prem option means some sectors may need exceptions or alternates.

- API maturity: Pilot before automating critical workflows; monitor quotas, failure rates, and latency.
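For the reproducibility point, one way to archive a brief together with its inputs is a small manifest of file hashes and cited URLs. This is a sketch under stated assumptions: the brief and its inputs have been exported to local files, and the manifest layout and function names are hypothetical, not a NotebookLM export format.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large PDFs do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(brief_path, input_paths, cited_urls, created_at=None):
    """Describe one generated brief: its hash, its input files, and its sources."""
    created_at = created_at or datetime.now(timezone.utc).isoformat()
    brief = Path(brief_path)
    return {
        "created_at": created_at,
        "brief": {"file": brief.name, "sha256": sha256_of(brief)},
        "inputs": [
            {"file": Path(p).name, "sha256": sha256_of(Path(p))}
            for p in sorted(map(str, input_paths))
        ],
        # De-duplicate and sort so manifests are comparable across runs.
        "cited_urls": sorted(set(cited_urls)),
    }


def write_manifest(manifest, out_path):
    """Write the manifest as stable, diff-friendly JSON."""
    Path(out_path).write_text(
        json.dumps(manifest, indent=2, sort_keys=True), encoding="utf-8"
    )
```

Storing the manifest next to the archived brief lets a reviewer later confirm which input versions and sources a given brief was built from, even if the web pages or the model have since changed.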

Recommendations

- Pilot with a bounded team and three high‑value use cases (e.g., competitor brief, regulatory digest, literature review). Measure time saved, factual accuracy, and reviewer edits.

- Establish guardrails: domain allow/deny lists for web sources, mandatory citation checks, and least‑privilege sharing. Turn on audit logs and DLP before broad rollout.

- Standardize prompts and outputs: create templates for executive summaries, technical deep dives, and annotated bibliographies; define when to use Fast vs Deep Research.

- Run a bake‑off: compare NotebookLM against Perplexity Pro Research and your incumbent (Copilot/Claude/ChatGPT) using the same sources. Evaluate quality, latency, governance fit, and total cost.
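To keep the bake‑off comparable across tools, reviewer ratings can be rolled up into a single weighted score. The sketch below is illustrative only: the four criteria come from the list above, but the weights, the 1 to 5 rating scale, and the function names are assumptions to adjust for your own priorities.

```python
# Hypothetical weights for the four bake-off criteria; tune to your priorities.
WEIGHTS = {"quality": 0.40, "latency": 0.15, "governance": 0.30, "cost": 0.15}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion reviewer ratings (1 = poor, 5 = excellent) into one number."""
    if set(scores) != set(weights):
        raise ValueError("scores must cover exactly the weighted criteria")
    for name, value in scores.items():
        if not 1 <= value <= 5:
            raise ValueError(f"{name} rating must be between 1 and 5")
    total_weight = sum(weights.values())
    return sum(scores[k] * weights[k] for k in weights) / total_weight


def rank(tools: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (tool, score) pairs sorted from highest to lowest score."""
    scored = [(name, round(weighted_score(s), 2)) for name, s in tools.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

Each tool should be rated on the same questions and the same source set, ideally by reviewers who do not know which tool produced which brief, so the ranking reflects output quality rather than brand familiarity.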
