Executive Summary

Peec AI raised a $21M Series A led by Singular after hitting $4M ARR in ten months and onboarding 1,300 customers. Its platform tracks brand visibility, sentiment, and “source influence” across AI answers (e.g., ChatGPT), positioning Generative Engine Optimization (GEO) as a new growth channel. For operators, the signal is clear: generative search is becoming budgetable, yet measurement volatility, governance, and ROI attribution remain unresolved.

Key Takeaways

- GEO is moving from theory to tooling: 300 new customers/month, SMB pricing from €75/month for 25 prompts; enterprise from €424/month.

- Source influence isn’t about “tier 1” placement alone: LLM answers appear to weight semantically aligned, high‑intent content (niche blogs, forums, reviews) heavily.

- Risks: model volatility, opaque ranking, platform policy changes, and unclear revenue linkage from “prompt share of voice.”

- Market will crowd quickly: Peec faces Profound (NY) and OtterlyAI (AT), while SEO suites add AI tracking.

- US expansion (NYC sales office next year) and ~40 hires suggest a land‑grab; expect rapid feature velocity and evolving metrics.

Breaking Down the Announcement

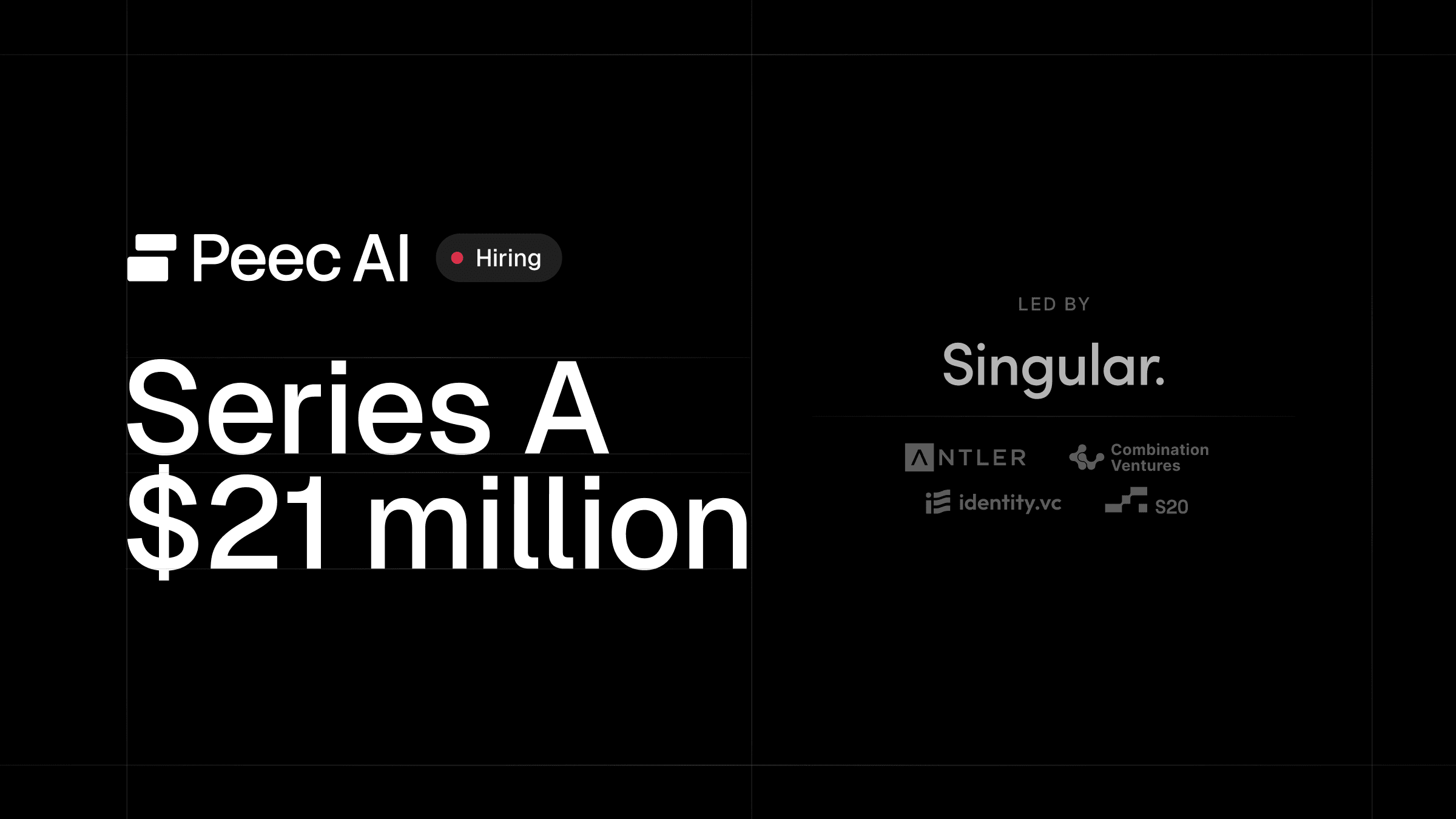

Just four months after its Seed round, Berlin‑based Peec AI closed a $21M Series A (Singular lead; Antler, Combination VC, identity.vc, S20 participating). CEO Marius Meiners says the valuation tripled to more than $100M. The 20‑person startup plans ~40 hires in six months, with a New York sales office in Q2 next year. Customers include Axel Springer, Chanel, n8n, ElevenLabs, and TUI.

What the product does: Peec tracks how brands appear in AI answers, surfaces sentiment, and maps which sources most influence those answers. Unlike SEO dashboards centered on keywords, Peec organizes around “prompts” (e.g., “best CRMs for fast‑growing companies”). Plans start at €75/month for 25 prompts and €169/month for 100 prompts; enterprise starts at €424/month. The dashboard proposes actions (e.g., “join r/CRM subreddit discussions”) aligned to the sources LLMs appear to weight for that query.
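
The announcement does not spell out how Peec computes its visibility or share‑of‑voice numbers. As a minimal sketch, assuming each tracked prompt is sampled several times and the brands named in each answer are extracted, two plausible prompt‑level metrics look like this. The input data, the brand names (AcmeCRM and so on), and the functions `visibility` and `share_of_voice` are hypothetical illustrations, not Peec's API.

```python
from collections import Counter

# Hypothetical sampled answers: for each tracked prompt, the set of brands
# mentioned in each answer. Prompts and brand names are illustrative only.
answers_by_prompt: dict[str, list[set[str]]] = {
    "best CRMs for fast-growing companies": [
        {"AcmeCRM", "BetaCRM"},
        {"AcmeCRM", "GammaCRM"},
        {"BetaCRM"},
        {"AcmeCRM"},
    ],
    "CRM with the best email automation": [
        {"AcmeCRM", "DeltaCRM"},
        {"DeltaCRM"},
    ],
}


def visibility(answers: list[set[str]], brand: str) -> float:
    """Fraction of sampled answers that mention `brand` at least once."""
    if not answers:
        return 0.0
    return sum(brand in a for a in answers) / len(answers)


def share_of_voice(answers: list[set[str]]) -> dict[str, float]:
    """Each brand's share of all brand mentions across the sampled answers."""
    mentions = Counter(brand for a in answers for brand in a)
    total = sum(mentions.values())
    return {b: n / total for b, n in mentions.items()} if total else {}


for prompt, answers in answers_by_prompt.items():
    print(prompt, visibility(answers, "AcmeCRM"), share_of_voice(answers))
```

Because share of voice is normalized across competitors, it can fall even while visibility holds steady if rivals start appearing more often; tracking both separates the two effects.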

What This Changes for Operators

This is the clearest sign yet that generative search is becoming its own performance channel. If customers increasingly ask ChatGPT instead of Google, winning “the answer box” matters more than ranking #3 on a results page that may never be opened. Peec’s pitch is a path to measure share of voice in AI answers, understand which sources sway models, and operationalize content and community programs to shift outcomes.

Operationally, GEO breaks old habits. LLMs appear to reward semantically tight, intent‑specific content and credible discussion signals (forums, expert posts, reviews) over generic brand mentions. Peec’s data suggests that a niche post titled “Best healthcare investors in Berlin” can outrank a broad Tier‑1 article for that exact prompt. That argues for reallocating budget toward targeted long‑tail content, community engagement, and review ecosystems: less PR vanity, more intent precision.
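
“Source influence” could be operationalized many ways. One simple proxy, assumed here rather than taken from Peec, is the fraction of repeated answers to a prompt that cite a given domain. It only works for engines that expose citations, and citation behavior varies by engine. The URLs below are placeholders and `source_influence` is a hypothetical helper.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical citations from three sampled answers to one prompt,
# e.g. "Best healthcare investors in Berlin". All URLs are placeholders.
cited_urls_per_answer = [
    ["https://niche-vc-blog.example/best-healthcare-investors-berlin",
     "https://www.reddit.com/r/startups/comments/abc"],
    ["https://niche-vc-blog.example/best-healthcare-investors-berlin",
     "https://tier1-news.example/european-vc-roundup"],
    ["https://niche-vc-blog.example/best-healthcare-investors-berlin"],
]


def source_influence(answers: list[list[str]]) -> list[tuple[str, float]]:
    """Rank domains by the fraction of answers that cite them at least once."""
    if not answers:
        return []
    counts: Counter = Counter()
    for urls in answers:
        # Use a set so a domain cited twice in one answer counts once there.
        counts.update({urlparse(u).netloc.removeprefix("www.") for u in urls})
    return [(domain, c / len(answers)) for domain, c in counts.most_common()]


print(source_influence(cited_urls_per_answer))
```

In this toy data the niche blog is cited in every answer while the broad roundup appears once, the pattern the paragraph above describes; a real pilot would need many more samples per prompt before drawing that conclusion.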

Where GEO Works—and Where It Breaks

Strengths: Peec gives marketers a structured way to track presence and sentiment across prompts, then ties actions to observable sources (e.g., Reddit threads, niche blogs, G2, GitHub). For teams overwhelmed by shifting AI surfaces (ChatGPT, Perplexity, Gemini, Copilot), consolidating visibility and “what to do next” is valuable.

Constraints: LLM answers are stochastic and shift with model updates, user context, and ongoing training. Unlike SEO, there’s no stable “ranked index” and citation behavior varies by engine. That makes causality and ROI attribution difficult. Platform policies can also invalidate tactics—forum participation that looks like manipulation can trigger bans, and model providers can down‑rank low‑quality patterns without notice. Finally, Peec’s pipeline relies on filtering raw query datasets to isolate commerce‑relevant prompts; coverage and representativeness are critical and should be validated in pilots.
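
Because answers are stochastic, a single query per prompt says little. A minimal sketch of one way to quantify that noise, assuming each prompt is sampled repeatedly and each answer is scored as mention or no mention: a Wilson score interval around the visibility rate. The sample counts are hypothetical, and `wilson_interval` is an illustrative helper, not part of any vendor's tooling.

```python
import math


def wilson_interval(hits: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a mention rate (hits out of n)."""
    if n == 0:
        return (0.0, 1.0)  # no samples: the rate could be anything
    p = hits / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return (max(0.0, centre - half), min(1.0, centre + half))


# Hypothetical: brand mentioned in 6 of 10 samples last week, 8 of 10 this week.
last_week = wilson_interval(6, 10)
this_week = wilson_interval(8, 10)
overlap = last_week[1] >= this_week[0]
print(last_week, this_week, "overlapping" if overlap else "separated")
```

Here a jump from 6/10 to 8/10 mentions sits inside overlapping intervals, so it may be noise rather than the effect of a content change. Overlapping intervals are a conservative heuristic, not a formal significance test; more samples per prompt narrow the intervals, and model updates can still shift the baseline.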

Competitive and Market Context

Peec’s advantage today is speed, focused UX, and “source insights” that translate into concrete actions. But the category will compress. SEO incumbents are adding AI answer tracking; PR intelligence tools are probing LLM influence graphs; newcomers like Profound and OtterlyAI target the same budget. Expect convergence: share‑of‑voice, sentiment, and content recommendations across AI chats plus traditional search dashboards.

If Peec sustains its edge, it will be because its data pipeline stays ahead of model drift and its recommendations prove repeatably accretive to revenue, not just visibility. Enterprises should press vendors on engine coverage (ChatGPT, Claude, Gemini, Perplexity, Copilot), geographic variance, and offline cohort analysis tying prompt‑level visibility to conversion.

Governance and Risk

Set boundaries early. Engagement in forums and reviews must follow platform rules and FTC/ASA endorsement guidance. Maintain disclosures, avoid astroturfing, and protect PII when seeding expert content. Treat GEO as experimentation: document hypotheses, avoid “set and forget,” and expect periodic re‑baselining as models change.

Recommendations

- Run a 90‑day GEO pilot: instrument 25–100 high‑intent prompts across two to three engines; track visibility, sentiment, and source influence weekly.

- Rebalance content mix: fund precise, question‑matched assets and community participation (forums, expert posts, reviews) over broad PR hits.

- Build attribution bridges: tag GEO‑driven content and use cohort‑level lift tests to link prompt share‑of‑voice to pipeline and revenue.

- Harden governance: codify participation rules, disclosure templates, and review moderation; audit quarterly for policy and ethics compliance.

- Diversify tools: evaluate Peec alongside SEO platforms’ AI modules; prefer vendors with transparent coverage, drift reporting, and API access.
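
The “attribution bridges” step needs some way to compare cohorts. A minimal sketch, assuming you can split comparable accounts, regions, or time windows into a cohort exposed to GEO‑targeted content and a holdout, then count conversions in each: relative lift plus a textbook two‑proportion z‑test. The cohort figures are hypothetical and `conversion_lift` is an illustrative helper; this is a generic statistical test, not a Peec feature.

```python
import math


def conversion_lift(conv_t: int, n_t: int, conv_c: int, n_c: int) -> dict[str, float]:
    """Relative lift of treated over control, with a two-proportion z-test.

    conv_t / n_t: conversions and cohort size exposed to GEO-targeted content.
    conv_c / n_c: conversions and cohort size in the holdout.
    """
    p_t, p_c = conv_t / n_t, conv_c / n_c
    pooled = (conv_t + conv_c) / (n_t + n_c)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_t + 1 / n_c))
    z = (p_t - p_c) / se if se > 0 else 0.0
    # Two-sided p-value from the standard normal CDF, built from math.erf.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    lift = (p_t - p_c) / p_c if p_c > 0 else math.inf
    return {"lift": lift, "z": z, "p_value": p_value}


# Hypothetical pilot: 120 of 2,000 exposed accounts converted vs 90 of 2,000 held out.
result = conversion_lift(120, 2000, 90, 2000)
print(result)
```

With these made‑up numbers the exposed cohort converts about 33% better and the two‑sided p‑value lands near 0.03. The test assumes independent units and reasonably large counts; because exposure to AI answers is rarely randomized, treat the result as directional evidence unless the holdout was assigned at random.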
