Why This Outage Actually Matters

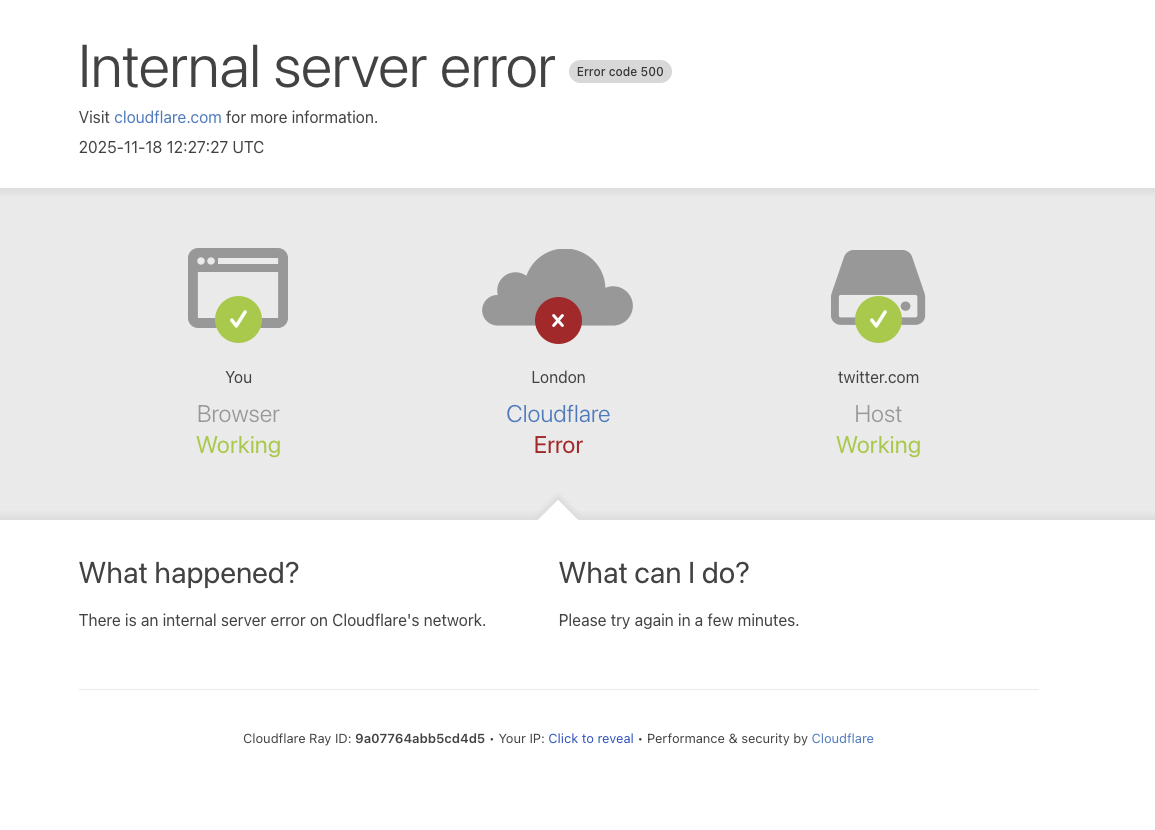

A Cloudflare outage on Tuesday disrupted access to prominent services including ChatGPT, Claude, Spotify, X, and Signal. The immediate business impact was straightforward: if your front door (DNS, CDN, edge compute, API routing) lived on a single vendor, you were down with no clean bypass. Cloudflare has identified the issue and is deploying a fix, but the bigger shift is strategic: operators can no longer treat a single edge provider as “good enough” for AI-era availability requirements.

Key Takeaways

- Concentration risk: Collapsing DNS, CDN, API routing, and edge compute into one vendor creates correlated failure modes.

- Multi-provider resilience is now table stakes: dual-authoritative DNS, multi-CDN, and distributed API gateways limit blast radius.

- Expect roughly 10–20% cost overhead for redundancy; trade it for materially lower downtime risk on revenue-critical paths.

- Governance matters: SLAs don’t save you; runbooks, synthetic monitoring, and game days do.

- Design for graceful degradation: keep core flows (auth, payments, inference) operable when “smart edge” features fail.

Breaking Down the Outage

What’s new isn’t that a major network had issues; it’s that modern AI products centralize multiple availability tiers behind one provider. In this incident, internal service degradation and API failures during maintenance cascaded across DNS resolution, CDN delivery, and edge execution. Any stack that relied on Cloudflare for authoritative DNS, caching, TLS termination, rate limiting, and Workers had no easy failover. AI apps were hit doubly hard: even when compute was fine, the public gateway was unavailable.

This matters because AI usage spikes are bursty, global, and unforgiving. If your customer support assistant, content pipeline, or inference API is down for 30–60 minutes during peak hours, you don’t just lose requests; you burn user trust and SLO error budget. The lesson isn’t “avoid Cloudflare”; it’s “avoid single points of correlated failure,” no matter the vendor.

Operator’s Perspective: Where to Add Redundancy

DNS: Run dual-authoritative DNS across two independent providers with automated zone sync (e.g., Cloudflare + Route 53, or NS1 + Azure DNS). Keep TTLs at 30–60 seconds for public endpoints and test resolver behavior quarterly. Health-checked failover on a single DNS provider is better than nothing, but it still leaves you exposed if that provider’s control plane or authoritative nameservers degrade.
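Automated zone sync boils down to a diff: what must change on the secondary provider so it serves the same answers as the primary. The sketch below assumes a hypothetical, provider-neutral record model (not any vendor’s API) and also flags records whose TTL exceeds the 60-second ceiling suggested above.

```python
from typing import Dict, FrozenSet, List, Tuple

# Hypothetical provider-neutral record model: (name, type) -> (ttl_seconds, values).
# Real provider APIs (Route 53, NS1, etc.) use their own shapes; map them first.
RecordKey = Tuple[str, str]
RecordSet = Tuple[int, FrozenSet[str]]  # frozenset makes value order irrelevant
Zone = Dict[RecordKey, RecordSet]

# Each provider serves its own SOA, so it is excluded from the comparison.
PROVIDER_MANAGED_TYPES = {"SOA"}
MAX_PUBLIC_TTL = 60  # upper end of the 30-60 s range for public endpoints


def _syncable(zone: Zone) -> Zone:
    """Drop record types that each provider legitimately manages itself."""
    return {k: v for k, v in zone.items() if k[1] not in PROVIDER_MANAGED_TYPES}


def plan_zone_sync(primary: Zone, secondary: Zone) -> Dict[str, List[RecordKey]]:
    """Return the record sets to upsert on, or delete from, the secondary."""
    src, dst = _syncable(primary), _syncable(secondary)
    upsert = sorted(k for k, v in src.items() if dst.get(k) != v)
    delete = sorted(k for k in dst if k not in src)
    return {"upsert": upsert, "delete": delete}


def ttl_violations(zone: Zone, limit: int = MAX_PUBLIC_TTL) -> List[RecordKey]:
    """List record sets whose TTL would slow down a DNS-based failover."""
    return sorted(k for k, (ttl, _) in _syncable(zone).items() if ttl > limit)
```

In practice you would build both `Zone` dicts from each provider’s export and apply the plan through that provider’s API or a multi-provider sync tool such as octoDNS; the diff logic stays the same.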

CDN and origin: Use multi-CDN with automatic failover and performance-based routing (common choices include Akamai, Fastly, and CloudFront). Keep a “lowest common denominator” path for your critical endpoints: no provider-specific edge features in the hot path unless you have an equivalent implementation on the secondary. Budget roughly $100/month for multi-origin features on enterprise CDNs, plus usage fees that vary with traffic.
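The “lowest common denominator” rule is easy to check mechanically if you keep an inventory of which edge features each critical route uses. A minimal sketch, assuming a hypothetical hand-maintained inventory with made-up feature labels:

```python
from typing import Dict, List, Set


# Hypothetical inventory: the edge features each critical route relies on
# at the primary CDN, and the features the secondary CDN can reproduce.
def parity_gaps(route_features: Dict[str, Set[str]],
                secondary_supports: Set[str]) -> Dict[str, List[str]]:
    """Map each route to the features it uses that the secondary cannot serve."""
    gaps: Dict[str, List[str]] = {}
    for route, features in sorted(route_features.items()):
        missing = sorted(features - secondary_supports)
        if missing:
            gaps[route] = missing
    return gaps


routes = {
    "/checkout": {"tls", "cache_bypass"},
    "/api/infer": {"tls", "rate_limit", "edge_auth_script"},
}
secondary = {"tls", "cache_bypass", "rate_limit"}
print(parity_gaps(routes, secondary))  # {'/api/infer': ['edge_auth_script']}
```

Any non-empty result is a route that will break on failover; either move that feature off the hot path or build the equivalent on the secondary.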

API gateways: Distribute ingress across two gateways (e.g., AWS API Gateway + Cloudflare, or GCP Endpoints + Fastly Compute). Steer traffic via DNS latency routing or a global accelerator. Expect roughly $100/month per gateway at modest traffic, before usage-based fees.
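Latency-based steering across two gateways can be sketched as “pick the fastest healthy gateway.” The version below also adds a switch margin so small latency wobbles don’t make traffic flap between providers. Gateway names, latencies, and the 10 ms default margin are illustrative assumptions, not values from any managed routing product.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Gateway:
    name: str           # label for an ingress path, e.g. "gateway-a" (hypothetical)
    healthy: bool       # latest health-check result
    latency_ms: float   # recent measured latency from this client region


def choose_gateway(gateways: List[Gateway],
                   current: Optional[str] = None,
                   switch_margin_ms: float = 10.0) -> Optional[str]:
    """Pick the fastest healthy gateway, but only switch away from `current`
    when the improvement is at least `switch_margin_ms` (avoids flapping)."""
    healthy = [g for g in gateways if g.healthy]
    if not healthy:
        return None  # no healthy ingress: caller serves a static degraded response
    best = min(healthy, key=lambda g: g.latency_ms)
    incumbent = next((g for g in healthy if g.name == current), None)
    if incumbent is not None and incumbent.latency_ms - best.latency_ms < switch_margin_ms:
        return incumbent.name  # gain too small to justify moving traffic
    return best.name
```

If the current gateway becomes unhealthy it drops out of `healthy`, so failover happens immediately regardless of the margin.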

Inference endpoints: Run active-active or hot-warm across regions and, where feasible, across providers (e.g., SageMaker + Vertex AI, or an LLM API fallback such as Anthropic ↔ OpenAI with capability-aware routing). Keep prompts, safety layers, and evaluation suites in a shared repository to swap providers with minimal drift. Plan for several hundred dollars per month for standby capacity at low scale; more at production volumes.
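Capability-aware routing means a fallback provider is only eligible if it supports what the request needs (tool calling, vision, and so on). A minimal sketch, assuming hypothetical capability labels and a hypothetical `call` wrapper per provider; it does not use any vendor SDK.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Set, Tuple


@dataclass
class Provider:
    name: str
    capabilities: Set[str]       # e.g. {"chat", "tools", "vision"} (hypothetical labels)
    call: Callable[[str], str]   # hypothetical client wrapper; raises on failure


class NoProviderAvailable(RuntimeError):
    """Raised when no provider can both satisfy and serve the request."""


def route_completion(prompt: str, required: Set[str],
                     providers: Sequence[Provider]) -> Tuple[str, str]:
    """Try providers in priority order, skipping any that lack a required
    capability; return (provider_name, completion) from the first success."""
    errors: List[str] = []
    for p in providers:
        if not required <= p.capabilities:
            continue
        try:
            return p.name, p.call(prompt)
        except Exception as exc:  # sketch: treat any client error as "try next"
            errors.append(f"{p.name}: {exc}")
    raise NoProviderAvailable("; ".join(errors) or "no provider has the required capabilities")
```

Returning the provider name alongside the completion lets you log which path served each request, which helps when checking for behavioral drift against your shared evaluation suite.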

Monitoring and game days: Run synthetic checks from multiple networks (Catchpoint, ThousandEyes, or open-source tooling such as Prometheus with Grafana) and route alerts through on-call tooling (PagerDuty or Opsgenie). Budget $50–$150/month for base coverage. Run quarterly failover drills that simulate DNS provider loss, CDN degradation, and edge compute unavailability.
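The point of probing from several networks is to separate “our endpoint is down” from “one probe location has a bad path.” One common approach is to page only when a quorum of independent networks agree. A minimal sketch, assuming results have already been collected into a dict keyed by endpoint and hypothetical vantage-network names:

```python
from typing import Dict, List

# results[endpoint][vantage_network] is True when that probe succeeded.
Results = Dict[str, Dict[str, bool]]


def endpoints_to_page(results: Results, quorum: int = 2) -> List[str]:
    """Return endpoints that fail from at least `quorum` independent networks.
    A single failing vantage point is more likely a probe-side problem."""
    return sorted(
        endpoint
        for endpoint, by_network in results.items()
        if sum(not ok for ok in by_network.values()) >= quorum
    )
```

With three vantage networks, a quorum of 2 tolerates one flaky probe location while still paging quickly on a real edge outage.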

Cost, Complexity, and Trade-offs

Redundancy adds complexity: config drift across providers, divergent cache behavior, and edge feature parity all require discipline. Latency can tick up a few milliseconds when traffic steering adds an extra round trip. Dual-provider DNS also requires careful zone synchronization and consistent health checks. Still, the economics usually favor resilience: a 10–20% cost premium is often smaller than the financial and reputational cost of even one major outage.
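The cost argument is simple arithmetic: compare the annual redundancy premium with the outage cost you expect to avoid. The sketch below makes that explicit; every input is an assumption you would replace with your own figures, and reputational damage only counts to the extent you fold it into the hourly cost.

```python
from dataclasses import dataclass


@dataclass
class RedundancyCase:
    annual_edge_spend: float       # current single-provider spend per year
    premium_pct: float             # e.g. 0.10 to 0.20, per the range above
    outage_hours_per_year: float   # expected edge-related downtime without redundancy
    cost_per_outage_hour: float    # lost revenue plus support and SLA costs
    downtime_reduction: float      # fraction of that downtime redundancy removes


def net_annual_benefit(c: RedundancyCase) -> float:
    """Expected outage cost avoided minus the redundancy premium."""
    premium = c.annual_edge_spend * c.premium_pct
    avoided = c.outage_hours_per_year * c.cost_per_outage_hour * c.downtime_reduction
    return avoided - premium


# Illustrative inputs only: $120k/yr edge spend, 15% premium, one outage hour
# per year at $50k/hour, 80% of that downtime avoided.
case = RedundancyCase(120_000, 0.15, 1.0, 50_000, 0.8)
print(net_annual_benefit(case))  # roughly 22000: $40k avoided vs. an $18k premium
```

A negative result means that, for those inputs, redundancy on that path does not pay for itself on expected value alone.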

Competitive Angle

Cloudflare’s integrated edge is fast and feature-rich; Fastly offers strong programmability; Akamai brings scale and mature multi-origin tooling; hyperscalers (AWS, Google, Azure) simplify colocation with compute. The winning posture isn’t picking “the best” edge; it’s making your front door portable. If you rely on Workers, Durable Objects, or Cloudflare-specific WAF rules, build a second implementation on your alternate gateway and keep it tested. The same discipline applies if your primary is Akamai EdgeWorkers or Fastly Compute.

Governance and Risk

Regulators and enterprise customers increasingly expect documented business continuity plans: quantified RTO/RPO targets, validated failovers, and communication channels independent of your primary domain (for example, a status page on a separate domain, registrar, and DNS provider). SLA credits rarely compensate for the real loss; your best defense is designed-in resilience plus transparent incident comms.
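A status page only helps if it doesn’t share a failure domain with the site it reports on. A minimal audit sketch, assuming you record each domain’s dependencies by hand (for instance from WHOIS and NS lookups); the field names and provider labels are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class DomainDeps:
    domain: str
    registrar: str
    dns_providers: FrozenSet[str]   # providers serving the domain's NS set
    edge_provider: str              # CDN or proxy in front of the site


def shared_failure_domains(primary: DomainDeps, status: DomainDeps) -> List[str]:
    """List dependencies the status page shares with the primary domain."""
    issues: List[str] = []
    if primary.registrar == status.registrar:
        issues.append(f"registrar: {primary.registrar}")
    for provider in sorted(primary.dns_providers & status.dns_providers):
        issues.append(f"dns: {provider}")
    if primary.edge_provider == status.edge_provider:
        issues.append(f"edge: {primary.edge_provider}")
    return issues
```

An empty list means no overlap among the dependencies you recorded; it says nothing about ones you didn’t list, so keep the inventory current.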

What Leaders Should Do Next

- Within 30 days: Implement dual-authoritative DNS and stand up a second CDN/origin path for your top three revenue endpoints. Set TTLs to 60s and test a controlled failover.

- Within 60 days: Distribute ingress across two API gateways; remove provider-unique features from the hot path or create parity on the backup. Add synthetic monitoring from at least three independent networks.

- Within 90 days: Establish an LLM/provider fallback policy with capability-aware routing and a runbook for switching models or regions. Run a full game day simulating primary edge loss.

- Ongoing: Track dependency drift monthly, review incident postmortems, and keep comms templates ready (status page on a separate domain and DNS).
