Why This Matters Right Now

Lovable, a year-old Stockholm-based “vibe coding” startup, says it hit $200M in annual recurring revenue (ARR) and doubled revenue in four months. The company credits its decision to stay in Europe, its ability to hire Silicon Valley-caliber talent locally, and an active open-source community. If accurate, the milestone signals accelerating enterprise spend on AI-assisted coding and raises the bar for what buyers expect beyond autocomplete: tools that scaffold full applications and workflows, not just lines of code.

For operators, the question isn’t whether AI coding will show up in your toolchain; it’s which vendor will deliver measurable cycle-time gains with acceptable risk, cost, and compliance overhead. Lovable’s claims put pressure on incumbents (GitHub Copilot, Amazon Q Developer (formerly AWS CodeWhisperer), Sourcegraph Cody, Cursor, JetBrains AI) to prove end-to-end development acceleration rather than incremental keystroke savings.

Key Takeaways

- Lovable reports $200M ARR and a four-month revenue doubling; expect aggressive enterprise and mid-market outreach built on “full-stack generation” value propositions.

- The European base is a strategic angle: talent retention, data residency, and alignment with the EU AI Act and GDPR will appeal to regulated buyers.

- If the claims hold, latency and workflow integration, rather than raw model quality, are the likely differentiators driving adoption.

- ARR figures at this stage can be volatile; scrutinize the seat vs usage mix, prepayments, and net revenue retention (NRR) before committing.

- Security, IP provenance, and governance discipline remain the gating factors for scaling beyond pilots.

Breaking Down the Announcement

The company positions “vibe coding” as context-aware, intent-driven generation that outputs project structures, UIs, and backend logic, making it more like an AI pair programmer that scaffolds full features than a code completer. Lovable cites user-experience improvements that cut onboarding time in half and claims many new users ship a first project within 24 hours. It also points to hybrid infrastructure and a reported migration of most of its inference to TPU v5 pods, which it says materially reduced latency.

On financing, Lovable says it has raised $225M+, including a $200M Series A. Public reports place its valuation in the low single-digit billions (some cite ~$1.8B; others differ). The exact figure matters less than the signal: venture appetite for European AI infrastructure and applications remains strong after marquee rounds for Mistral, Aleph Alpha, and others. Engineering headcount reportedly tops 150, with 40% hired from outside Sweden, which is consistent with the narrative that Europe's quality of life and research ecosystems can attract senior talent, including from the US.

What This Changes for Buyers

Most teams already trial copilots for autocomplete. Lovable’s pitch pushes the frontier to task-level or feature-level generation: “build a CRUD app with auth and billing,” “refactor this service into event-driven components,” or “create an admin UI and integration tests.” If the platform reliably scaffolds 50-70% of such work, the time-to-value gain is substantial, especially for internal tools, migration projects, and greenfield prototypes.

Latency is the silent killer in AI coding. Sub-second token streaming, deterministic retries, and well-managed context windows drive perceived speed more than raw model size does. Lovable’s reported infrastructure choices suggest a focus on interactive performance, which matters both for developer adoption and for containing the “time tax” of waiting on the model mid-flow.

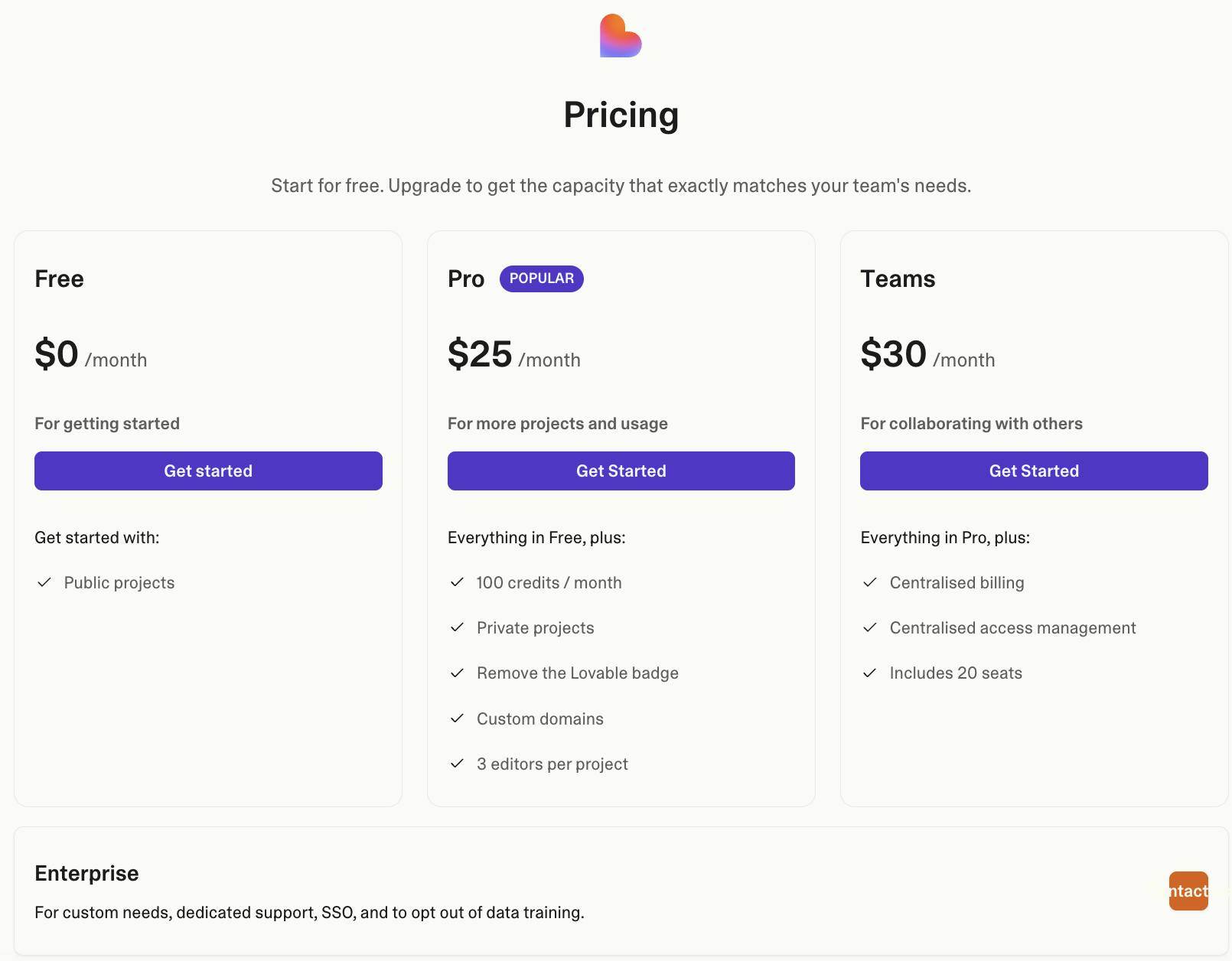

Economics will decide scale. At ~$20-$39 per seat per month ($240-$468 per year), $200M ARR would take roughly 430,000 to 830,000 paid seats on seat pricing alone, which implies either very large seat counts or substantial usage-based revenue and enterprise deals. Expect mixed pricing (seats plus metered generations) and volume tiers. Build a TCO model that accounts for tokens, premium features (agents, test generation), and infra egress, then compare it to the Copilot/JetBrains/Cody bundles your teams already use.
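
A TCO comparison can start as a small script. The sketch below is a minimal, hypothetical model: the `VendorCost` fields, the per-seat token allowance, the overage rate, and every price in the usage example are illustrative assumptions, not Lovable's or any incumbent's actual pricing. It sums seat fees, premium add-ons, metered token overage, and a flat egress estimate per month.

```python
from dataclasses import dataclass


@dataclass
class VendorCost:
    """Hypothetical monthly pricing inputs for one AI coding vendor (all USD)."""
    name: str
    seat_price: float           # per seat per month
    addons_per_seat: float      # premium features (agents, test generation), per seat per month
    included_tokens: int        # tokens per seat per month covered by the seat fee
    overage_per_million: float  # price per million tokens beyond the allowance
    egress: float               # flat monthly infra/network egress estimate


def monthly_tco(v: VendorCost, seats: int, tokens_per_seat: int) -> float:
    """Seat fees + add-ons + metered overage + egress for one month."""
    overage_tokens = max(0, tokens_per_seat - v.included_tokens) * seats
    overage_cost = overage_tokens / 1_000_000 * v.overage_per_million
    return seats * (v.seat_price + v.addons_per_seat) + overage_cost + v.egress


# Illustrative inputs only; replace with quoted prices and measured usage.
vendor_a = VendorCost("Vendor A", 39.0, 10.0, 2_000_000, 10.0, 500.0)
vendor_b = VendorCost("Vendor B", 19.0, 0.0, 0, 4.0, 0.0)

for v in (vendor_a, vendor_b):
    print(f"{v.name}: ${monthly_tco(v, seats=200, tokens_per_seat=3_000_000):,.2f}/month")
```

With these made-up inputs the script prints $12,300.00/month for Vendor A and $6,200.00/month for Vendor B. Because overage scales with tokens per seat, rerun the comparison with usage measured during a pilot rather than estimates.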

Competitive Angle and Market Context

GitHub Copilot remains the default in many orgs due to distribution, but it’s primarily autocomplete with some chat and refactor flows. Sourcegraph Cody, Cursor, and Replit are pushing into agentic, repo-aware coding with deeper project context. Lovable’s differentiation story hinges on end-to-end scaffolding, tighter IDE/CI hooks, and compliance posture for EU buyers. If its open-source community is genuinely active (templates, plugins, evals), that can compress feedback loops and lower acquisition costs.

Timing helps: teams are moving from pilot to production after early productivity wins, while the EU AI Act and GDPR are forcing procurement rigor on data handling, model transparency, and auditability. A Europe-first vendor with data residency controls and independent audits can win deals Copilot can’t, provided feature depth is competitive.

Risks, Unknowns, and Governance

Revenue quality: Year-one ARR often annualizes a recent usage spike or includes large prepayments. Ask for cohort performance, NRR, and the split of seats vs consumption.

Margin: If inference relies on premium accelerators, gross margins can compress unless caching, smaller models, or tiering mitigate costs.

Product risk: Generated code quality, test coverage, and maintainability vary; without opinionated guardrails, you risk silent technical debt.
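
The revenue-quality questions above reduce to a few ratios. A minimal sketch follows; the function names and the monthly revenue series (USD millions) are hypothetical, and the NRR formula is the common cohort definition (starting ARR plus expansion, minus contraction and churn, divided by starting ARR), which a given vendor may compute differently.

```python
def run_rate_arr(monthly_revenue: list[float], window: int = 1) -> float:
    """Annualize the average of the last `window` months of revenue."""
    if not 1 <= window <= len(monthly_revenue):
        raise ValueError("window must be between 1 and the number of months")
    recent = monthly_revenue[-window:]
    return sum(recent) * 12 / len(recent)


def net_revenue_retention(start_arr: float, expansion: float,
                          contraction: float, churned: float) -> float:
    """Cohort NRR over a period (typically 12 months)."""
    if start_arr <= 0:
        raise ValueError("start_arr must be positive")
    return (start_arr + expansion - contraction - churned) / start_arr


def consumption_share(seat_revenue: float, usage_revenue: float) -> float:
    """Fraction of revenue that is metered rather than seat-based."""
    return usage_revenue / (seat_revenue + usage_revenue)


# Hypothetical monthly revenue in USD millions, ending in a spike.
monthly = [5, 6, 8, 12, 20]
print(run_rate_arr(monthly, window=1))  # 240.0: annualizes the spike month
print(run_rate_arr(monthly, window=3))  # 160.0: trailing three-month average
print(net_revenue_retention(100, 30, 5, 10))
```

The gap between 240 and 160 on the same series is the kind of spread to ask about when a vendor quotes ARR from its most recent month.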

Compliance and IP: Ensure GDPR data boundaries, data retention controls, and AI Act-aligned documentation (model cards, risk assessments). For code provenance, require license filters, SBOM support, and IP indemnity.

Security: Run SAST/DAST and SCA on AI-generated PRs by default, and require audit logs of prompts, model versions, and diffs to satisfy internal audit and incident response.
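
Audit logs are easier to trust when tampering is detectable. One common approach, sketched below under assumptions, is a hash-chained, append-only log: each record stores the SHA-256 of the previous record, so editing an earlier entry breaks verification. The record schema (`actor`, `repo`, `model_version`, `scans_passed`, and so on) and the example values are hypothetical. This sketch stores digests of the prompt and diff; a real deployment would likely keep the full text in separate, retention-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def _digest(record: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    return _sha256(json.dumps(record, sort_keys=True))


def append_record(log: list[dict], *, actor: str, repo: str, model_version: str,
                  prompt: str, diff: str, scans_passed: dict[str, bool]) -> dict:
    """Append one record for an AI-generated change (hypothetical schema)."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "repo": repo,
        "model_version": model_version,  # pinned model identifier
        "prompt_sha256": _sha256(prompt),
        "diff_sha256": _sha256(diff),
        "scans_passed": scans_passed,    # e.g. {"sast": True, "sca": True}
        "prev_hash": log[-1]["entry_hash"] if log else GENESIS,
    }
    record["entry_hash"] = _digest(record)
    log.append(record)
    return record


def verify_chain(log: list[dict]) -> bool:
    """Return False if a record was altered or the chain links are broken."""
    prev = GENESIS
    for record in log:
        body = {k: v for k, v in record.items() if k != "entry_hash"}
        if body["prev_hash"] != prev or _digest(body) != record["entry_hash"]:
            return False
        prev = record["entry_hash"]
    return True


log: list[dict] = []
append_record(log, actor="dev@example.com", repo="billing-service",
              model_version="model-2025-01", prompt="Add retry to webhook handler",
              diff="--- a/handler.py\n+++ b/handler.py\n",
              scans_passed={"sast": True, "sca": True})
print(verify_chain(log))  # True
log[0]["model_version"] = "model-2025-02"  # simulate tampering
print(verify_chain(log))  # False
```

A hash chain detects edits and reordering but not truncation of the newest records on its own; periodically anchoring the latest `entry_hash` somewhere the log writer cannot modify closes that gap.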

Recommendations

- Run a 6-8 week proof-of-value on two real backlogs (an internal tool and a refactor). Baseline cycle time, defect density, and rework hours, and require the vendor to report p95 latency and task success rates.

- Demand enterprise controls: on-prem or VPC isolation options, zero-retention toggles, repo-scoped context, and approval workflows for multi-file changes. Lock model versions for reproducibility.

- Negotiate for transparency: monthly usage dashboards, seat/consumption split, NRR reporting, and hard budget caps. Include a 90-day exit clause and IP indemnity.

- Bake in governance: mandatory SAST/SCA on AI PRs, license guardrails, audit logs, and a DPA aligned to GDPR and the EU AI Act’s transparency expectations.

- Benchmark against incumbents: pit Lovable against Copilot/Cody/Cursor on the same tasks and datasets; choose the vendor that wins on throughput per dollar and governance fit, not demos.
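
The proof-of-value and benchmarking steps above need a shared scorecard so vendors are compared on the same terms. The sketch below assumes each task run is logged with its latency, a pass/fail outcome, and its cost; the `TaskRun` type, the definition of "succeeded", and the example data are hypothetical. It uses the nearest-rank method for p95 and reports successful tasks per dollar as the throughput-per-dollar measure.

```python
from dataclasses import dataclass


@dataclass
class TaskRun:
    latency_s: float  # wall-clock time until the vendor returned a usable result
    succeeded: bool   # hypothetical criterion, e.g. PR merged after review and CI
    cost_usd: float   # seat share plus metered usage attributed to this task


def p95(samples: list[float]) -> float:
    """95th percentile by the nearest-rank method: the ceil(0.95 * n)-th smallest value."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = (95 * len(ordered) + 99) // 100  # integer ceiling avoids float rounding
    return ordered[rank - 1]


def scorecard(runs: list[TaskRun]) -> dict[str, float]:
    if not runs:
        raise ValueError("no runs")
    successes = sum(r.succeeded for r in runs)
    total_cost = sum(r.cost_usd for r in runs)
    return {
        "p95_latency_s": p95([r.latency_s for r in runs]),
        "success_rate": successes / len(runs),
        "successes_per_usd": successes / total_cost if total_cost > 0 else float("nan"),
    }


# Hypothetical pilot: 20 tasks, 15 succeed, $0.50 each.
runs = [TaskRun(latency_s=float(i), succeeded=i <= 15, cost_usd=0.5) for i in range(1, 21)]
print(scorecard(runs))
```

For the hypothetical data this reports a p95 latency of 19.0 s, a 0.75 success rate, and 1.5 successful tasks per dollar. Running the same task set through each vendor and comparing these numbers side by side keeps the decision anchored to measured throughput rather than demos.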
