TL;DR / Key Takeaways

- ChatGPT mainstreamed generative AI, driving Nvidia stock up 979% and concentrating 35% of S&P 500 weight in Big Tech.

- Vendor lock-in, cost overruns, and compliance gaps are emerging bottlenecks—early pilots must budget for inference, governance, and multi-cloud.

- Decision framework: choose hosted SaaS for rapid proof-of-concept, managed cloud for scale with some control, self-hosted for strict data/compliance needs.

- Executive playbook: inventory use cases (0-3 months), hedge vendors (3-6 months), model budgets (6-9 months), invest in governance (ongoing).

Introduction — Three Years After ChatGPT, My Perspective

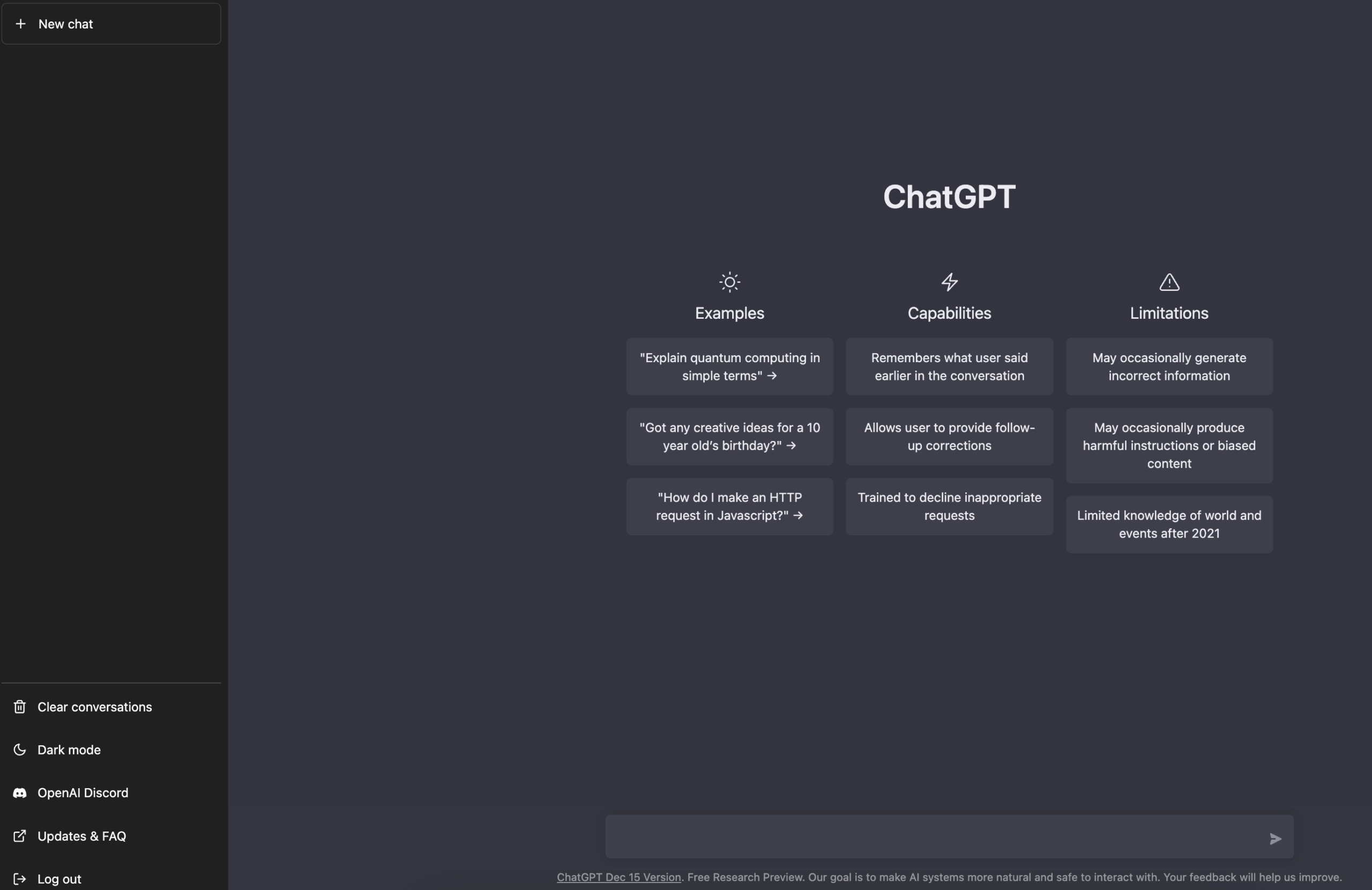

When OpenAI launched “a model called ChatGPT” on November 30, 2022, I was skeptical that a consumer-grade chatbot would reshape enterprise roadmaps. Three years in, the impact is undeniable: generative AI has rewritten software priorities, redirected venture capital, and concentrated outsized returns in a handful of players. Nvidia’s share price is up 979% since launch, and seven tech giants now account for 35% of the S&P 500 market weight, up from roughly 20% three years ago. As we ride this wave, leaders face a choice: chase speculative hype or build lasting value with disciplined cost and governance controls.

Breaking Down the Shift — Three Technical & Commercial Levers

ChatGPT’s debut did three things at once:

- Accessible LLMs: Conversational UI and simple APIs lowered the bar for experimentation.

- Instant Consumer Appeal: The ChatGPT app jumped to #1 in Apple’s free charts within days, spotlighting consumer demand.

- Resource Reorientation: Enterprises funneled budgets toward cloud compute and specialized GPUs, fueling infrastructure races.

Case in point: a mid-market retailer I advised cut customer-service headcount by 20% after deploying a GPT-4-powered chatbot, slashing support costs by 50%. But those savings came with a catch—monthly inference bills climbed from $10,000 to $65,000 as usage tripled.
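
Those figures imply that volume alone does not explain the bill. The quick check below is a sketch that assumes "usage tripled" refers to request volume, which the anecdote does not actually specify:

```python
# Figures from the retailer anecdote above.
old_bill = 10_000      # USD per month before the usage increase
new_bill = 65_000      # USD per month after the increase
usage_multiplier = 3   # assumption: "usage tripled" means request volume tripled

bill_multiplier = new_bill / old_bill                      # how much the bill grew
unit_cost_multiplier = bill_multiplier / usage_multiplier  # growth not explained by volume
volume_only_bill = old_bill * usage_multiplier             # bill if unit cost had stayed flat

print(f"Bill grew {bill_multiplier:.1f}x; cost per unit of usage grew {unit_cost_multiplier:.2f}x")
print(f"Volume alone would give ${volume_only_bill:,} per month")
```

Under that assumption, tripled volume accounts for a $30,000 bill. The rest would have to come from a higher cost per request, for example longer prompts or a pricier model tier, though the anecdote does not say which.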

Why It Matters Now — From Hype to Hard Reality

The “why now” trifecta is clear:

- Model Evolution: Transformers, scaling laws, and fine-tuning breakthroughs sharply improved output quality.

- GPU Supply: Cheaper, higher-capacity accelerators made large-scale deployments viable outside hyperscalers.

- Rapid Productization: Cloud and platform vendors turned demos into managed services in under six months.

Investors chasing a fresh narrative drove up valuations for infrastructure companies, and the warnings have already begun. If the current froth corrects, AI-adjacent startups and service firms could see funding dry up in 12–18 months.

Vendor Trade-Offs — Hosted SaaS vs. Managed Cloud vs. Self-Hosted

No one-size-fits-all solution exists. I encourage leaders to map requirements across five axes:

- Latency: Is sub-200ms response critical?

- Cost: Do you need $0.01/inference or can you absorb $0.10?

- Data Control: Will proprietary or regulated data traverse the model?

- Compliance: Are there privacy, provenance, or audit mandates?

- Complexity: Can your team manage Kubernetes clusters, or does it prefer turnkey APIs?

1. Hosted SaaS

- Pros: Instant onboarding, continuous updates, minimal ops.

- Cons: Limited customization, potential lock-in, data egress risks.

- Best for: Rapid prototyping, non-regulated workloads.

2. Managed Cloud Services

- Pros: Higher control, region selection, hybrid deployment options.

- Cons: Moderate ops, vendor tie-ins for hardware and network.

- Best for: Mid-size pilots needing lower latency and compliance hooks.

3. Self-Hosted Models

- Pros: Maximum data sovereignty, customizable pipelines, open-source choice.

- Cons: Heavy ops burden, patching, scalability challenges.

- Best for: Regulated industries (healthcare, finance), proprietary IP workflows.

Risks & Governance — Treat AI as a Systemic Vector

Generative AI isn’t just another feature toggle; it’s a potential enterprise-wide risk. Early pilots often skip governance, only to face painful retrofits. My checklist:

- Model Evaluation: Track hallucination and bias metrics; set acceptable thresholds (e.g., <5% hallucination rate).

- Incident Response: Define misuse scenarios, simulations, and rollback procedures.

- Legal Review: Document data provenance, licensing, and IP exposure.

- Audit Trails: Log input/output pairs, model versions, and user IDs.
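
The audit-trail item can be sketched as a single structured record per model call. This is a minimal illustration, not a compliance-grade design: the `AuditRecord` class, its field names, and the sample values are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    """One logged model interaction (hypothetical field names)."""
    timestamp: str
    user_id: str
    model_version: str
    prompt: str
    response: str

    def to_json_line(self) -> str:
        # The hash helps a reviewer spot accidental modification of a stored
        # line; it is not a security control on its own.
        payload = asdict(self)
        canonical = json.dumps(payload, sort_keys=True).encode("utf-8")
        digest = hashlib.sha256(canonical).hexdigest()
        return json.dumps({**payload, "sha256": digest}, sort_keys=True)


def make_record(user_id: str, model_version: str, prompt: str, response: str) -> AuditRecord:
    """Stamp the interaction with the current UTC time."""
    now = datetime.now(timezone.utc).isoformat()
    return AuditRecord(now, user_id, model_version, prompt, response)


# Example values are made up for illustration.
line = make_record(
    "u-123", "model-v1", "What is our refund policy?", "Refunds within 30 days."
).to_json_line()
print(line)
```

Appending one such line per request to write-once storage gives reviewers the input/output pair, model version, and user ID in one place.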

In one financial services pilot, a missing audit log meant regulators flagged the risk screening as undocumented. We had to rewrite the pipeline and absorb a six-figure compliance hit.

Decision Framework — A Simple Scorecard

| Criterion | Hosted SaaS | Managed Cloud | Self-Hosted |
|---|---|---|---|
| Speed to Market | ★ ★ ★ ★ ★ | ★ ★ ★ ★ ☆ | ★ ★ ☆ ☆ ☆ |
| Data Control | ★ ★ ☆ ☆ ☆ | ★ ★ ★ ☆ ☆ | ★ ★ ★ ★ ★ |
| Operational Load | ★ ★ ★ ★ ★ | ★ ★ ★ ☆ ☆ | ★ ★ ☆ ☆ ☆ |
| Cost Predictability | ★ ★ ☆ ☆ ☆ | ★ ★ ★ ☆ ☆ | ★ ★ ★ ★ ☆ |
| Compliance Readiness | ★ ★ ☆ ☆ ☆ | ★ ★ ★ ☆ ☆ | ★ ★ ★ ★ ★ |
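
One way to use the scorecard is to weight each criterion by how much it matters to a given workload. The sketch below takes the star counts from the table and assumes that more stars is better on every row. The weights are hypothetical, chosen to resemble a regulated workload.

```python
# Star counts copied from the scorecard, in the order
# (Hosted SaaS, Managed Cloud, Self-Hosted).
OPTIONS = ("Hosted SaaS", "Managed Cloud", "Self-Hosted")
STARS = {
    "Speed to Market": (5, 4, 2),
    "Data Control": (2, 3, 5),
    "Operational Load": (5, 3, 2),
    "Cost Predictability": (2, 3, 4),
    "Compliance Readiness": (2, 3, 5),
}

# Hypothetical weights for a regulated workload; adjust to your own priorities.
weights = {
    "Speed to Market": 1,
    "Data Control": 3,
    "Operational Load": 1,
    "Cost Predictability": 2,
    "Compliance Readiness": 3,
}


def weighted_scores(stars, weights):
    """Return {option: weighted average star rating}."""
    total_weight = sum(weights.values())
    return {
        option: sum(weights[c] * stars[c][i] for c in stars) / total_weight
        for i, option in enumerate(OPTIONS)
    }


scores = weighted_scores(STARS, weights)
best = max(scores, key=scores.get)
for option, score in scores.items():
    print(f"{option}: {score:.1f}")
print("Highest score:", best)
```

With these weights the self-hosted option scores highest. Shifting weight toward speed to market and operational load moves the result toward hosted SaaS.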

Actionable Executive Playbook

Below is a prioritized, time-boxed roadmap to turn hype into durable value:

0–3 Months: Inventory & Prioritize

- Catalogue existing AI pilots and model dependencies.

- Score use cases by ROI, compliance risk, and technical feasibility.

- Pilot 1–2 high-value, low-risk scenarios (e.g., internal knowledge search).

- KPIs: Inference cost per 1k requests, average latency, error rate.
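
The three pilot KPIs can be computed from per-request logs. This is a minimal sketch: the `RequestLog` shape and the sample values are assumptions, not a prescribed schema.

```python
from dataclasses import dataclass


@dataclass
class RequestLog:
    """One inference request (hypothetical log shape)."""
    latency_ms: float
    cost_usd: float
    ok: bool  # False if the request errored


def pilot_kpis(logs):
    """Compute cost per 1k requests, average latency, and error rate."""
    n = len(logs)
    if n == 0:
        raise ValueError("no requests logged")
    total_cost = sum(r.cost_usd for r in logs)
    return {
        "cost_per_1k_requests_usd": total_cost / n * 1000,
        "avg_latency_ms": sum(r.latency_ms for r in logs) / n,
        "error_rate": sum(1 for r in logs if not r.ok) / n,
    }


# Made-up sample data for illustration.
sample = [
    RequestLog(120, 0.01, True),
    RequestLog(180, 0.01, True),
    RequestLog(300, 0.02, False),
    RequestLog(200, 0.00, True),
]
kpis = pilot_kpis(sample)
print(kpis)
```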

3–6 Months: Hedge Vendor Risk

- Implement multi-provider inference routing (30/70 split as a test).

- Create capacity buffers: reserve spot instances or on-prem GPUs.

- Benchmark fail-over times (target <200ms impact).
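
A 30/70 routing test with fail-over can be prototyped in a few lines. The sketch below is an assumption-laden illustration: `route`, the provider names, and `fake_call` are hypothetical stand-ins for real client code.

```python
import random


def route(providers, weights, call, rng=random):
    """Pick a provider by weight; on failure, fall back to the others in order."""
    first = rng.choices(providers, weights=weights, k=1)[0]
    order = [first] + [p for p in providers if p != first]
    last_error = None
    for name in order:
        try:
            return name, call(name)
        except Exception as exc:  # a real client would catch narrower errors
            last_error = exc
    raise RuntimeError("all providers failed") from last_error


def fake_call(name):
    """Stand-in for a real inference call; provider_a is simulated as down."""
    if name == "provider_a":
        raise TimeoutError("simulated outage")
    return f"response from {name}"


served_by, reply = route(["provider_a", "provider_b"], [30, 70], fake_call)
print(served_by, "->", reply)
```

Because `provider_a` always fails in this simulation, every request ends up served by `provider_b`. Timing the fallback path against the direct path gives a first estimate of fail-over impact.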

6–9 Months: Model Costs & Transparency

- Build chargeback dashboards for cloud and GPU spend.

- Negotiate volume-discount commitments with providers.

- Set spending alerts (e.g., $5k/day threshold).
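
A daily spending alert reduces to summing charges per day and comparing against the threshold. This sketch uses the $5k/day example from the list; the charge records are made up, and a real setup would read them from a billing export.

```python
from collections import defaultdict
from datetime import date

DAILY_THRESHOLD_USD = 5_000  # example threshold from the list above


def days_over_budget(charges, threshold=DAILY_THRESHOLD_USD):
    """charges: iterable of (date, usd). Return {date: total} for days above threshold."""
    totals = defaultdict(float)
    for day, usd in charges:
        totals[day] += usd
    return {day: total for day, total in totals.items() if total > threshold}


# Illustrative charges: two on the first day, one on the second.
charges = [
    (date(2025, 1, 1), 3000),
    (date(2025, 1, 1), 2500),
    (date(2025, 1, 2), 4999),
]
alerts = days_over_budget(charges)
for day, total in alerts.items():
    print(f"ALERT {day}: ${total:,.0f} exceeds ${DAILY_THRESHOLD_USD:,}")
```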

Ongoing: Governance & Continuous Improvement

- Integrate bias and safety evals into CI/CD (weekly or per release).

- Maintain a living risk register and revisit it quarterly.

- Audit and update legal sign-off as models or data sources change.
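
Wiring evals into CI/CD can start as a simple pass/fail gate. The sketch below reuses the "<5% hallucination rate" example from the governance checklist. How each eval item gets flagged (human review or an automated grader) is left as an assumption.

```python
MAX_HALLUCINATION_RATE = 0.05  # the "<5%" example threshold from the checklist


def hallucination_rate(flags):
    """flags: list of booleans, True where an eval item was flagged as a hallucination."""
    if not flags:
        raise ValueError("empty eval set")
    return sum(flags) / len(flags)


def gate(flags, limit=MAX_HALLUCINATION_RATE):
    """Return (passed, rate); passed is True only when rate is strictly below limit."""
    rate = hallucination_rate(flags)
    return rate < limit, rate


# Made-up eval results: 3 flagged items out of 100.
passed, rate = gate([True] * 3 + [False] * 97)
print(f"hallucination rate {rate:.1%}, gate {'passed' if passed else 'failed'}")
```

In a pipeline, a failed gate would typically exit with a non-zero status so the release is blocked until someone reviews the regression.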

Conclusion

Three years after ChatGPT reshaped the AI landscape, the real test is turning overnight demos into scalable, governed products. Vendor lock-in, runaway costs, and compliance gaps are emerging as critical fault lines. By applying a clear decision framework and following a disciplined playbook, leaders can capture generative AI’s promise without falling prey to speculative risks. The next wave will reward those who balance rapid iteration with robust governance—prepare now.
