Executive summary – what changed and why it matters

NVIDIA released Alpamayo‑R1, an open vision‑language‑action (VLA) model aimed at Level‑4 autonomous driving research, plus the Cosmos Cookbook with step‑by‑step inference and post‑training workflows. Both are published on GitHub and Hugging Face, alongside simulation tooling (AlpaSim) and multimodal datasets intended to accelerate experimentation. Alpamayo‑R1 debuted at NeurIPS 2025.

- Substantive change: industry‑scale, open‑source reasoning models tailored to driving, not just perception networks, are now broadly available.

- Impact for buyers/operators: the release reduces time‑to‑prototype for reasoning and planning research, but it does not replace production‑grade safety engineering or certification.

Key takeaways

- Alpamayo‑R1: NVIDIA positions it as the first open VLA for driving. It integrates chain‑of‑thought style reasoning with trajectory planning, and the code and model weights are on GitHub and Hugging Face.

- Tooling stack: Cosmos Cookbook, AlpaSim, and Physical AI Open Datasets provide simulation, datasets, and inference recipes to run models locally or on DRIVE/DGX hardware.

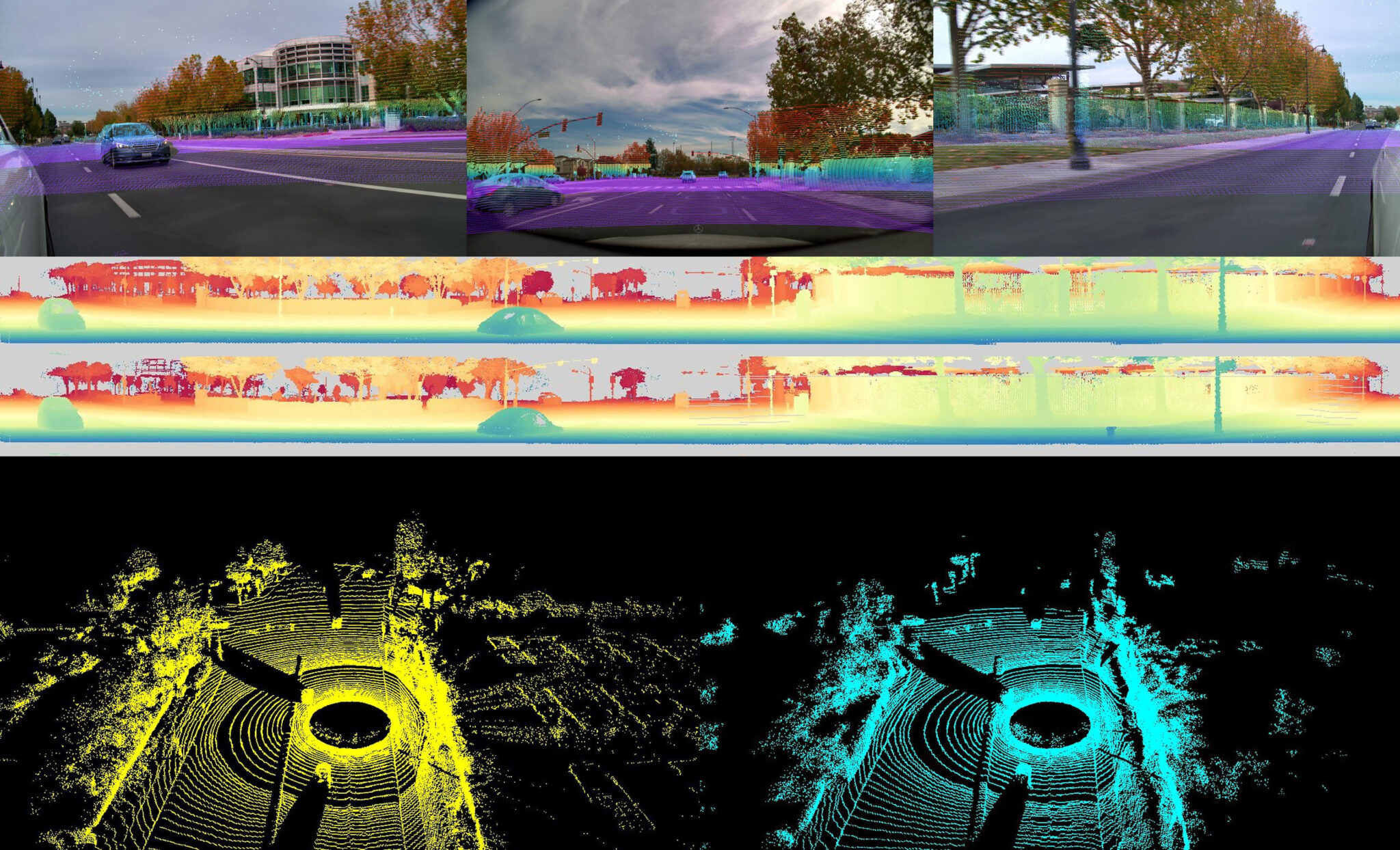

- Hardware and scale: Hyperion 9 (two DRIVE AGX Thor SoCs; 14 cameras/9 radars/1 lidar) and DGX with H100s are the intended platforms for deployment and training respectively.

- Reality check: research availability ≠ production readiness. Certification, redundancy, and long‑tail validation remain required for Level‑4 systems.

- Why now: convergence of high‑fidelity simulation, large‑model reasoning, and automotive compute makes research into reasoning‑based planning tractable at scale.

Breaking down the announcement — capabilities and limits

Alpamayo‑R1 combines multimodal perception inputs with a reasoning backbone (Cosmos Reason) to produce action‑level outputs for trajectory planning and decision making. NVIDIA claims multi‑step scenario decomposition and common‑sense reasoning can better handle occlusions, atypical road users, and complex intersections. The release pairs the model with AlpaSim for closed‑loop testing and curated Physical AI datasets (camera, lidar, radar) to train and validate behavior.

Limitations: the model is a research artifact. There are no published safety certifications or formal verification results. Real‑world deployment at Level‑4 requires deterministic latency guarantees, redundant execution paths, and compliance with automotive safety standards such as ISO 26262 and the relevant SAE standards, none of which are solved by open models alone. Expect significant compute: training reasoning VLA models at scale will typically need multi‑node H100 clusters. Inference targets DRIVE AGX Thor for on‑vehicle real‑time runs, but latency and throughput must be validated for each deployment.
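
One way to approach the per‑deployment latency validation mentioned above is to time the inference step repeatedly and compare a tail percentile against a deadline. The sketch below is a minimal, hardware‑agnostic illustration. The `step` callable, the choice of the 99th percentile, and any budget value are assumptions for illustration only; none of them come from NVIDIA's release.

```python
import math
import time


def percentile(samples, q):
    """Nearest-rank percentile (q in 0..100) of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def measure_latency_ms(step, n_runs=200, warmup=20):
    """Time a zero-argument callable and return per-call latencies in ms.

    `step` is a hypothetical stand-in for one inference call on the target.
    """
    for _ in range(warmup):  # discard warm-up runs (caches, lazy init)
        step()
    samples = []
    for _ in range(n_runs):
        start = time.perf_counter()
        step()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def check_budget(samples, budget_ms, q=99.0):
    """Compare the q-th percentile latency against an assumed deadline."""
    observed = percentile(samples, q)
    return {
        "percentile": q,
        "observed_ms": observed,
        "budget_ms": budget_ms,
        "ok": observed <= budget_ms,
    }
```

Wall‑clock sampling like this only gives an empirical picture of tail latency. It does not establish the deterministic guarantees a Level‑4 safety case would need, which require separate worst‑case analysis on the actual vehicle hardware.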

Why this matters now

Two trends converge: (1) simulation and synthetic data fidelity (Omniverse/Cosmos) reduce dependence on costly on‑road miles, and (2) larger reasoning models enable decision‑level outputs, not just perception. For research labs and startups, open access to an end‑to‑end stack can shorten iteration cycles, potentially from months to weeks. For incumbent OEMs and Tier‑1s, it signals a shift where open models may augment proprietary stacks or accelerate validation of new planning strategies.

Competitive angle and market context

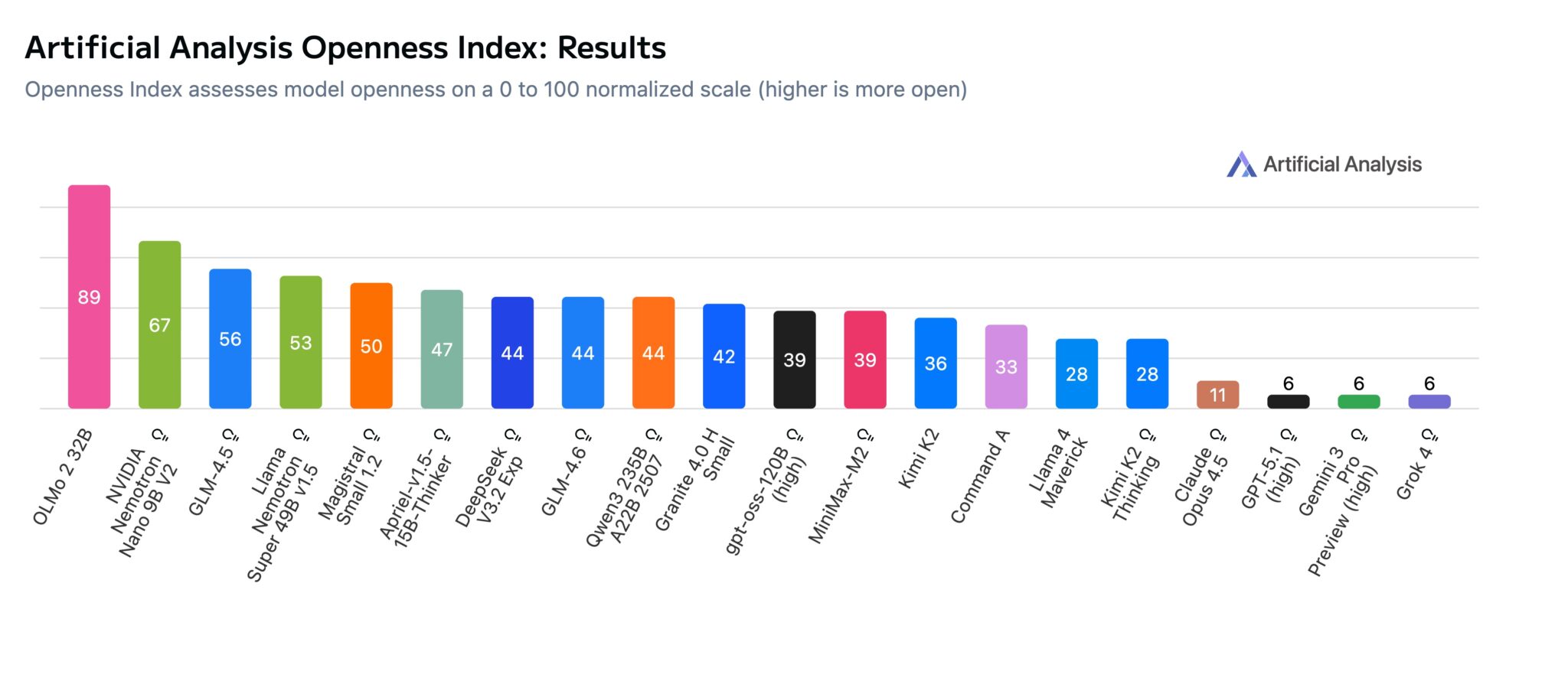

NVIDIA’s move contrasts with closed stacks from Waymo, Tesla and some Tier‑1 suppliers that keep planning models proprietary. Openness lowers barriers for academia and smaller AV teams to prototype Level‑4 behaviors. But closed systems still lead in integrated safety cases and fleet‑scale validation data. Expect a hybrid outcome: open research models for algorithmic innovation, proprietary layers for safety, monitoring and regulatory compliance.

Risks, governance and compliance

Key risks: research readiness being mistaken for production suitability; simulation‑to‑real gaps causing overfitting to synthetic scenarios; and an increased attack surface if models are used without robust runtime monitors. Regulatory scrutiny will focus on explainability, traceability and documented validation. Chain‑of‑thought style reasoning can complicate auditability unless logs and causal traces are instrumented.
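
As a rough illustration of what instrumenting reasoning logs could look like, the sketch below keeps an append‑only, hash‑chained record of each reasoning step and the action it led to, so later tampering or gaps are detectable. The class name, field names, and record layout are hypothetical; they are not part of Alpamayo‑R1 or any NVIDIA tooling.

```python
import hashlib
import json


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the record body."""
    return hashlib.sha256(
        json.dumps(body, sort_keys=True).encode("utf-8")
    ).hexdigest()


class ReasoningTraceLog:
    """Append-only log in which each record commits to the previous one."""

    GENESIS = "0" * 64  # placeholder hash for the first record

    def __init__(self):
        self.records = []
        self._prev_hash = self.GENESIS

    def append(self, frame_id, reasoning_text, planned_action):
        body = {
            "frame_id": frame_id,
            "reasoning": reasoning_text,
            "action": planned_action,
            "prev_hash": self._prev_hash,
        }
        record = dict(body, hash=_digest(body))
        self.records.append(record)
        self._prev_hash = record["hash"]
        return record

    def verify(self):
        """Return True only if no record was altered, removed, or reordered."""
        prev = self.GENESIS
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != record["hash"]:
                return False
            prev = record["hash"]
        return True
```

A chain like this addresses integrity of the trace, not its faithfulness: whether the logged reasoning text actually reflects why the model chose an action is a separate validation question.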

Practical recommendations — who should do what, now

- AV research teams: evaluate Alpamayo‑R1 in simulation with AlpaSim for corner‑case discovery and to benchmark reasoning vs. classical planners.

- OEMs and Tier‑1 product leaders: pilot in a controlled simulation pipeline; don’t fast‑track to vehicle deployment without a formal safety case and plans for redundancy and traceability.

- Safety/compliance teams: define audit trails and trace logging for reasoning steps; update validation matrices to include human‑like failure modes the model may produce.

- Procurement/ops: plan for increased compute (DGX/H100) and sensor calibration needs; budget for rigorous real‑world validation after simulation success.
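
For the benchmarking recommendation above, one simple starting point is an open‑loop comparison of each planner's trajectory against a reference path using average and final displacement error (ADE/FDE), two widely used trajectory metrics. This is a minimal sketch under assumptions: trajectories are equal‑length lists of `(x, y)` waypoints in metres, and the planner names are placeholders. It does not use AlpaSim or any Alpamayo‑R1 API, and closed‑loop evaluation would need additional metrics.

```python
import math


def displacement_errors(predicted, reference):
    """Return (ADE, FDE) for two equal-length lists of (x, y) waypoints."""
    if not predicted or len(predicted) != len(reference):
        raise ValueError("trajectories must be non-empty and of equal length")
    dists = [
        math.hypot(px - rx, py - ry)
        for (px, py), (rx, ry) in zip(predicted, reference)
    ]
    return sum(dists) / len(dists), dists[-1]


def compare_planners(planner_outputs, reference):
    """Map each planner name to its ADE/FDE against the same reference."""
    results = {}
    for name, trajectory in planner_outputs.items():
        ade, fde = displacement_errors(trajectory, reference)
        results[name] = {"ade": ade, "fde": fde}
    return results


if __name__ == "__main__":
    reference = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    outputs = {
        # Hypothetical planner names and made-up waypoints for illustration.
        "reasoning_planner": [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)],
        "classical_planner": [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)],
    }
    print(compare_planners(outputs, reference))
```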

Bottom line

NVIDIA’s open release accelerates research into reasoning‑driven autonomy and lowers the barrier to exploring Level‑4 behaviors. That’s strategically important for labs and startups. But it’s not a production shortcut: enterprises must treat Alpamayo‑R1 and the Cosmos Cookbook as research‑grade tools that demand rigorous safety engineering, regulatory evidence, and staged validation before any in‑vehicle use.
