AI Won’t Move Your P&L Until You Fix Operations: A 4‑Phase Roadmap

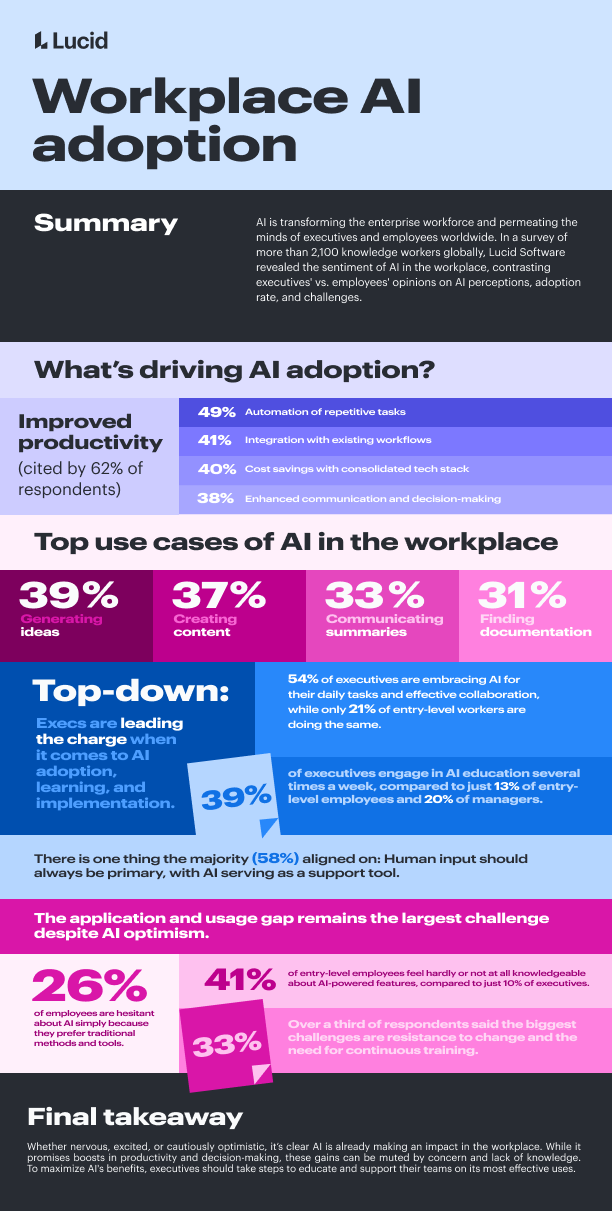

Executives keep telling me the same story: AI is everywhere in the board deck and nowhere in the P&L. It’s not for lack of ambition. A record share of S&P 500 leaders have talked up AI on earnings calls. Yet just a fraction of generative AI pilots are producing measurable profit-and-loss impact, while most stall at the “last mile” – the unglamorous work of integrating AI into daily operations. Lucid Software’s recent survey underscores why: undocumented processes, weak collaboration, and poor documentation derail AI at the very point it should create value.

Executive Hook: The AI buzz is high, the P&L impact is not

AI can summarize, draft, predict, and recommend. But it can’t fix broken processes. In fact, it amplifies them. As Bill Gates put it, “Automation applied to an efficient operation will magnify the efficiency. Automation applied to an inefficient operation will magnify the inefficiency.” If you’re not documenting, standardizing, and continuously improving, you’re subsidizing pilots that won’t scale.

Industry Context: Competitive advantage now lives in the last mile

The winners aren’t the ones with the most advanced models – they’re the ones who operationalize faster. That means turning pilots into production-grade workflows that cut cycle time, reduce downtime and defects, lower costs, and raise customer satisfaction. In our transformation work, the strongest performers pair AI with Lean, Six Sigma, and BPM disciplines, and they modernize collaboration so decisions, documentation, and dependencies live in one place. The result is not “AI for AI’s sake,” but a reliable engine for productivity and quality improvement.

Core Insight: Operational excellence is a prerequisite for AI success

Three realities I’ve seen across dozens of programs:

- Clean data and standardized processes are the fuel: Without them, models drift, outputs vary, and audit trails break.

- Collaboration is the constraint: If teams can’t co-create, decide, and document in shared tools, AI outputs never become new ways of working.

- Governance sustains value: Ethics, privacy, compliance, and performance monitoring keep solutions reliable after the pilot glow fades.

Consider three anonymized examples. A global manufacturer mapped and standardized maintenance procedures before adding AI recommendations; unplanned downtime dropped and defect rates fell. A financial services firm used a 10-week “documentation sprint” to convert tribal knowledge into SOPs, then embedded copilots into case handling – cycle times improved and rework declined. A retailer integrated AI into store operations via simple prompts in existing tools, not a new app; adoption soared because the work didn’t change, only the assistance did. In each case, operational rigor came first; AI scaled second.

Common Misconceptions: What most companies get wrong

- “Better models will fix messy operations.” They won’t. They’ll magnify variation and compliance risk.

- “Data science owns outcomes.” Operations owns outcomes; data science is a partner.

- “We’ll document after the pilot.” By then, you’ve baked inconsistency into the solution.

- “Change management is optional.” It’s the budget line that protects your ROI.

- “Big-bang deployments show ambition.” Phased scaling with controls shows discipline.

- “GenAI replaces expertise.” It augments expertise; your SOPs and guardrails make it safe.

Strategic Framework: A four-phase path from maturity to measurable ROI

Think of AI as an extension of operational excellence. Combine Lean/Six Sigma/BPM with pragmatic AI integration and governance. Use this phased approach:

Phase 1 (3–6 months): Assess and harden operational maturity

- Process baselining: Map the top 10 value streams; identify variation, bottlenecks, and data touchpoints.

- Documentation: Create or update SOPs and decision trees; shift tribal knowledge into shared, searchable docs.

- Data readiness: Inventory systems, data quality, access, and privacy constraints.

- Collaboration stack: Consolidate to modern tools that support co-authoring, visual workflows, and decisions in context.

- Governance scaffolding: Define AI policies, risk tiers, model access, and audit logging.
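
To make the governance scaffolding item concrete, here is a minimal sketch of risk tiers mapped to controls, with an audit log entry per AI request. The tier names, the three screening questions, and the control flags are all hypothetical placeholders, not a standard; a real policy would come from your legal, risk, and compliance teams.

```python
import json
from datetime import datetime, timezone

# Hypothetical tiers and controls, for illustration only.
CONTROLS_BY_TIER = {
    "low": {"human_review": False, "log_requests": True},
    "medium": {"human_review": True, "log_requests": True},
    "high": {"human_review": True, "log_requests": True, "needs_approval": True},
}


def classify_use_case(uses_personal_data: bool,
                      customer_facing: bool,
                      automated_decision: bool) -> str:
    """Assign a risk tier by counting how many risk factors apply (assumed rule)."""
    factors = sum([uses_personal_data, customer_facing, automated_decision])
    if factors >= 2:
        return "high"
    if factors == 1:
        return "medium"
    return "low"


def audit_record(use_case: str, user: str, tier: str) -> str:
    """Build one JSON audit log line for an AI request."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "use_case": use_case,
        "user": user,
        "tier": tier,
        "controls": CONTROLS_BY_TIER[tier],
    })


tier = classify_use_case(uses_personal_data=True,
                         customer_facing=True,
                         automated_decision=False)
print(audit_record("claims-summary-copilot", "analyst-17", tier))
```

The point of the sketch is the shape, not the rule: every use case gets a tier before it is piloted, and every tier implies a fixed set of controls that can be audited later.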

Phase 2 (6–12 months): Pilot high-impact, last‑mile use cases

- Prioritization by P&L: Select 3–5 use cases with clear ROI levers: downtime reduction, cost savings, cycle-time cuts, and defect-rate and CSAT improvements.

- Design for adoption: Embed into existing tools and workflows; minimize new clicks and context switching.

- Controls and measurement: Use A/B or staggered rollouts; set baselines and targets per KPI.

- Tooling examples: Pair enterprise platforms (e.g., Microsoft’s ecosystem) with process excellence methods (via Process Excellence Network playbooks) and AI lifecycle tools (e.g., Datategy for governance) to shorten time to value.
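
The controls-and-measurement item can be sketched as a simple comparison between a control group (the baseline) and a pilot group in a staggered rollout. This is an illustrative sketch only: the KPI names, sample values, and target percentages below are made up, and a real evaluation would also check sample size and statistical significance.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class KpiTarget:
    name: str
    lower_is_better: bool      # True for cycle time, downtime, defect rate
    target_improvement: float  # 0.10 means "10% better than control"


def relative_improvement(control, pilot, lower_is_better):
    """Fractional improvement of the pilot group over the control group."""
    base, new = mean(control), mean(pilot)
    if lower_is_better:
        return (base - new) / base
    return (new - base) / base


def evaluate(target, control, pilot):
    """Compare one KPI against its target and report whether it was met."""
    improvement = relative_improvement(control, pilot, target.lower_is_better)
    return {
        "kpi": target.name,
        "improvement": round(improvement, 3),
        "met": improvement >= target.target_improvement,
    }


# Hypothetical weekly measurements for two KPIs.
cycle_time = KpiTarget("cycle_time_days", lower_is_better=True, target_improvement=0.10)
csat = KpiTarget("csat_score", lower_is_better=False, target_improvement=0.05)

print(evaluate(cycle_time, control=[10, 12, 11, 11], pilot=[9, 9, 10, 8]))
print(evaluate(csat, control=[4.0, 4.0, 4.2, 3.8], pilot=[4.1, 4.1, 4.0, 4.2]))
```

In this made-up data the cycle-time pilot clears its target while CSAT does not, which is exactly the kind of mixed result a per-KPI baseline is meant to surface.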

Phase 3 (parallel): Change management, skill-building, and enablement

- Leadership sponsorship: Visible, consistent messaging from COO/CIO with clear “why now.”

- Upskilling: Role-based prompts, SOPs, and quick-reference guides; partner with providers (e.g., CGS) for scalable training.

- Feedback loops: Capture frontline insights; refine prompts, guardrails, and SOPs weekly.

Phase 4 (12–24+ months): Scale with governance and continuous improvement

- Model ops and monitoring: Track drift, bias, performance, and cost per outcome; automate alerts and retraining.

- Risk management: Apply tiered controls by use case risk; audit privacy, regulatory compliance, and human-in-the-loop checkpoints.

- Operational cadence: Institutionalize Kaizen for AI — monthly retros, quarterly portfolio reviews.

- Centers of excellence: Leverage partners (e.g., Enlighten OPEX) to codify best practices and accelerate replication.
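
For the drift-tracking item, one widely used check is the Population Stability Index (PSI), which compares how a model input or score is distributed today against a baseline period. The sketch below is a plain-Python illustration, not a production monitor: the bin count, the epsilon floor, and the 0.2 alert threshold are assumptions you would tune for your own data.

```python
import math


def psi(baseline, current, bins=5, eps=1e-6):
    """Population Stability Index between a baseline sample and a current sample."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge bins
            counts[idx] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = shares(baseline), shares(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff, not a universal standard

baseline_scores = list(range(100))
shifted_scores = [v + 40 for v in baseline_scores]

for label, sample in [("stable", baseline_scores), ("shifted", shifted_scores)]:
    value = psi(baseline_scores, sample)
    status = "ALERT" if value > ALERT_THRESHOLD else "ok"
    print(f"{label}: PSI={value:.3f} {status}")
```

A check like this can run on a schedule and feed the automated alerts; whether an alert triggers retraining or a human review is a governance decision, not a coding one.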

Action Steps: What to do Monday morning

- Appoint a joint COO–CIO sponsor and name a single accountable owner for AI outcomes.

- Pick three critical processes (not tools) and map them end‑to‑end; capture variants and handoffs.

- Run a 6–8 week documentation sprint to convert tribal knowledge into SOPs, decision trees, and prompts.

- Stand up a unified collaboration hub for planning, docs, visual workflows, and decisions with audit trails.

- Define baseline KPIs: downtime, cycle time, cost per transaction, CSAT, defect rate.

- Select 2–3 last‑mile use cases with measurable P&L impact; design minimal-change integrations.

- Create a lightweight governance charter: ethics, privacy, access, review cadence, and escalation paths.

- Launch controlled pilots with clear success/fail thresholds and a sunset plan if thresholds aren’t met.

- Invest in role-based enablement: prompt libraries, checklists, and short, scenario-based training.

- Publish results and iterate; scale only after hitting target outcomes and locking SOP updates.
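
The last few steps amount to a decision gate: scale when the target is hit, sunset when the pilot falls below the fail threshold, and iterate in between. A minimal sketch of that gate follows; the function name, the three outcome labels, and the threshold values are hypothetical and should be agreed before the pilot starts, not after.

```python
def pilot_decision(improvement: float,
                   success_threshold: float,
                   fail_threshold: float) -> str:
    """Return 'scale', 'iterate', or 'sunset' for a measured pilot improvement."""
    if fail_threshold > success_threshold:
        raise ValueError("fail_threshold must not exceed success_threshold")
    if improvement >= success_threshold:
        return "scale"
    if improvement < fail_threshold:
        return "sunset"
    return "iterate"


# Hypothetical thresholds: scale at 10% or better, sunset below 2%.
for measured in (0.14, 0.06, 0.01):
    print(measured, pilot_decision(measured, success_threshold=0.10, fail_threshold=0.02))
```

Writing the thresholds down as numbers before launch is what keeps a stalled pilot from quietly becoming a permanent line item.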

Closing Thought: Make AI the next chapter of operational excellence

Lucid’s survey is a timely reminder: the barriers aren’t exotic models — they’re everyday management muscles. Document the work. Standardize decisions. Modernize collaboration. Govern what you scale. Do this, and AI stops being a slide in the board deck and starts compounding in your P&L.
