What changed – and why it matters

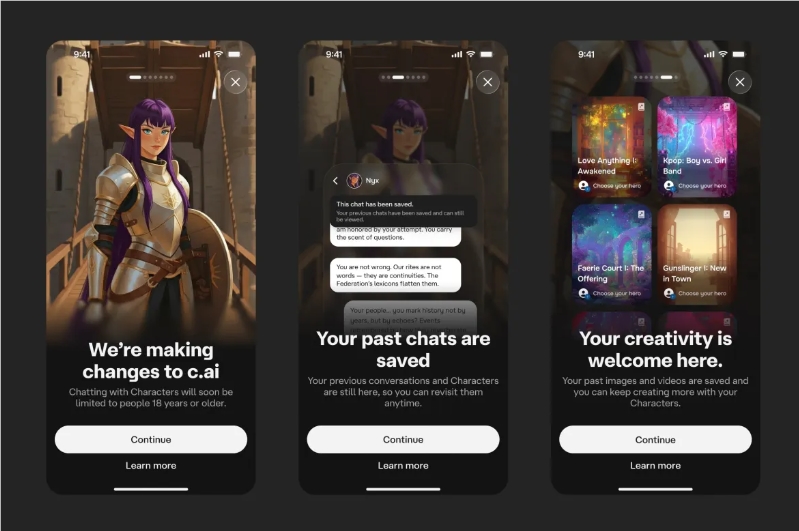

Character AI, the personality-driven chatbot platform with more than 20 million active users, has stopped allowing teenagers to use open-ended conversational chat and is pushing a new “Stories” format: a visual, branching interactive‑fiction experience. This is a strategic safety pivot designed to reduce regulatory and psychological risks tied to unsupervised, open-ended AI conversations while preserving engagement for younger users in a moderated, script‑driven form.

- Immediate change: Teen accounts (13-17) are blocked from open‑ended Character chat and routed toward Stories.

- Why now: Rising regulatory scrutiny (including an FTC probe) and documented harms (parasocial attachment, misinformation risk, exposure to inappropriate content) made open‑ended teen chat a liability.

- Business impact: Lower inference costs and clearer compliance posture, but probable short‑term drops in teen engagement and retention.

Key takeaways for executives and product leaders

- Stories trades real‑time LLM output for pre‑written branching narratives — that reduces real‑time inference and makes pre‑moderation tractable.

- Regulatory risk is reduced but not eliminated: state laws, COPPA, and FTC attention still require technical and policy controls (age verification, data minimization, parental consent workflows).

- Operational costs shift from content filtering at inference to content moderation and UX design at creation; expect new staffing and tooling needs.

- Competitively, Character AI sets a precedent other companion‑AI providers may follow to avoid enforcement risk.

Breaking down “Stories”: capabilities and limits

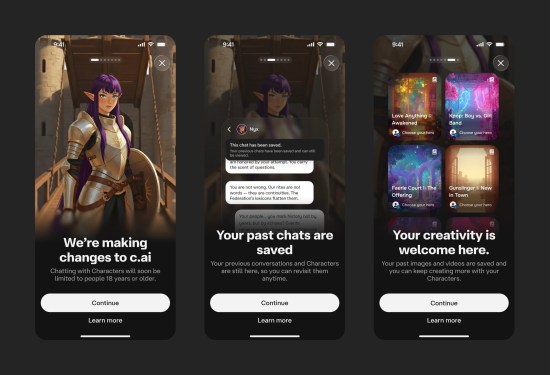

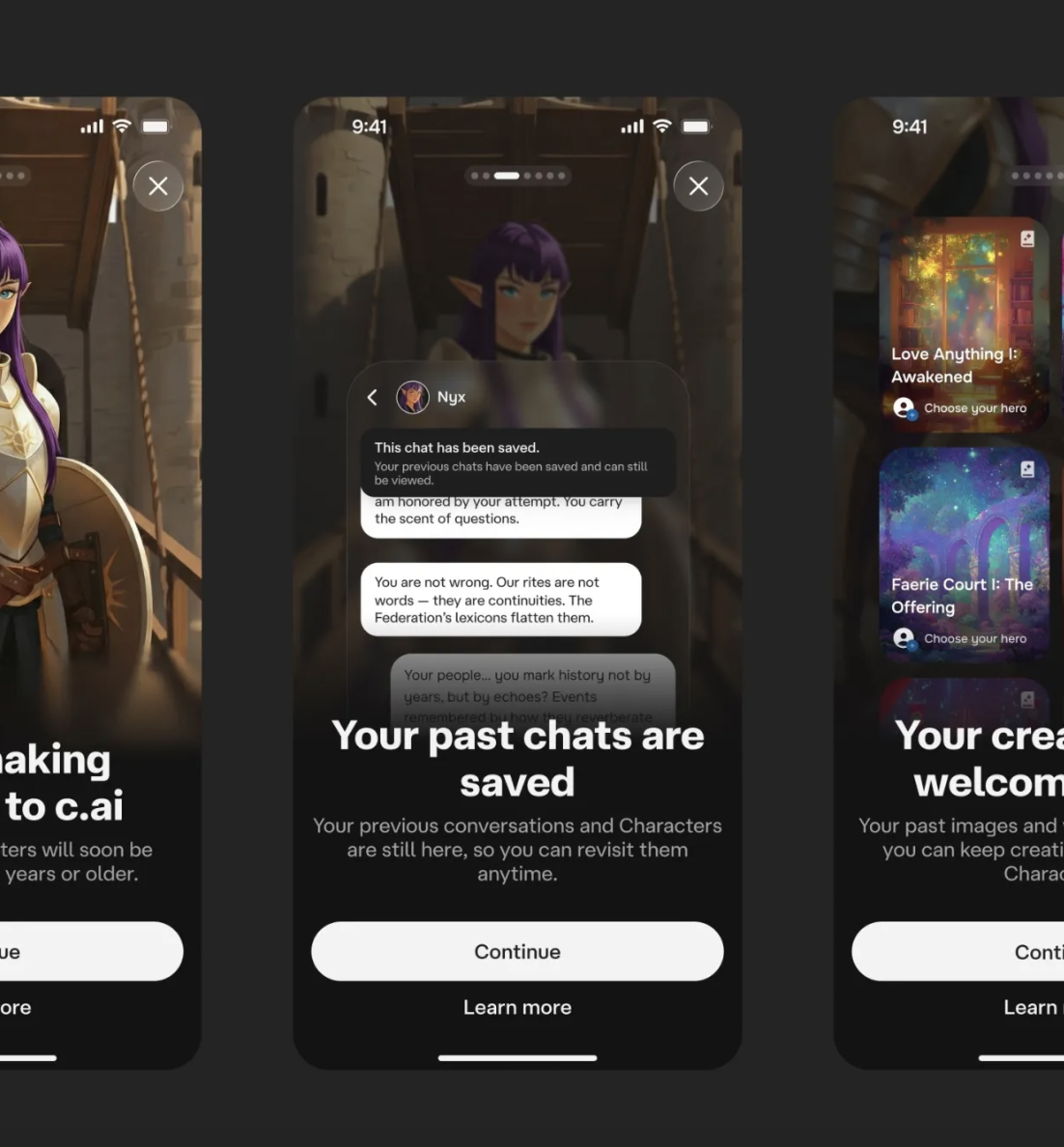

Stories replaces unscripted chat with a multi‑path narrative engine. Users navigate predefined branches, choose options, and view visual scenes rather than typing freeform prompts that generate unpredictable responses. The practical effects:

- Safety control: Content is moderated at creation or upload rather than in real time, reducing exposure to emergent toxic or manipulative outputs.

- Cost and scale: Fewer LLM inference calls per user session; lower operational compute and logging costs. This is likely a material reduction in per‑session cost versus continuous LLM chat, though exact savings depend on story complexity and media assets.

- Design trade-offs: Lower expressive freedom for teens and creators; stories can’t replicate the novelty of freeform dialog and may reduce time‑on‑site for users who value improvisational conversations.

- Discoverability: Stories are shareable via the platform’s social feed, enabling virality while keeping interactions within moderated paths.
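To make the contrast with freeform chat concrete, here is a minimal sketch of a branching story as a graph of pre-written nodes. It is not Character AI's implementation: `StoryNode`, `publishable`, and `advance` are hypothetical names, and the `approved` flag stands in for whatever review step the platform actually uses. The point it illustrates is that a story can be checked in full before publication, and that users can only move along listed choices.

```python
from dataclasses import dataclass, field


@dataclass
class StoryNode:
    """One pre-written scene; `choices` maps a button label to the next node id."""
    text: str
    choices: dict[str, str] = field(default_factory=dict)
    approved: bool = False  # assumed flag, set by a reviewer or classifier


def publishable(nodes: dict[str, StoryNode], start: str) -> bool:
    """Return True only if every node reachable from `start` exists and is approved."""
    seen: set[str] = set()
    stack = [start]
    while stack:
        node_id = stack.pop()
        if node_id in seen:
            continue
        node = nodes.get(node_id)
        if node is None or not node.approved:
            return False
        seen.add(node_id)
        stack.extend(node.choices.values())
    return True


def advance(nodes: dict[str, StoryNode], current: str, choice: str) -> str:
    """Follow a predefined branch; input that is not a listed choice is rejected."""
    options = nodes[current].choices
    if choice not in options:
        raise ValueError(f"not a listed choice: {choice!r}")
    return options[choice]


# Hypothetical three-scene story used only for illustration.
story = {
    "start": StoryNode("You find a door.", {"Open it": "hall", "Walk away": "end"}, approved=True),
    "hall": StoryNode("A long hall.", {"Go back": "start"}, approved=True),
    "end": StoryNode("The end.", approved=True),
}
```

Because the set of reachable scenes is finite, the whole graph can be reviewed once at creation time, which is what makes pre-moderation tractable in a way that open-ended generation is not.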

Regulatory, safety and governance implications

Character AI’s move is defensible from a compliance perspective: it is consistent with the data‑minimization principle behind COPPA (which covers under‑13 users) and strengthens the company’s position in the face of the FTC’s probe into chatbot interactions with minors. But it’s not a silver bullet.

- Age verification remains a weak link — teens can misrepresent age or use adult accounts; stronger verification increases friction and liability.

- Pre‑moderation scales poorly if user‑generated stories go viral; investments in automated content classification and human review will be necessary.

- Legal exposure can persist if stories are designed to manipulate or if the platform fails to act on known harms.

Competitive context

Other vendors (OpenAI, Google, Meta) continue to face the same trade‑off: balancing conversational capability against safety and compliance. Character AI’s pivot is an early example of product‑level risk mitigation that preserves engagement via a different UX. If it succeeds, expect similar segmented experiences: structured formats for minors and full LLM access for verified adults.
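An age-segmented product ultimately reduces to a routing decision. The sketch below is one hypothetical policy, not any vendor's actual logic: the `Experience` enum and `route_experience` function are invented for illustration, the minimum age of 13 is inferred from the 13-17 teen bracket, and sending unverified adults to the structured format is an assumption about how a cautious operator might handle weak age signals.

```python
from enum import Enum


class Experience(Enum):
    BLOCKED = "blocked"
    STORIES = "stories"
    OPEN_CHAT = "open_chat"


def route_experience(age: int, age_verified: bool) -> Experience:
    """Pick the experience for an account under the assumed policy."""
    if age < 13:
        # Assumption: accounts below the minimum age are not served at all.
        return Experience.BLOCKED
    if age >= 18 and age_verified:
        return Experience.OPEN_CHAT
    # Teens (13-17), and adults whose age is unverified, get the structured format.
    return Experience.STORIES
```

Defaulting to the structured format whenever verification is missing trades some adult engagement for a smaller circumvention surface; whether that trade is worth it is exactly the kind of question the retention data should answer.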

Risks and unanswered questions

- Engagement risk: Teen DAU and retention may drop; long‑term brand affinity with younger cohorts could weaken.

- Moderation burden: Pre‑moderation shifts resource needs but doesn’t eliminate content moderation overhead.

- Circumvention: Teens using adult accounts or other platforms erodes the safety goal.

- Data governance: How Stories data are stored, used to train models, or audited is not yet clear.

Concrete recommendations — who should act and what to do now

- Product leaders: Run retention A/B tests comparing Stories against limited chat flows; instrument early‑warning metrics (DAU, churn, session length by cohort).

- Safety & legal teams: Map data flows for Stories, update COPPA/consent workflows, and define escalation paths for harmful content.

- Engineering: Invest in automated pre‑moderation classifiers, lineage tracing for story content, and stronger age‑verification options that balance UX and compliance.

- Customer/education outreach: Pilot Stories with schools or parents to gather evidence for efficacy and to build institutional demand.
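The early-warning metrics in the first recommendation can be computed from very little data. The following is a minimal sketch, assuming session logs are available as `(user_id, cohort, week, session_minutes)` rows; that row shape, the cohort labels, and the `cohort_report` function are all hypothetical. It reports week-over-week retention, churn, and mean session length per cohort.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical log row: (user_id, cohort, week, session_minutes)
Session = tuple[str, str, int, float]


def cohort_report(
    sessions: list[Session], base_week: int, next_week: int
) -> dict[str, dict[str, float]]:
    """Per-cohort retention and churn from base_week to next_week, plus mean session length."""
    base_users: dict[str, set[str]] = defaultdict(set)
    next_users: dict[str, set[str]] = defaultdict(set)
    minutes: dict[str, list[float]] = defaultdict(list)

    for user_id, cohort, week, mins in sessions:
        minutes[cohort].append(mins)  # session length is averaged over all weeks
        if week == base_week:
            base_users[cohort].add(user_id)
        elif week == next_week:
            next_users[cohort].add(user_id)

    report: dict[str, dict[str, float]] = {}
    for cohort, users in base_users.items():
        retention = len(users & next_users[cohort]) / len(users)
        report[cohort] = {
            "retention": retention,
            "churn": 1 - retention,
            "mean_session_minutes": mean(minutes[cohort]),
        }
    return report


# Illustrative rows only; these are not real platform data.
sample = [
    ("a", "stories", 1, 10.0),
    ("a", "stories", 2, 20.0),
    ("b", "stories", 1, 30.0),
    ("c", "limited_chat", 1, 8.0),
    ("c", "limited_chat", 2, 12.0),
]
report = cohort_report(sample, base_week=1, next_week=2)
```

A real A/B test would also need randomized assignment and a significance check before acting on differences between cohorts; this sketch only covers the instrumentation side.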

Bottom line

Character AI’s Stories is a pragmatic, defensible alternative to open‑ended teen chat that reduces immediate regulatory and safety exposure while changing the engagement economy of personality AI. Operators should treat this as an early template for “age‑segmented” AI products: adopt fast where safety is a real liability, but measure retention and abuse‑circumvention risks closely before committing to wholesale redesigns.
