What Changed and Why It Matters

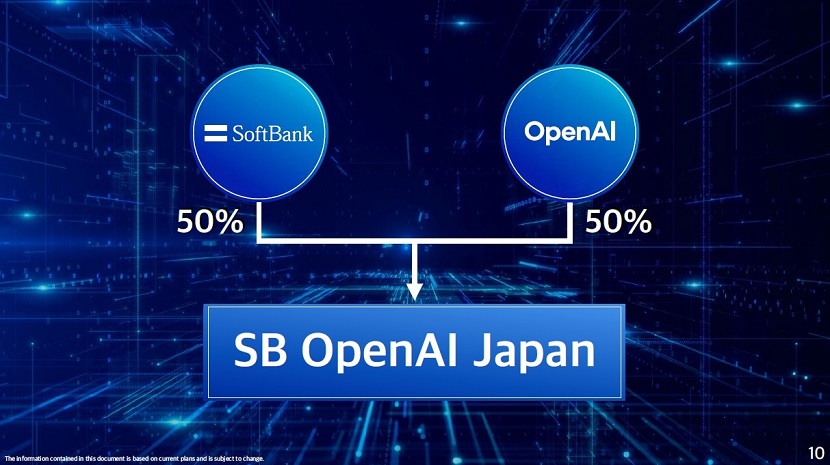

SoftBank and OpenAI formed a 50/50 joint venture, “Crystal Intelligence,” to sell enterprise AI tools in Japan. For operators, that means faster procurement, localized support, and a cleaner compliance story for deploying ChatGPT‑class capabilities at scale. But the ownership structure also revives an uncomfortable question: how much reported “AI revenue” is genuine customer demand versus capital cycling among investors, distributors, and suppliers?

Key Takeaways

- Near-term: a stronger Japan go-to-market for OpenAI (ChatGPT Enterprise, GPT‑4.1/4o, Assistants APIs), with yen invoicing and local support via SoftBank.

- Economics: pricing and latency will hinge on Azure capacity in Japan and Nvidia GPU availability; yen weakness can make imported compute materially more expensive.

- Governance: a 50/50 JV raises related‑party revenue and transfer pricing questions. CFOs should insist on clarity around principal vs. agent revenue recognition and any customer credits.

- Risk: if SoftBank funds adoption (credits, bundled discounts) while also owning the channel, reported growth could be partially circular, complicating valuation and risk assessment.
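The currency point in the economics takeaway is plain arithmetic. The sketch below assumes, hypothetically, that the yen price you pay tracks a USD-denominated compute or token price one-for-one. The USD 10 per million tokens price and the 130 and 155 JPY/USD rates are illustrative placeholders, not quoted figures, and actual JV price lists may hedge or lag currency moves.

```python
def yen_cost(usd_amount: float, jpy_per_usd: float) -> float:
    """Convert a USD-denominated price into yen at the given exchange rate."""
    return usd_amount * jpy_per_usd


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100.0


# Hypothetical inputs: a USD 10 price per million tokens, with the yen weakening
# from 130 to 155 per dollar while the dollar price stays flat.
usd_price_per_million_tokens = 10.0
before = yen_cost(usd_price_per_million_tokens, 130.0)
after = yen_cost(usd_price_per_million_tokens, 155.0)
print(f"JPY {before:,.0f} -> JPY {after:,.0f} ({pct_change(before, after):.1f}% higher)")
```

With these inputs the yen cost rises about 19% while the dollar price is unchanged.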

Breaking Down the Announcement

Expect Crystal Intelligence to package OpenAI’s enterprise offerings (ChatGPT Enterprise, model APIs, and domain guardrails) into Japan‑localized solutions: contact center automation, developer productivity (code assistants), and knowledge search with retrieval‑augmented generation (RAG). The stack will likely run primarily on Microsoft Azure’s Japan regions, with data residency options and enterprise controls (SAML SSO, audit logs, admin-configurable data retention, and no training on business data by default) to support compliance with Japan’s Act on the Protection of Personal Information (APPI).

Operationally, SoftBank brings distribution, systems integration, and the ability to bundle AI with connectivity and cloud credits. That can shorten sales cycles and de‑risk adoption for large Japanese incumbents that prefer local contracts and support. Early deployments typically target measurable outcomes: 15-25% handle-time reduction in service operations, 20-40% faster code cycles, and lower document processing costs-results we’ve seen in comparable enterprise pilots. Your mileage will vary by data quality, workflow integration, and change management.
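To turn a percentage handle-time reduction into capacity, multiply contact volume by average handle time and the reduction. A minimal sketch, assuming a hypothetical center with 100,000 contacts per month at 8 minutes each; `monthly_agent_hours_saved` is an illustrative helper, and freed hours only become savings if they are redeployed or staffing changes.

```python
def monthly_agent_hours_saved(contacts_per_month: int, avg_handle_minutes: float,
                              reduction: float) -> float:
    """Agent hours freed per month if average handle time falls by `reduction` (0.15 = 15%)."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be a fraction between 0 and 1")
    return contacts_per_month * avg_handle_minutes * reduction / 60.0


# Hypothetical contact center: 100,000 contacts per month at 8 minutes each,
# evaluated at both ends of the 15-25% range.
for r in (0.15, 0.25):
    hours = monthly_agent_hours_saved(100_000, 8.0, r)
    print(f"{r:.0%} reduction -> {hours:,.0f} agent-hours/month")
```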

Industry Context: The Circular Money Problem

AI’s supply chain is tightly coupled: Nvidia supplies GPUs; hyperscalers (e.g., Microsoft) provide compute; model companies (e.g., OpenAI) consume that compute and sell AI services. When the same firms also invest in one another or finance customers, money can appear multiple times as “growth” across income statements. A SoftBank-OpenAI JV heightens scrutiny: if SoftBank (a major AI investor) underwrites adoption while co‑owning the reseller, and those revenues flow back to OpenAI (its partner), investors can overestimate independent demand.
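A toy model makes the double counting concrete. Every payment in a supply chain is revenue for its recipient, so revenue summed across firms always exceeds what end customers spend; the concern is how large that multiple gets when part of the customer's payment is itself funded by credits from an owner of the channel. The firm labels and amounts below are invented placeholders, not a description of any actual company's flows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Payment:
    payer: str
    payee: str               # the payee books this amount as revenue
    amount: float
    subsidized: float = 0.0  # portion paid with investor-funded credits (hypothetical)


def summarize(payments: list[Payment], end_customers: set[str]) -> dict[str, float]:
    """Compare revenue booked across the chain with unsubsidized end-customer cash."""
    booked = sum(p.amount for p in payments)
    customer_cash = sum(p.amount - p.subsidized for p in payments if p.payer in end_customers)
    return {
        "booked_revenue": booked,
        "customer_cash": customer_cash,
        "multiple": booked / customer_cash if customer_cash else float("inf"),
    }


# Toy chain with made-up amounts: one customer purchase flows down the supply chain.
chain = [
    Payment("customer", "reseller_jv", 100.0, subsidized=40.0),
    Payment("reseller_jv", "model_vendor", 70.0),
    Payment("model_vendor", "cloud", 50.0),
    Payment("cloud", "gpu_vendor", 30.0),
]
print(summarize(chain, end_customers={"customer"}))
```

Here 250 of booked revenue traces back to 60 of unsubsidized customer cash, a multiple of about 4.2; had the customer paid the full 100 itself, the multiple would be 2.5.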

Two things can be true at once. Genuine demand is strong: data center capacity in Japan is tight, multimodal agents are improving monthly, and enterprise AI budgets are rising. At the same time, related‑party deals can inflate top-line optics and increase systemic risk if GPU purchase commitments, customer credits, and cross‑ownership feed a “cash loop.” The TechCrunch Equity team called this out: operators should separate underlying unit economics from deal optics.

Competitive Angle: Fit by Use Case

Enterprises now have at least four credible routes in Japan:

- OpenAI via Crystal Intelligence: best-in-class multimodal capability and tool use; stronger local support; likely fastest path to pilots.

- Amazon Bedrock on AWS (Anthropic Claude, etc.): deep VPC controls, enterprise guardrails, and integration with existing AWS data estates.

- Google Cloud (Gemini 1.5): long-context strengths, strong search/tooling ecosystem, and competitive pricing for high‑throughput workloads.

- Domestic models and SIs (NTT, NEC, Fujitsu, rinna, Preferred Networks): better on-prem options, data sovereignty, and Japanese language nuances at lower cost for targeted tasks.

Decision drivers: Japanese language quality on your domain data, total cost to operate (inference cost + integration + rework), data residency guarantees, and vendor neutrality. If you expect regulatory audits or need on‑prem, domestic models may be more controllable; for agentic workflows and breadth of tools, OpenAI remains a front‑runner.
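The total-cost driver can be written as inference + integration + rework and compared across routes. A sketch with hypothetical annual figures: the option names and numbers are placeholders chosen to show that the cheapest inference is not automatically the cheapest total, not benchmarks of any vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    inference: float    # annual model/API spend
    integration: float  # annual integration and SI cost (amortized build plus upkeep)
    rework: float       # annual cost of staff correcting or redoing bad outputs

    @property
    def total(self) -> float:
        return self.inference + self.integration + self.rework


def rank_by_tco(options: list[Option]) -> list[Option]:
    """Return options sorted by total cost to operate, cheapest first."""
    return sorted(options, key=lambda o: o.total)


# Hypothetical annual figures (millions of yen) for two options on the same workload.
options = [
    Option("domestic_model_onprem", inference=60.0, integration=140.0, rework=40.0),
    Option("hosted_frontier_model", inference=120.0, integration=80.0, rework=20.0),
]
for o in rank_by_tco(options):
    print(f"{o.name}: {o.total:.0f}")
```

With these inputs the hosted option totals 220 against 240 even though its inference line is twice as high; different integration or rework estimates can flip the ranking, which is why the rework term deserves real measurement.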

What This Changes for Operators

The JV reduces friction: local contracting, yen billing, Japanese support, and a clearer APPI compliance path. That can move programs from experimentation to production faster. The trade‑offs: potential lock‑in to the OpenAI–Azure–Nvidia stack and the need to manage currency exposure (yen depreciation can raise unit costs). Also, watch whether discounts or credits embedded by a co‑owned reseller mask your true total cost of ownership (TCO).
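One way to check whether credits are masking TCO is to compute the subsidized share of list-price spend and the jump in invoiced cost if the credits do not renew. A minimal sketch with hypothetical figures (100 at list, 30 in credits); `subsidy_share` and `renewal_jump` are illustrative helpers, not part of any vendor's billing API.

```python
def subsidy_share(list_cost: float, credits: float) -> float:
    """Fraction of list-price spend covered by vendor credits."""
    if list_cost <= 0:
        raise ValueError("list_cost must be positive")
    return credits / list_cost


def renewal_jump(list_cost: float, credits: float) -> float:
    """Percentage increase in the invoice if the credits are not renewed."""
    net = list_cost - credits  # what you are actually invoiced today
    if net <= 0:
        raise ValueError("credits cover the full list cost; the increase is unbounded")
    return credits / net * 100.0


# Hypothetical contract: 100 at list price, 30 covered by credits.
print(f"subsidized share: {subsidy_share(100.0, 30.0):.0%}")
print(f"invoice increase if credits lapse: {renewal_jump(100.0, 30.0):.1f}%")
```

A 30% subsidy looks modest, but losing it raises the invoice by about 43%, because the increase is measured against the discounted base.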

Recommendations

- Procurement: demand a transparent bill of materials covering model usage rates, Azure region, GPU class, support fees, and any credits. Include audit rights, and clawbacks if actual usage diverges from the assumptions behind the pricing.

- Finance: ask for related‑party disclosures (who funds what), and track the share of spend subsidized by vendor credits. Evaluate net TCO against AWS/GCP and domestic model baselines.

- Architecture: design for portability. Standardize interfaces so you can swap models (OpenAI, Claude, Gemini, a domestic LLM) without rewriting business logic; keep RAG, vector DBs, and guardrails vendor‑neutral.

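- Compliance: lock down data residency, the shortest data retention your vendor offers, and a contractual commitment that your data is not used for training. Document a privacy impact assessment (PIA) and records of processing for APPI, backed by auditable logs and PII redaction.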
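The portability recommendation amounts to putting a thin, provider-neutral interface between business logic and model vendors. A minimal sketch, assuming Python's `typing.Protocol`; `ChatModel`, `EchoModel`, and `summarize_ticket` are hypothetical names, and the echo adapter stands in for real adapters that would wrap each vendor's SDK (not shown, to avoid guessing at their signatures).

```python
from typing import Protocol


class ChatModel(Protocol):
    """Provider-neutral interface (hypothetical, not any vendor's SDK)."""

    name: str

    def complete(self, system: str, user: str) -> str: ...


class EchoModel:
    """Stand-in adapter; a real one would wrap a vendor SDK call here."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, system: str, user: str) -> str:
        # Ignores the system prompt and echoes the input, tagged with the adapter name.
        return f"[{self.name}] {user}"


MODELS: dict[str, ChatModel] = {}


def register(model: ChatModel) -> None:
    MODELS[model.name] = model


def summarize_ticket(ticket_text: str, model_name: str) -> str:
    """Business logic depends only on the ChatModel interface, not on a vendor."""
    model = MODELS[model_name]
    return model.complete("Summarize this support ticket in Japanese.", ticket_text)


register(EchoModel("openai"))
register(EchoModel("domestic-llm"))
print(summarize_ticket("Login fails after password reset.", "domestic-llm"))
```

Swapping vendors then means registering a different adapter and changing `model_name`, while `summarize_ticket` stays untouched. Prompts may still need per-model tuning, especially for Japanese-language output.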

What to Watch Next

Signals to monitor: JV pricing discipline (seat vs. usage), the extent of customer credits, Azure capacity in Japan, and whether large deals recognize revenue on a gross or net basis. Also watch for any Japan Fair Trade Commission (JFTC) attention to related‑party dynamics and whether domestic models close the quality gap for Japanese‑language, domain‑specific tasks. If growth depends heavily on subsidies, expect a correction; if customers renew on unsubsidized economics, this JV becomes a durable channel.
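The gross-versus-net question can change reported revenue several-fold on the same deal. Under IFRS 15 and ASC 606, an entity reports gross revenue as a principal when it controls the service before it is transferred to the customer, and reports only its net fee as an agent otherwise. The sketch below reduces that judgment to a boolean and uses a hypothetical deal of 100 billed with 80 passed to the supplier; it illustrates the arithmetic, not the accounting determination.

```python
def recognized_revenue(billings: float, passed_to_supplier: float, is_principal: bool) -> float:
    """Revenue a reseller reports on one deal (simplified).

    A principal reports gross billings; an agent reports only its net fee,
    i.e. billings minus the amount passed through to the supplier.
    """
    return billings if is_principal else billings - passed_to_supplier


# Hypothetical deal: 100 billed to the customer, 80 passed through to the model vendor.
gross = recognized_revenue(100.0, 80.0, is_principal=True)
net = recognized_revenue(100.0, 80.0, is_principal=False)
print(f"gross: {gross:.0f}, net: {net:.0f}")
```

The same deal shows up as 100 of revenue under gross treatment and 20 under net, a fivefold difference.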
