What Changed and Why It Matters

Guardio raised $80 million in a round led by ION Crossover Partners and is rolling out a detector to find malicious code and websites built with AI “vibe coding” tools. It also signed a deal with site-builder Lovable to scan all sites on its platform and is adding consumer-grade visibility features that resemble enterprise DLP and SaaS posture management. For operators, the immediate impact is the prospect of platform-level screening that targets AI-accelerated phishing and scam infrastructure before it reaches users.

The company claims 500,000 paying users and says it hit $100 million in ARR, has tripled its valuation since 2021, and is “not a unicorn” yet. That combination (growth capital, a platform distribution deal, and a consumer-to-enterprise feature set) positions Guardio to push AI-era web safety into the build pipeline, not just the browser.

Key Takeaways

- New detector targets artifacts in code and sites produced by AI tools; first platform partnership with Lovable suggests a B2B2C path to scale.

- Guardio reports 500,000 paying users and $100M ARR; if accurate, the implied ARPU (roughly $200 per year) is high for consumer security, so buyers should ask how ARR is defined.

- Consumer “DLP-like” features surface publicly shared documents, sensitive data exposure, and accounts lacking MFA—blending identity hygiene with phishing protection.

- Accuracy, privacy, and developer workflows will determine adoption; false positives on a site-builder platform can be costly.

- Timing aligns with a surge in AI-enabled phishing and regulatory pressure on platforms to police abuse.

Breaking Down the Announcement

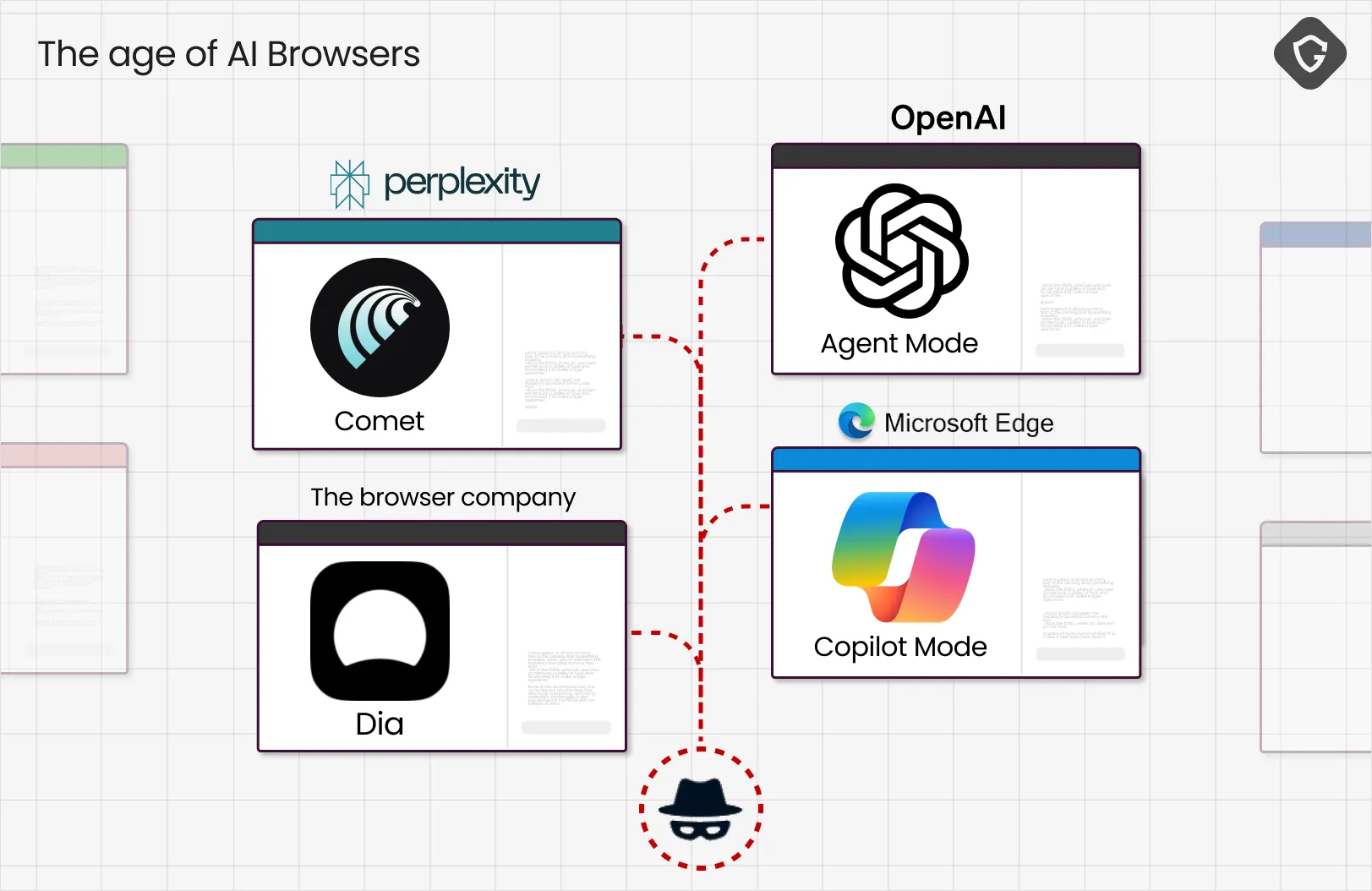

Guardio is extending beyond its roots in browser extensions that block malicious and phishing sites. The new tool analyzes code and pages for signatures and patterns associated with AI-generated output—the kinds of artifacts that “vibe coding” systems produce when they scaffold entire sites from prompts. The point isn’t to label AI content per se, but to detect malicious sites and infrastructure that AI now makes faster and cheaper to spin up.

The Lovable partnership operationalizes this at platform scale: every site built on Lovable is scanned, and those that trip risk thresholds can be blocked or reviewed. This is a meaningful shift from user-end filtering to supply-side quality control—closer to CI/CD and trust-and-safety pipelines. The deal followed reports of security gaps in Lovable-built sites, creating a clear “why now.”

On the consumer front, Guardio is rolling out visibility features that show users which documents are publicly shared, where sensitive data may be exposed, and which accounts lack multi-factor authentication. Integrations with Outlook and Facebook are planned, and some features will reach the free tier next year. These resemble lightweight DLP/SSPM capabilities adapted for individuals—surfacing risk across fragmented accounts without requiring enterprise security expertise.

Industry Context and Competitive Angle

Generative tools compress the time and skill needed to build phishing pages, fake stores, and supporting infrastructure. Traditional defenses—browser safe browsing lists, email gateways, registrar takedowns—remain essential but reactive. Guardio’s pitch is earlier detection based on code-level and site-level artifacts common to AI-generated builds.

Overlap exists with several categories: code and app security (e.g., SAST/DAST providers), phishing intelligence and takedown services, and consumer security suites (identity protection, scam filtering). What’s distinctive here is the combination of D2C scale (500k paying users), a platform integration (Lovable), and a detector aimed at AI-era patterns rather than only known indicators of compromise. If Guardio can prove materially better catch rates or faster time-to-takedown on AI-built threats, it could complement (not replace) email security and browser protections. If not, incumbents with broader distribution will be hard to displace.

Risks and Open Questions

- Detection quality: Buyers should request precision/recall on malicious site detection, time-to-detection, and false positive rates, segmented by AI-generated vs human-built content. “AI-made” is not the same as “malicious.”

- Evasion dynamics: Attackers can mask artifacts or generate adversarial code. How frequently are models and rules updated, and how quickly can Guardio respond to novel patterns?

- Privacy and governance: Account visibility features require broad API scopes. Clarify data retention, on-device vs cloud processing, token storage, and compliance (e.g., GDPR/CCPA). Independent audits and red-team reports would help.

- Business model signals: $100M ARR with 500k paying users implies roughly $200 ARPU/year—high for consumer security. Confirm definitions (GAAP vs run-rate, refunds) and cohort retention before betting on long-term viability.

- Platform impact: For Lovable and peers, operationalize review flows and appeals for flagged sites to avoid harming legitimate developers. Expect policy and UX work, not just an API call.
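The ARPU figure in the business-model bullet above is simple division, and it is worth redoing before quoting it. The short Python sketch below reproduces the arithmetic from the two reported numbers; the function name `implied_arpu` is illustrative, and the result says nothing about how Guardio itself defines ARR.

```python
def implied_arpu(arr_usd: float, paying_users: int) -> float:
    """Annual revenue per paying user implied by a reported ARR figure."""
    if paying_users <= 0:
        raise ValueError("paying_users must be positive")
    return arr_usd / paying_users


# Inputs are the company-reported figures cited in this article, not audited numbers.
annual = implied_arpu(100_000_000, 500_000)
monthly = annual / 12

print(f"${annual:.0f}/year, about ${monthly:.2f}/month per paying user")
# prints "$200/year, about $16.67/month per paying user"
```

If ARR is a run-rate extrapolated from a recent month, or if it includes revenue that is later refunded, the realized per-user figure could be lower. That is why the definition matters.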

What This Changes for Operators

For site builders, no‑code tools, and hosting platforms, pre‑publication scanning is becoming table stakes as AI increases the volume and polish of abuse. Embedding a detector like Guardio’s in the creation pipeline can reduce downstream takedown costs and strengthen trust. For consumer-facing brands, bundling account exposure checks and phishing protection can lower fraud and support costs—provided privacy controls are explicit and opt-in.

Recommendations

- Platform operators: Pilot scanning on a subset of new sites. Track detection precision/recall, developer friction (appeals, time-to-resolution), and user harm reduction. Bake thresholds, quarantine workflows, and audit logs into your trust-and-safety playbook.

- Security leaders (SMB/consumer-facing): Evaluate whether consumer “DLP-like” visibility can meaningfully reduce account takeover and data exposure. Demand granular permission scopes, data minimization, and local processing where feasible.

- Product teams building AI site/code tools: Integrate preflight checks (malware, secrets, policy violations) and clear feedback when content is blocked. Offer remediation guidance to reduce false positives and developer churn.

- Procurement and finance: Validate Guardio’s claims with third-party references. Ask for cohort retention, refund rates, and false positive SLAs, plus independent security attestations before full rollout.
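For the pilot metrics recommended above, here is a minimal Python sketch of how precision, recall, and false positive rate could be computed from manually reviewed scan results, segmented by AI-generated versus human-built sites. The `Review` record, its field names, and the sample counts are all hypothetical. They do not reflect Guardio's or Lovable's actual data model or results.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Review:
    origin: str      # "ai" or "human": hypothetical label for how the site was built
    flagged: bool    # detector verdict
    malicious: bool  # ground truth from manual review


def metrics(reviews):
    """Precision, recall, and false positive rate; None when a ratio is undefined."""
    c = Counter()
    for r in reviews:
        if r.flagged:
            c["tp" if r.malicious else "fp"] += 1
        else:
            c["fn" if r.malicious else "tn"] += 1

    def ratio(num, den):
        return num / den if den else None

    return {
        "precision": ratio(c["tp"], c["tp"] + c["fp"]),
        "recall": ratio(c["tp"], c["tp"] + c["fn"]),
        "false_positive_rate": ratio(c["fp"], c["fp"] + c["tn"]),
    }


def metrics_by_origin(reviews):
    """Compute the same metrics separately for each origin segment."""
    groups = {}
    for r in reviews:
        groups.setdefault(r.origin, []).append(r)
    return {origin: metrics(rs) for origin, rs in groups.items()}


# Made-up pilot sample, for illustration only.
sample = (
    [Review("ai", True, True)] * 3         # true positives
    + [Review("ai", True, False)] * 1      # false positive
    + [Review("ai", False, True)] * 1      # false negative
    + [Review("ai", False, False)] * 5     # true negatives
    + [Review("human", True, True)] * 1
    + [Review("human", False, True)] * 1
    + [Review("human", False, False)] * 8
)

for origin, m in metrics_by_origin(sample).items():
    print(origin, m)
```

Reporting the segments separately keeps "AI-made" from being conflated with "malicious": a detector can look strong in aggregate while producing a disproportionate share of false positives on legitimate AI-built sites.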

Bottom line: this is a timely move to meet AI-driven abuse at the source. The Lovable deal gives Guardio a credible path to platform-scale impact, but adoption will hinge on measurable accuracy, minimal developer friction, and strong privacy guarantees.
