Why This Funding Actually Matters

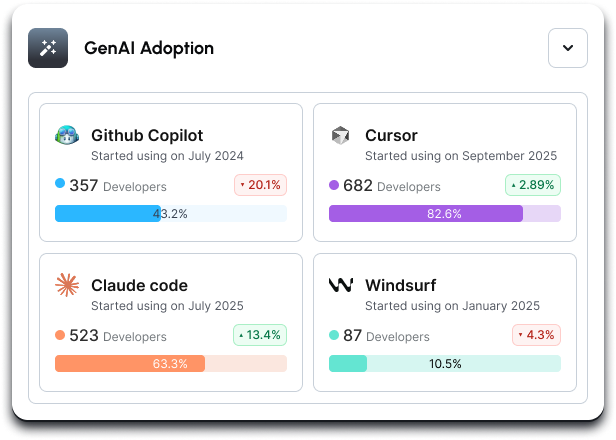

Milestone raised $10M to correlate GenAI coding tool usage with hard engineering outcomes like feature delivery speed, code quality, and bug attribution. For leaders being pushed to “get ROI from AI,” this moves the conversation beyond license counts and anecdotes toward measurable impact. Customers including Kayak, Monday, and Sapiens suggest early enterprise traction; partnerships with GitHub, Atlassian, and others signal vendor‑agnostic coverage across common developer stacks.

Key Takeaways

- Substance: Milestone builds a cross‑tool “GenAI data lake” that ties usage to engineering KPIs, including identifying bugs linked to AI‑generated code.

- Business impact: If accurate, this enables CFO‑grade ROI views and lets CTOs rationalize or expand Copilot‑style spend with evidence.

- Catch: It requires access to codebases and workflow systems, raising IP, security, and developer‑trust considerations.

- Timing: With GitHub Copilot reportedly hitting 20M users, enterprises need governance and value proof before further rollout.

- Risk: Correlation isn’t causation; naïve metrics can promote gaming (Goodhart’s law) and create surveillance concerns.

Breaking Down the Announcement

The $10M seed round is led by Heavybit and Hanaco, with participation from Atlassian Ventures and angels including GitHub cofounder Tom Preston‑Werner, ex‑AT&T CEO John Donovan, Accenture’s Paul Daugherty, and Datadog’s former president Amit Agrawal. Milestone’s pitch is to connect four data pillars (codebases, project management platforms, team structure, and codegen tools) into a unified telemetry layer that shows which teams use AI, how it affects delivery, and where AI‑generated code may introduce defects.

CEO Liad Elidan and CTO Professor Stephen Barrett (Trinity College Dublin) emphasize enterprise‑grade focus and integrations. The company is partnering with GitHub, Augment Code, Qodo, Continue, and Atlassian to keep pace as coding assistants shift from autocomplete to chat to agentic workflows. Early feedback, per Milestone, is that customers expand rather than cut GenAI licenses once they see the data. That is useful, but it remains an anecdotal vendor claim rather than a public benchmark.

What This Changes for Operators

Most teams track Copilot‑style adoption via license utilization and subjective developer surveys. That doesn’t convince finance. If Milestone reliably ties usage to DORA‑like metrics (lead time, change failure rate), code review latency, escaped defects, and rework, leaders can run controlled rollouts: which squads actually ship faster without degrading quality? Where do AI‑heavy commits correlate with post‑release incidents? This moves AI from “belief” to measurable continuous improvement.
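To make "DORA‑like metrics" concrete, here is a minimal sketch of two of them computed from deployment records. The `Deployment` record shape is a hypothetical assumption for illustration, not Milestone's schema or any vendor's API; real pipelines would pull these timestamps from your VCS and deploy tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    first_commit_at: datetime  # earliest commit included in the deploy
    deployed_at: datetime      # when the change reached production
    caused_failure: bool       # True if it triggered an incident, rollback, or hotfix


def lead_time_hours(deploys: list[Deployment]) -> float:
    """Median commit-to-production lead time, in hours."""
    return median(
        (d.deployed_at - d.first_commit_at).total_seconds() / 3600 for d in deploys
    )


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Share of deployments that led to a failure in production."""
    return sum(d.caused_failure for d in deploys) / len(deploys)


# Illustrative records (made-up numbers).
t0 = datetime(2024, 1, 1, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=20), False),
    Deployment(t0, t0 + timedelta(hours=30), True),
    Deployment(t0, t0 + timedelta(hours=40), False),
    Deployment(t0, t0 + timedelta(hours=50), False),
]
print(lead_time_hours(deploys))      # 35.0
print(change_failure_rate(deploys))  # 0.25
```

The median is used for lead time because a few long‑running changes would otherwise dominate a mean.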

Practical scenarios become possible: require enhanced review or additional testing when a PR includes a high proportion of AI‑suggested code; compare throughput before/after enabling chat‑based assistants; identify teams achieving gains and document their workflows for broader rollout. If you can quantify both lift and regression, you can reallocate licenses, tune policies, and justify spend with confidence.
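The first scenario, escalating review when a PR is AI‑heavy, might look like the sketch below. It assumes your telemetry can attribute accepted AI suggestions to added lines (the `ai_suggested_lines_added` field is hypothetical), and the 0.5 threshold and policy labels are illustrative placeholders, not values from Milestone or GitHub.

```python
from dataclasses import dataclass

# Hypothetical threshold: tune per team and revisit as attribution improves.
AI_SHARE_THRESHOLD = 0.5


@dataclass
class PullRequest:
    number: int
    lines_added: int
    ai_suggested_lines_added: int  # assumes telemetry attributes accepted suggestions


def ai_share(pr: PullRequest) -> float:
    """Fraction of added lines attributed to AI (0.0 for empty PRs, capped at 1.0)."""
    if pr.lines_added == 0:
        return 0.0
    # Cap at 1.0 in case attribution over-counts (e.g. suggestions later edited).
    return min(pr.ai_suggested_lines_added / pr.lines_added, 1.0)


def review_policy(pr: PullRequest, threshold: float = AI_SHARE_THRESHOLD) -> list[str]:
    """Extra review requirements for a PR; an empty list means the default process applies."""
    if ai_share(pr) >= threshold:
        return ["require-senior-review", "require-added-tests"]
    return []


for pr in [PullRequest(101, 200, 150), PullRequest(102, 80, 10), PullRequest(103, 0, 0)]:
    print(pr.number, review_policy(pr))
```

Because attribution is imperfect, treating the output as a routing hint for reviewers, rather than a hard merge block, keeps false positives cheap.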

Risks, Gaps, and Governance

- Data access and IP: The platform needs deep hooks into repos and work systems. Demand clarity on data residency, encryption, audit logging, least‑privilege scopes, and whether on‑prem/private‑VPC deployment is available. Require SOC 2/ISO attestations and a robust DPA.

- Attribution accuracy: “AI‑generated bug” detection depends on instrumenting editor events, commit metadata, and tool telemetry. Expect false positives/negatives; use as a signal, not a verdict.

- Causation vs. correlation: Improved cadence might reflect parallel process changes or staffing. Design A/B or staggered rollouts to isolate effect sizes.

- Metric gaming: Over‑emphasis on output proxies (e.g., PR counts) can degrade quality. Stick to balanced scorecards (e.g., lead time + change failure rate + post‑release defects).

- Developer trust: Broad telemetry can feel like surveillance. Communicate scope, purpose, and guardrails; avoid individual‑level punitive dashboards.

- Early product risk: Seed‑stage platform maturity and feature stability may vary. Validate roadmap fit and integration depth before scaling.
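Staggered rollouts are what make the correlation‑versus‑causation problem tractable. One standard technique (not necessarily the one Milestone uses) is difference‑in‑differences: subtract the control teams' before/after change from the treated teams' change, which nets out shared trends such as an org‑wide process change. The sketch below assumes the two groups would have followed parallel trends without the rollout; the numbers are made up for illustration.

```python
from statistics import mean


def diff_in_diff(
    treated_before: list[float],
    treated_after: list[float],
    control_before: list[float],
    control_after: list[float],
) -> float:
    """Difference-in-differences estimate of a rollout's effect on one metric.

    Subtracting the control group's change removes trends shared by all teams.
    Valid only under the parallel-trends assumption.
    """
    treated_change = mean(treated_after) - mean(treated_before)
    control_change = mean(control_after) - mean(control_before)
    return treated_change - control_change


# Illustrative weekly median lead times in hours (made-up numbers, not real data).
effect = diff_in_diff(
    treated_before=[40.0, 42.0, 38.0],
    treated_after=[30.0, 32.0, 31.0],
    control_before=[41.0, 39.0, 40.0],
    control_after=[37.0, 36.0, 38.0],
)
print(f"Estimated effect on lead time: {effect:+.1f} hours")  # -6.0 hours
```

A naive before/after comparison of the treated teams would credit the assistant with a 9‑hour drop; 3 of those hours also appeared in the control group, so the adjusted estimate is 6 hours.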

Competitive Angle

GitHub Copilot Business/Enterprise and JetBrains AI provide usage analytics, but primarily within their ecosystems. Engineering analytics platforms like Jellyfish, LinearB, Uplevel, Swarmia, and Code Climate Velocity quantify delivery metrics yet lack deep, cross‑assistant telemetry or AI‑specific bug attribution. Security tools (e.g., SAST/DAST) catch vulnerable code but don’t tie issues back to AI usage or ROI. Milestone’s pitch is cross‑vendor correlation: it pulls from code, PM tools, org structure, and multiple AI assistants to answer the CFO’s “Is this worth it?” question. The moat will be breadth of integrations, attribution accuracy, and enterprise‑grade data controls.

Recommendations

- Define hypotheses up front: e.g., “Copilot + chat reduces lead time by 15% without increasing change failure rate.” Pick 2-3 KPIs that balance speed and quality.

- Run a 60-90 day pilot: Choose 3-5 squads with similar work types; stagger enablement to isolate effects. Compare before/after and against control teams.

- Harden governance: Require read‑only, least‑privilege access; review data flows, retention, and residency. Get SOC 2/ISO reports and negotiate a DPA with incident SLAs.

- Avoid surveillance optics: Aggregate to team level for stakeholders; use individual‑level data only for coaching, and only with explicit consent and clear policies.

- Operationalize guardrails: Flag PRs with high AI‑generated content for extra tests or senior review; track whether guardrails reduce incidents without stalling throughput.

- Plan for vendor churn: Ensure the platform supports multiple assistants and evolving agentic workflows so telemetry stays useful as your tool stack shifts.
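A hypothesis like the example above ("reduces lead time by 15% without increasing change failure rate") can be written down as an explicit pass/fail check before the pilot starts, so speed gains cannot quietly mask quality regressions. This is a minimal sketch; `PilotMetrics` and `evaluate_hypothesis` are hypothetical names, and in practice the pilot figures should be compared against control teams rather than a simple baseline.

```python
from dataclasses import dataclass


@dataclass
class PilotMetrics:
    lead_time_hours: float      # e.g. median commit-to-deploy time
    change_failure_rate: float  # share of deploys causing incidents (0..1)


def evaluate_hypothesis(
    baseline: PilotMetrics,
    pilot: PilotMetrics,
    min_lead_time_reduction: float = 0.15,  # the "15%" in the example hypothesis
    max_cfr_increase: float = 0.0,          # "without increasing change failure rate"
) -> dict[str, bool]:
    """Balanced check: the pilot passes only if speed improves AND quality holds."""
    reduction = (baseline.lead_time_hours - pilot.lead_time_hours) / baseline.lead_time_hours
    speed_ok = reduction >= min_lead_time_reduction
    quality_ok = pilot.change_failure_rate - baseline.change_failure_rate <= max_cfr_increase
    return {"speed_ok": speed_ok, "quality_ok": quality_ok, "passed": speed_ok and quality_ok}


baseline = PilotMetrics(lead_time_hours=40.0, change_failure_rate=0.10)
# 20% faster, but change failure rate rose from 10% to 14%: fails the hypothesis.
print(evaluate_hypothesis(baseline, PilotMetrics(32.0, 0.14)))
# {'speed_ok': True, 'quality_ok': False, 'passed': False}
```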

Bottom line: If you’re scaling coding assistants, you need an observability layer that ties usage to outcomes. Milestone’s approach is promising, but treat it like any critical telemetry system: pilot rigorously, validate attribution, and bake in governance before you bet your AI roadmap on the findings.
