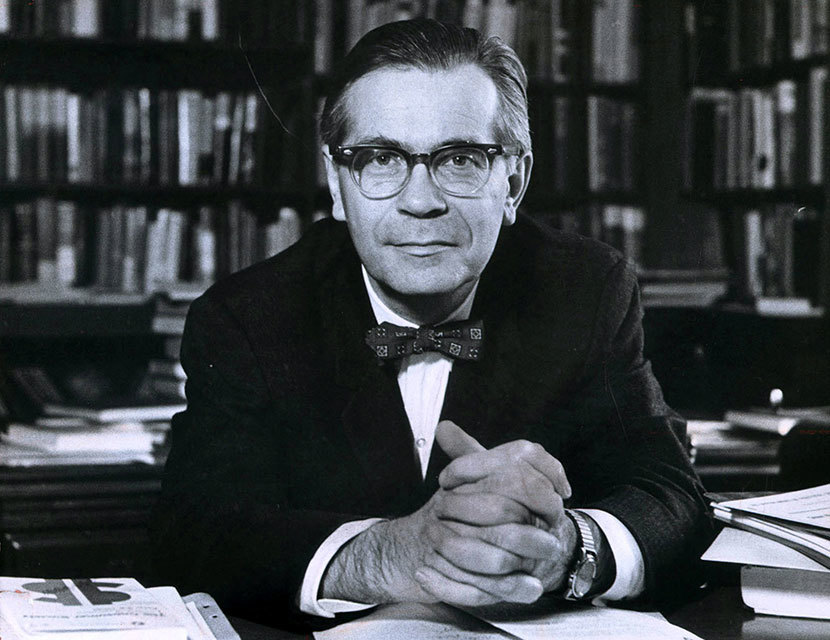

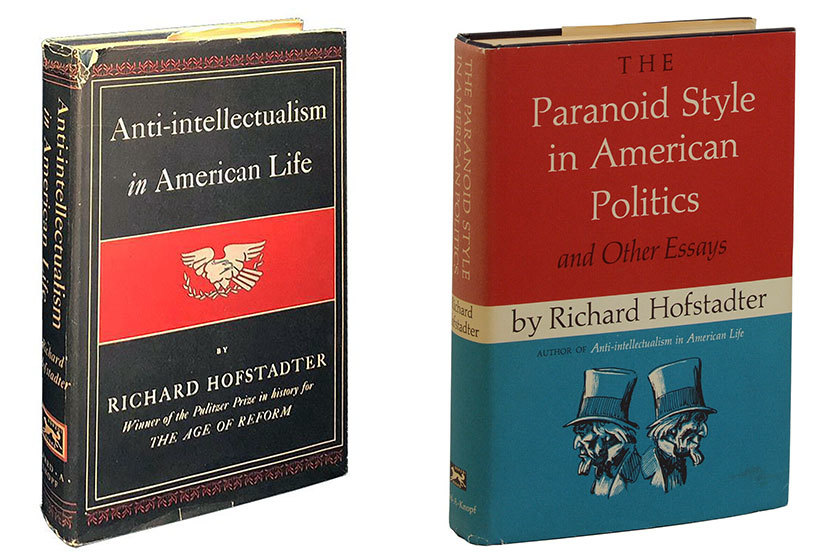

By now, we’ve all felt it: mistrust spreads faster than truth. Decades before smartphones and social feeds, Richard Hofstadter mapped the “paranoid style”: the psychological pull toward narratives that assign intent, connect everything, and explain away uncertainty. Today’s digital platforms, AI-driven personalization, and fractured media ecosystem have turned that impulse into an always-on engine. It has never been easier to become, or be influenced by, a conspiracy theorist. That’s not just a civic problem; it’s a material business risk.

Executive Hook: Trust is your new uptime metric

Conspiracy beliefs have leapt from the fringes into mainstream discourse, accelerated by algorithmic feeds, creator economies, and synthetic media. The impact is measurable: slower product adoption, stalled partnerships, employee unrest, and regulatory scrutiny. I’ve sat in enough war rooms to know that misinformation incidents don’t behave like PR crises. They behave like distributed systems failures. If you don’t instrument them, they cascade.

Industry Context: Why leaders must treat misinformation as an IT and governance issue

What changed? The cost of narrative formation collapsed. A single creator with a smartphone, a micro-community, and a handful of AI tools can now outpace legacy communications functions. Research from organizations like the World Economic Forum and the Reuters Institute has highlighted mis/disinformation as a top short-term global risk and a persistent drag on institutional trust. Meanwhile, executive surveys consistently show that roughly 88% of C-suite leaders prioritize AI to boost productivity and innovation—raising the stakes for trustworthy deployment and change management.

For enterprises, that convergence translates into concrete exposure across four vectors: erosion of customer trust and adoption, internal workforce disruption, reputational damage that compounds across markets, and increased regulatory/compliance risk. This is not solved by a sharper press release. It requires telemetry, design decisions, workforce enablement, and regulator-ready governance.

Core Insight: Design, emotion, and AI are the real leverage points

Conspiratorial thinking isn’t just “bad information”; it is an emotional operating system. Products that are ambiguous, opaque, or high-friction invite folk theories to fill the gap. Add algorithmic feeds that reward outrage and pattern-seeking, and the result is a fertile substrate for event, systemic, and even “superconspiracy” narratives to spread.

AI is double-edged. Generative tools can cheaply fabricate convincing audio, video, and text—and supercharge narrative velocity. But AI can also reduce susceptibility when used well. Controlled studies have shown that AI-powered dialogue systems can decrease endorsement of conspiratorial claims by up to 20%, especially when they engage respectfully, provide transparent sourcing, and acknowledge emotion. The takeaway: AI won’t “solve” misinformation, but it can be a net positive if embedded into product, policy, and people practices with intent.

Common Misconceptions: What most companies get wrong

- “This is a communications problem.” It’s a systems problem. Without monitoring, product guardrails, and governance, comms alone is a bandage.

- “More facts will fix it.” Facts matter, but tone and timing matter more. People adopt stories that reduce uncertainty and affirm identity.

- “Our employees won’t fall for this.” They will—and internal narratives spread faster than external ones if you don’t communicate early and often.

- “If it’s not illegal, it’s not our risk.” Regulators move faster when trust erodes. Expect inquiries on disclosures, safety claims, data use, and AI.

- “We can’t measure narratives.” You can. Treat narratives like incidents: detect, triage, respond, and learn with clear metrics.

A Five-Phase Strategic Framework: Defend trust while you scale AI

This phased approach has worked across multiple transformations I’ve led. Start small, instrument aggressively, and iterate. Timelines are indicative; adjust to your risk profile.

Phase 1 (Days 0–30): Sense and baseline narrative risk

- Stand up a cross-functional “Trust Ops” pod (CIO, CISO, CCO, Legal, HR) with a 24/7 escalation path.

- Deploy a listening stack: social listening, app store and community scraping, threat intel, and media monitoring. Add lightweight anomaly detection for spikes in narrative velocity.

- Define incident metrics: Narrative mean-time-to-detect (MTTD), mean-time-to-respond (MTTR), exposure index (reach × sentiment × credibility), and conversion impact (trial, churn, support volume).

- Baseline your top 10 narratives by product, market, and stakeholder segment.
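To make the Phase 1 metrics concrete, here is a minimal Python sketch of how a Trust Ops pod might compute them. It is illustrative only: the `NarrativeIncident` record, its field names, the 0-to-1 scales for sentiment and credibility, the choice to start the MTTR clock at detection, and the z-score spike check are all assumptions of this sketch, not a prescribed implementation.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean, pstdev


@dataclass
class NarrativeIncident:
    """One tracked narrative. Field names and scales are illustrative assumptions."""
    name: str
    first_seen: datetime   # earliest known appearance in any monitored channel
    detected: datetime     # when the Trust Ops pod flagged it
    responded: datetime    # when the first response was published
    reach: float           # estimated number of people exposed
    sentiment: float       # assumed scale: 0.0 (benign) to 1.0 (strongly negative)
    credibility: float     # assumed scale: 0.0 (implausible) to 1.0 (highly believable)


def exposure_index(incident: NarrativeIncident) -> float:
    """Exposure index = reach x sentiment x credibility."""
    return incident.reach * incident.sentiment * incident.credibility


def mttd_hours(incidents: list[NarrativeIncident]) -> float:
    """Mean time to detect: average hours from first appearance to detection."""
    return mean((i.detected - i.first_seen).total_seconds() / 3600 for i in incidents)


def mttr_hours(incidents: list[NarrativeIncident]) -> float:
    """Mean time to respond: average hours from detection to first response."""
    return mean((i.responded - i.detected).total_seconds() / 3600 for i in incidents)


def is_velocity_spike(hourly_mentions: list[int], threshold: float = 3.0) -> bool:
    """Flag the latest hour if it is more than `threshold` standard
    deviations above the mean of the preceding hours."""
    *history, latest = hourly_mentions
    if len(history) < 2:
        return False  # not enough history to form a baseline
    baseline, spread = mean(history), pstdev(history)
    if spread == 0:
        return latest > baseline  # flat history: any increase counts
    return (latest - baseline) / spread > threshold
```

In practice you would feed `is_velocity_spike` the hourly mention counts per narrative from your listening stack and rank open narratives by `exposure_index` to decide which ones the pod triages first. The threshold of 3.0 is a starting point to tune against your own false-alarm tolerance.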

Phase 2 (Days 30–90): Build transparent, emotionally attuned communications

- Publish a “How we know” standard: cite sources, show uncertainty ranges, and explain trade-offs in plain language.

- Create narrative playbooks with pre-approved language for high-likelihood claims (safety, data use, pricing, layoffs).

- Pilot AI-assisted response copilots that generate empathetic, source-linked replies; keep humans-in-the-loop for judgment calls.

- Establish SLAs: acknowledge within 2 hours, evidence-based update within 24 hours, full post-incident report within 7 days.
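The SLAs above are easy to state and easy to miss, so it helps to check them mechanically. The sketch below is one hedged way to do that in Python. The step names, the `sla_breaches` helper, and the decision to start every clock at the moment of detection are assumptions made for illustration; only the three time windows come from the SLA bullet.

```python
from datetime import datetime, timedelta

# Windows taken from the SLA bullet; the key names are illustrative.
SLA_WINDOWS = {
    "acknowledge": timedelta(hours=2),
    "evidence_update": timedelta(hours=24),
    "post_incident_report": timedelta(days=7),
}


def sla_breaches(detected_at: datetime,
                 completed_at: dict[str, datetime],
                 now: datetime) -> list[str]:
    """Return the SLA steps that were completed late, or that are
    still open past their deadline as of `now`."""
    breaches = []
    for step, window in SLA_WINDOWS.items():
        deadline = detected_at + window  # assumption: clock starts at detection
        done = completed_at.get(step)
        if done is None:
            if now > deadline:
                breaches.append(step)  # still open and overdue
        elif done > deadline:
            breaches.append(step)      # completed, but late
    return breaches
```

For example, an incident detected at 09:00 on March 1 and acknowledged at 10:30 meets the first SLA; if the evidence-based update only goes out at 12:00 the next day, `sla_breaches` reports `evidence_update` as missed while the post-incident report is still within its seven-day window.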

Phase 3 (Days 60–120): Embed ethical and privacy-by-design into products

- Reduce ambiguity in UX: add plain-language explainability for models and decisions that affect users; provide provenance labels (support C2PA where possible).

- Instrument consent: make data flows visible, revocable, and auditable. Dark patterns feed conspiracy narratives.

- Add “skepticism moments”: friction for high-risk actions (e.g., share dialogs that preview claims and sources before posting).

- Red-team features for mis/disinformation abuse scenarios; track mitigation backlog like security vulnerabilities.

Phase 4 (Days 0–90, then ongoing): Enable and protect the workforce

- Train managers and frontline teams to recognize narrative cues and respond without escalating. Provide approved responses and escalation paths.

- Offer well-being guardrails for AI-driven change: set expectations, avoid “always-on” rollout stress, and measure burnout risk. Remember: when roughly 88% of C-suite leaders prioritize AI, your people are processing nonstop change.

- Run quarterly simulations (tabletop exercises) that combine security, comms, and product teams to rehearse cross-channel misinformation incidents.

Phase 5 (Days 90–180): Engage regulators and industry coalitions

- Proactively brief regulators on your transparency, safety, and AI governance controls; document testing and post-incident learning loops.

- Join or form coalitions (e.g., standards around content authenticity, safety disclosures). Align with guidance emerging from McKinsey, Stanford HAI, and the World Economic Forum.

- Publish an annual Trust & Safety report with metrics, case studies, and roadmap commitments.

What “good” looks like in 6 months

- 48-hour Narrative MTTD, 72-hour MTTR for priority markets.

- Clear, public AI use disclosures embedded in product and policy.

- Measurable reduction in support volume tied to misinformation spikes (target 15–25%).

- Fewer regulatory surprises; faster, more constructive dialogues with authorities and media.

Action Steps for Monday Morning

- Appoint a Trust Ops lead and schedule a 60-minute risk triage across CIO, CISO, CCO, Legal, HR.

- Spin up a listening dashboard with three inputs this week: social, support tickets, and app store reviews. Tag top 10 narratives.

- Draft and approve two narrative playbooks: “Data use and privacy” and “Product safety/performance.”

- Enable provenance signals in one high-traffic surface (e.g., watermark key marketing assets; add model explanations to a top feature).

- Plan a 2-hour tabletop exercise for executives within 30 days, using a realistic misinformation scenario.

You don’t need to eradicate conspiracy thinking to compete. You need to shrink its blast radius. Treat trust like uptime: instrument it, rehearse for failure, and design for recovery. With the right telemetry, humane communications, responsible AI, and steady governance, you can scale innovation without trading away the one asset everything else depends on: credibility.
