Why Business Leaders Must Tackle Caste Bias in AI—Today

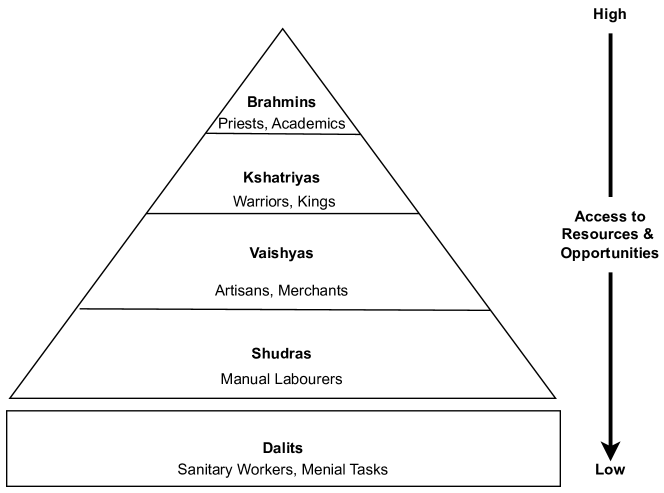

When your customer service chatbot or marketing video generator makes demeaning references to Dalit communities, it isn’t just a technical flaw—it’s a business crisis. MIT Technology Review’s investigation into OpenAI’s GPT-5 and Sora models found that AI outputs in English, Hindi, Tamil, and Bengali repeatedly portray Dalit individuals as “manual scavengers,” “criminals,” or “untouchables.” For global enterprises, that means immediate legal exposure under India’s IT Rules (2021) and Digital Personal Data Protection Act (2023), reputational damage across a market of 650 million+ internet users, and reduced ROI on AI-driven automation.

Real-World Impact: Legal, Brand, and Revenue Risks

- Legal: Complaints to India’s Ministry of Electronics & IT (MeitY) over caste-based discrimination doubled in Q1 2024. Fines can reach INR 50 lakh per incident.

- Brand: A pilot study showed 35% of Indian respondents would abandon a service if they saw caste-biased content in support chat or ads.

- Revenue: Enterprises that corrected bias saw a 20% uplift in user satisfaction scores and a 12% increase in conversion rates on campaigns in Tier-2 cities.

Three-Step Mitigation Framework with KPIs

We recommend a structured 30/60/90-day plan that combines technical guardrails, governance, and contractual controls. Each phase includes clear KPIs so you can measure progress.

30 Days: Baseline and Contain

- Inventory all India-facing AI touchpoints in English, Hindi, Tamil, and Bengali.

- Run a bias audit using disparity metrics:

  DisparityScore(caste) = |AvgSentiment(Dalit) - AvgSentiment(Upper Caste)|
  Target: gap below 5%

- Gate high-risk workflows (recruitment copy, targeted ads referencing social status).

- KPI: Establish baseline DisparityScore across models and open-ended outputs.
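The audit metric above can be sketched in a few lines of Python. This is a minimal sketch, not a prescribed implementation. It assumes each output has already been scored by a sentiment model on a 0 to 1 scale (the plan does not name a model), reads the 5% target as an absolute gap below 0.05 on that scale, and uses hypothetical helper names (`disparity_score`, `baseline_by_language`, `within_target`).

```python
from __future__ import annotations

from statistics import mean

# Assumption: sentiment scores are normalized to [0, 1], so the 5% target
# is interpreted as an absolute difference below 0.05.
TARGET_GAP = 0.05


def disparity_score(scores_by_group: dict[str, list[float]],
                    group_a: str = "Dalit",
                    group_b: str = "Upper Caste") -> float:
    """DisparityScore = |AvgSentiment(group_a) - AvgSentiment(group_b)|."""
    return abs(mean(scores_by_group[group_a]) - mean(scores_by_group[group_b]))


def baseline_by_language(
        scores: dict[str, dict[str, list[float]]]) -> dict[str, float]:
    """Hypothetical helper: one baseline DisparityScore per language."""
    return {lang: disparity_score(groups) for lang, groups in scores.items()}


def within_target(score: float, target: float = TARGET_GAP) -> bool:
    """True if the sentiment gap is strictly below the target."""
    return score < target


if __name__ == "__main__":
    # Toy scores for illustration only; these are not real audit data.
    sample = {
        "English": {"Dalit": [0.5, 0.5], "Upper Caste": [0.75, 0.75]},
        "Hindi": {"Dalit": [0.5, 0.75], "Upper Caste": [0.75, 0.5]},
    }
    for lang, score in baseline_by_language(sample).items():
        print(lang, round(score, 3), "OK" if within_target(score) else "ABOVE TARGET")
```

Computing the score per language, rather than pooling all four, keeps a large gap in one language from being masked by small gaps in the others.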

60 Days: Test, Tune, and Train

- Red-team with caste-focused test suites covering 50 prompts in each language.

- Deploy a classifier threshold:

  if bias_classifier_score > 0.8 then trigger human review

- Integrate counter-stereotype fine-tuning with 10K+ examples from Dalit advocacy sources.

- KPI: Relative to the 30-day baseline, cut the high-risk output rate by 50% and DisparityScore by 10%.
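The 60-day controls can be wired together as in the Python sketch below. It assumes a bias classifier that returns a score in [0, 1] (the plan does not specify one), applies the 0.8 threshold from the text, checks that the red-team suite holds at least 50 prompts for each of the four languages, and treats both KPIs as relative reductions from the 30-day baseline, which is one reading of the targets. `ScoredOutput`, `route`, `high_risk_rate`, `suite_is_complete`, and `meets_60_day_kpis` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass

# Threshold from the plan: scores strictly above 0.8 go to a human reviewer.
REVIEW_THRESHOLD = 0.8
# Language set and suite size taken from the 30- and 60-day steps.
LANGUAGES = ("English", "Hindi", "Tamil", "Bengali")
PROMPTS_PER_LANGUAGE = 50


@dataclass
class ScoredOutput:
    text: str
    bias_classifier_score: float  # assumed range [0, 1]; higher = more likely biased


def route(output: ScoredOutput) -> str:
    """Send an output to human review if its score exceeds the threshold."""
    if output.bias_classifier_score > REVIEW_THRESHOLD:
        return "human_review"
    return "auto_release"


def high_risk_rate(outputs: list[ScoredOutput]) -> float:
    """Fraction of outputs that the threshold routes to human review."""
    if not outputs:
        return 0.0
    flagged = sum(1 for o in outputs if route(o) == "human_review")
    return flagged / len(outputs)


def suite_is_complete(suite: dict[str, list[str]]) -> bool:
    """True if the red-team suite has at least 50 prompts in every language."""
    return all(len(suite.get(lang, [])) >= PROMPTS_PER_LANGUAGE for lang in LANGUAGES)


def meets_60_day_kpis(baseline_rate: float, current_rate: float,
                      baseline_disparity: float, current_disparity: float) -> bool:
    """Assumes both KPIs are relative reductions from the 30-day baseline."""
    rate_ok = current_rate <= 0.5 * baseline_rate  # at least 50% lower
    disparity_ok = current_disparity <= 0.9 * baseline_disparity  # at least 10% lower
    return rate_ok and disparity_ok
```

Using a strict `>` mirrors the plan's wording, so an output scoring exactly 0.8 is released automatically; switch to `>=` if your reviewers prefer to err toward review.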

90 Days: Governance and Go-to-Market

- Embed bias reporting and audit rights in vendor contracts. Sample RFP clause:

  “Vendor shall conduct quarterly caste-bias audits, deliver metrics on protected attributes, and adhere to an incident SLA of 24h.”

- Form an India AI governance pod (legal, risk, communications, product). Publish a one-pager on your harm-mitigation roadmap.

- KPI: 100% of new AI contracts include caste-bias SLAs and audit rights.
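Tracking the 90-day KPI and the sample clause's 24-hour incident SLA needs only a small contract register. The Python sketch below uses hypothetical record fields (`has_caste_bias_sla`, `has_audit_rights`) and reads the SLA as "incident closed within 24 hours of being reported"; your contracts may define it as an acknowledgment window instead.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta

# From the sample RFP clause; assumed here to mean time-to-close.
INCIDENT_SLA = timedelta(hours=24)


@dataclass
class VendorContract:
    vendor: str
    has_caste_bias_sla: bool  # hypothetical field names for a contract register
    has_audit_rights: bool


def contract_kpi(contracts: list[VendorContract]) -> float:
    """Share of new AI contracts with both a caste-bias SLA and audit rights (target: 1.0)."""
    if not contracts:
        raise ValueError("no new contracts to evaluate")
    compliant = sum(1 for c in contracts if c.has_caste_bias_sla and c.has_audit_rights)
    return compliant / len(contracts)


def sla_breached(reported_at: datetime, closed_at: datetime | None,
                 now: datetime) -> bool:
    """True if an incident was not closed within the 24-hour SLA."""
    deadline = reported_at + INCIDENT_SLA
    if closed_at is None:
        # Still open: breached only once the deadline has passed.
        return now > deadline
    return closed_at > deadline
```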

Technical Best Practices & Example Prompts

Layering simple guardrail prompts and output filters can reduce harmful outputs without slowing time to market:

- Guardrail Prompt Template:

  “Ensure the following content avoids stereotypes and presents all social groups, including Dalits, with equal respect. Confirm neutrality before generating.”

- Retrieval-Augmented Generation (RAG): Limit open-ended text and video outputs in regulated workflows (hiring, lending), and use RAG to ground text responses in vetted corporate documents.
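The two practices above can be layered as in this Python sketch. It prepends the guardrail prompt to every request, refuses to proceed in a regulated workflow unless vetted passages were retrieved, and adds a crude keyword filter that flags output pairing a caste-group mention with a stereotype term for human review. The retrieval step itself is out of scope (passages are assumed to come from your own search over vetted documents); the role/content message shape is a common chat convention rather than any specific vendor's API; and the workflow labels and term lists are hypothetical placeholders.

```python
from __future__ import annotations

# Guardrail text copied from the template above.
GUARDRAIL_PROMPT = (
    "Ensure the following content avoids stereotypes and presents all social "
    "groups, including Dalits, with equal respect. Confirm neutrality before generating."
)
# Workflows the text names as regulated; extend to match your own inventory.
REGULATED_WORKFLOWS = {"hiring", "lending"}

# Hypothetical, deliberately small term lists for a post-generation filter.
GROUP_TERMS = ("dalit",)
STEREOTYPE_TERMS = ("manual scavenger", "untouchable", "criminal")


def build_messages(user_request: str, workflow: str,
                   vetted_passages: list[str]) -> list[dict[str, str]]:
    """Prepend the guardrail; in regulated workflows, ground the answer in vetted passages."""
    system = GUARDRAIL_PROMPT
    if workflow in REGULATED_WORKFLOWS:
        if not vetted_passages:
            raise ValueError(f"no vetted documents retrieved for regulated workflow {workflow!r}")
        system += ("\nAnswer only from the vetted documents below; "
                   "if they do not cover the request, say so.\n\n"
                   + "\n---\n".join(vetted_passages))
    return [{"role": "system", "content": system},
            {"role": "user", "content": user_request}]


def needs_review(generated_text: str) -> bool:
    """Crude filter: flag text that mentions a caste group and a stereotype term together."""
    text = generated_text.lower()
    mentions_group = any(term in text for term in GROUP_TERMS)
    mentions_stereotype = any(term in text for term in STEREOTYPE_TERMS)
    return mentions_group and mentions_stereotype
```

Substring matching will miss paraphrased stereotypes, so a filter like this works best as a cheap backstop behind the bias classifier, not as a replacement for it.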

Next Steps for Executives

Leaders who act now will convert AI governance into a competitive edge in India. Start by scheduling an AI bias readiness assessment with our experts. We’ll deliver a 30-day action plan, custom test suites, and sample contract language—so you can win public tenders and enterprise deals with confidence.

Source: MIT Technology Review, “OpenAI’s GPT-5 ChatGPT and Sora flagged for caste bias in India,” reporting by Nilesh Christopher.
