THE CASE: A 12-Point Scorecard For An Unruly Technology

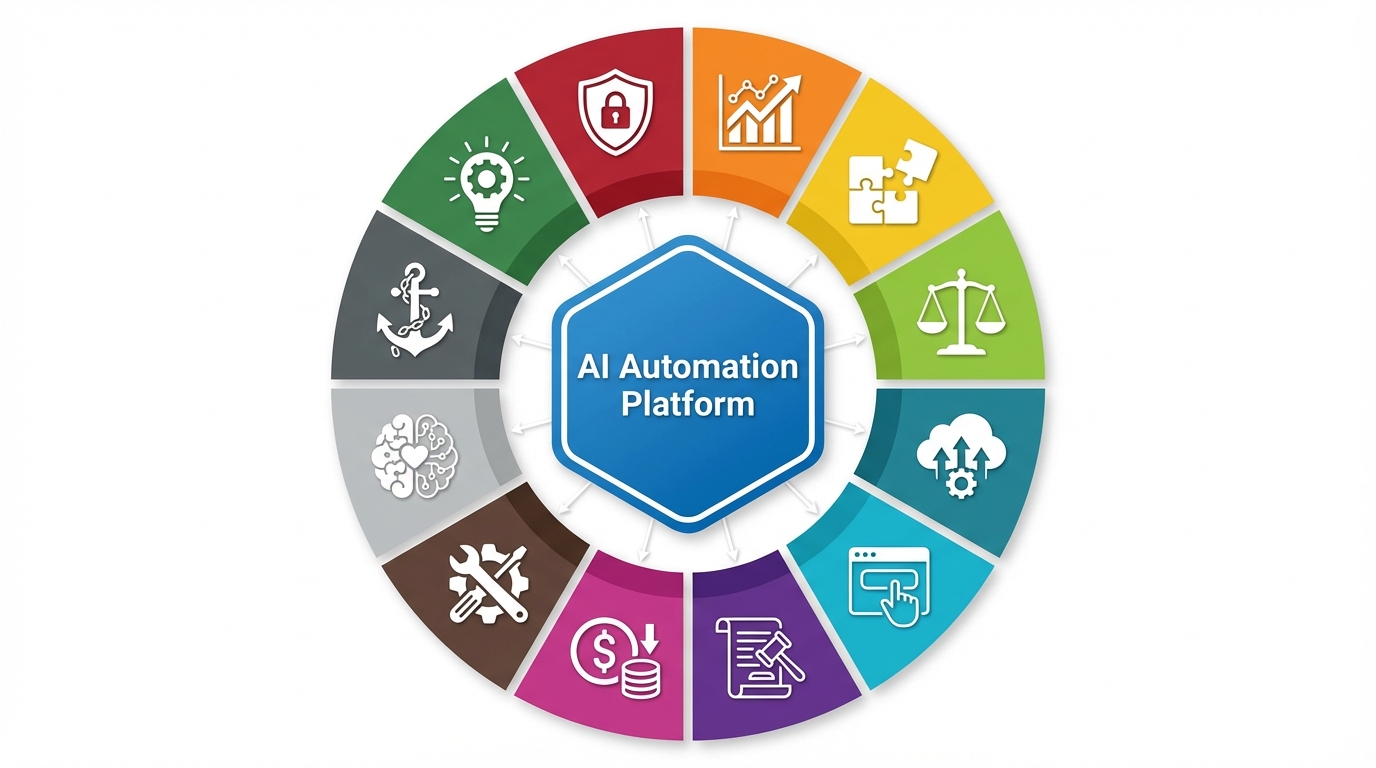

By 2026, enterprise AI automation had moved from pilots to board-level commitments. CIOs and procurement teams were no longer asking whether to adopt AI platforms, but which ones could handle real workloads without blowing up cost, security, or reputations. In that context, an evaluation artifact like the “12-criteria checklist for enterprise AI automation platforms” emerged as a template for how large organizations now buy AI.

The checklist takes an inherently messy decision – choosing among platforms like Kore.ai, Vellum AI, Microsoft Power Automate, AWS Bedrock, Google Vertex AI, Celigo, and others – and compresses it into twelve standardized dimensions. Each dimension is quantified on a 1-10 scale. Buyers are told that scores above 90/120 indicate “production readiness” for Fortune 1000 deployments and that proof must come from demos, SLAs, and third-party audits.

The criteria span the full stack: end-to-end lifecycle management, multi-agent orchestration at 10,000+ concurrent sessions, governance and observability, model and cloud agnosticism, 1,000+ prebuilt connectors, low-code plus SDKs, RAG and tools, security and privacy controls, hard performance numbers (P99 latency under one second), pricing transparency, vendor ecosystem maturity, and time-to-value (POC in under a week, production in under a month).
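The scoring arithmetic behind such a checklist can be sketched in a few lines. This is a minimal illustration, not the checklist's actual tooling: the criterion identifiers paraphrase the list above, and the function names and data layout are assumptions made for the example. The only facts taken from the text are the twelve dimensions, the 1-10 scale, and the "above 90/120" readiness threshold.

```python
# Criterion identifiers paraphrase the article's list; they are illustrative names.
CRITERIA = [
    "lifecycle_management",
    "multi_agent_orchestration",
    "governance_observability",
    "model_cloud_agnosticism",
    "prebuilt_connectors",
    "low_code_and_sdks",
    "rag_and_tools",
    "security_privacy",
    "performance",
    "pricing_transparency",
    "ecosystem_maturity",
    "time_to_value",
]

MAX_TOTAL = 10 * len(CRITERIA)  # 12 criteria x 10 points = 120
READY_ABOVE = 90                # "scores above 90/120" count as production ready


def total_score(scores: dict[str, int]) -> int:
    """Sum the per-criterion scores after checking that all twelve are present and in range."""
    missing = set(CRITERIA) - set(scores)
    extra = set(scores) - set(CRITERIA)
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)}, unexpected={sorted(extra)}")
    for name, value in scores.items():
        if not 1 <= value <= 10:
            raise ValueError(f"{name} must be scored 1-10, got {value}")
    return sum(scores.values())


def is_production_ready(scores: dict[str, int]) -> bool:
    """Apply the strict 'above 90' threshold to the total."""
    return total_score(scores) > READY_ABOVE


if __name__ == "__main__":
    # Hypothetical vendor scored 8 on every criterion.
    vendor = {name: 8 for name in CRITERIA}
    print(total_score(vendor), "/", MAX_TOTAL, is_production_ready(vendor))
```

Note that a single sum treats all twelve criteria as interchangeable: a weak security score can be offset by a strong connector count, a property the later sections return to.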

Each item is paired with concrete verification moves (“simulate 1,000 sessions/hour,” “run 10,000 inferences,” “build a multi-step agent in 30 minutes”) and explicit red flags (lock-in to a single cloud, lack of drift detection, no public benchmarks). The result is a playbook that turns a political, technical, and strategic decision into a defensible spreadsheet – one that procurement, security, and engineering can all read the same way.
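To make one of these verification moves concrete, here is a rough sketch of how a buyer might check a "P99 latency under one second" claim against latencies recorded during a 10,000-inference run. The nearest-rank percentile definition, the function names, and the sample data are assumptions chosen for illustration; the checklist itself does not specify how P99 should be computed.

```python
import math


def percentile_nearest_rank(samples: list[float], pct: int) -> float:
    """Return the pct-th percentile using the nearest-rank method (1-based rank)."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # smallest rank covering pct% of samples
    return ordered[rank - 1]


def meets_p99_target(latencies_s: list[float], target_s: float = 1.0) -> bool:
    """True if the 99th-percentile latency is strictly under the target."""
    return percentile_nearest_rank(latencies_s, 99) < target_s


if __name__ == "__main__":
    # Hypothetical run: 9,900 fast responses and 100 slow ones.
    run = [0.1] * 9900 + [2.0] * 100
    print(percentile_nearest_rank(run, 99), meets_p99_target(run))
```

In this made-up run the slowest 1% of requests take two seconds, yet the P99 check still passes, because the percentile only looks at the value at the 99% boundary. One more slow request would flip the result, which shows how sensitive a hard threshold can be to the test scenario.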

THE PATTERN: When Procurement Becomes The Primary Interface To AI

The 12-criteria checklist is not simply a convenient buyer tool; it is a visible instance of a deeper structural shift: enterprise AI is increasingly mediated not through builders or researchers, but through procurement and governance apparatus. As the category matures and the downside risk of failures grows, organizations pull AI into the same gravitational field that already shaped ERP, CRM, and cloud infrastructure buying.

That gravitational pull does several things at once. First, it translates technical uncertainty into standardized, quasi-objective numbers: 10k+ concurrent sessions, 99.99% uptime, P99 < 1 second, 1,000+ connectors, 90/120 readiness thresholds. These figures become the lingua franca between engineering leads, CISOs, CFOs, and legal, allowing decisions to be justified in committee and audited later.

Second, it encourages convergence. Once criteria such as “model and cloud agnosticism,” “multi-agent orchestration,” and “low-code plus SDKs” are canonized as must-haves, they stop being differentiators and become table stakes. Vendors from Kore.ai to Vertex AI to Bedrock end up promising roughly the same surface features, competing less on whether they support a capability and more on whose implementation appears safer, cheaper, or more integrated.

Third, it redistributes power. A scoring template that expects 1,000+ connectors, global uptime SLAs, and full SOC 2, GDPR, and HIPAA compliance structurally favors incumbents and well-capitalized platforms. Startups with novel capabilities but incomplete compliance or limited connector libraries are screened out early, not for lack of value but for failure to map neatly to the rubric. Risk-averse enterprises prefer “good enough and auditable” over “innovative but idiosyncratic.”

Finally, the checklist externalizes expertise. The knowledge of how to evaluate AI automation — model behavior, observability, RAG quality, cost dynamics — is embedded in a portable document that can be copied into RFPs and governance playbooks. This allows organizations with limited in-house AI depth to act like sophisticated buyers. It also catalyzes a cottage industry: analysts, consultants, and tooling vendors all align around the same dimensions, reinforcing them as “the way” to think about enterprise AI platforms.

In short, the pattern is procurement-first institutionalization: AI moves from experimental projects driven by local champions to standardized platforms purchased via templates, and those templates begin to steer the evolution of the platforms themselves.

THE MECHANICS: Metrics, Incentives, And Feedback Loops

Underneath the checklist are overlapping incentive systems that make this structure resilient.

Organizational risk management. Large enterprises face reputational, regulatory, and operational hazards from AI gone wrong: hallucinations in customer service, biased decisions in HR flows, data leaks from misconfigured connectors. A quantified checklist lets leaders demonstrate due diligence: every criterion was evaluated, every red flag considered, every audit reviewed. If a deployment fails, the process, not the individual, can be defended.

Coordination across silos. An AI automation platform touches legal (data usage), security (access, encryption, identity integration), finance (TCO, pricing models), operations (SLAs), and product/IT (integrations, extensibility). The 12-criteria structure maps cleanly onto these stakeholders. Governance and observability speak to security and compliance; connectors and performance speak to operations; pricing and time-to-value speak to finance. A shared rubric functions as a coordination protocol for cross-functional buying committees.

Vendor roadmap alignment. Once buyers start writing “multi-agent orchestration,” “RAG with tools and memory,” or “POC in <1 week, prod in <1 month” into RFPs, vendors quickly reorganize roadmaps to match. Even capabilities that are nascent or rarely used in practice get prominent marketing and a minimally viable implementation, because missing a checkbox can disqualify a vendor before technical teams even test the product.

This produces a Goodhart-style dynamic: as soon as metrics like 10k+ concurrent sessions or 1,000+ connectors become decision thresholds, suppliers optimize for passing those thresholds, not necessarily for delivering resilient value under the customer’s specific constraints. Load tests are tuned for benchmark scenarios; connectors proliferate even if many are shallow; multi-cloud support is advertised even when only one cloud path is battle-tested.

Consultant and analyst amplification. Analyst firms and systems integrators benefit from repeatable frameworks. A 12-point rubric can be wrapped into paid assessments, maturity models, and transformation roadmaps. These firms, in turn, publish “top platforms” reports that echo the same dimensions, citing leaders like Kore.ai for governance or Vellum AI for lifecycle tooling. This gives the checklist a kind of soft standard status: it is everywhere, so it must be right.

Tooling reinforcement. Observability providers, evaluation platforms, and AIOps tools then productize around exactly the constructs the checklist highlights: eval harnesses to test RAG quality, dashboards for P99 latency, governance consoles for audit trails and RBAC. Once a capability is instrumented and reportable, it becomes easier for buyers to demand it and for vendors to claim compliance, closing a feedback loop between measurement and product shape.

Internal budgeting behavior. Finally, budget gates often require numerical justification. A platform that can be scored 96/120 against a widely recognized rubric is easier to push through capital committees than an unscored alternative, even if the latter is better suited to a specific use case. Numerical comparability displaces nuanced fit as the dominant decision variable, especially in large, highly regulated organizations.
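A small worked example shows how a flat total can diverge from use-case fit. Everything here is hypothetical: the two platforms, their scores, and the weights are invented for illustration, and use-case weighting is an assumption of this sketch rather than part of the checklist. The only figures taken from the text are the 1-10 scale and the 96/120 total.

```python
# All scores and weights below are invented for illustration only.
CRITERIA = [
    "lifecycle_management", "multi_agent_orchestration", "governance_observability",
    "model_cloud_agnosticism", "prebuilt_connectors", "low_code_and_sdks",
    "rag_and_tools", "security_privacy", "performance",
    "pricing_transparency", "ecosystem_maturity", "time_to_value",
]

# Hypothetical generalist: a uniform 8 on everything, total 96/120.
generalist = {name: 8 for name in CRITERIA}

# Hypothetical specialist: strong where a regulated RAG use case needs it, weaker elsewhere.
specialist = {
    "lifecycle_management": 7, "multi_agent_orchestration": 6,
    "governance_observability": 10, "model_cloud_agnosticism": 6,
    "prebuilt_connectors": 4, "low_code_and_sdks": 6,
    "rag_and_tools": 10, "security_privacy": 10, "performance": 9,
    "pricing_transparency": 7, "ecosystem_maturity": 5, "time_to_value": 7,
}

# Hypothetical weights for one specific use case; unlisted criteria default to 1.
use_case_weights = {
    "governance_observability": 5, "rag_and_tools": 5,
    "security_privacy": 5, "performance": 3,
}


def flat_total(scores: dict[str, int]) -> int:
    """Unweighted sum out of 120, as in the checklist."""
    return sum(scores[name] for name in CRITERIA)


def weighted_average(scores: dict[str, int], weights: dict[str, int]) -> float:
    """Weighted mean on the 1-10 scale; criteria without a weight count once."""
    total_weight = sum(weights.get(name, 1) for name in CRITERIA)
    weighted_sum = sum(weights.get(name, 1) * scores[name] for name in CRITERIA)
    return weighted_sum / total_weight


if __name__ == "__main__":
    for label, scores in (("generalist", generalist), ("specialist", specialist)):
        print(label, flat_total(scores), round(weighted_average(scores, use_case_weights), 2))
```

On the flat total the generalist clears the 90-point bar and the specialist does not, yet under the use-case weights the ranking reverses. A budget committee that sees only the flat total never encounters the second comparison.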

Combined, these mechanisms make the checklist not just descriptive of what matters in AI automation, but constitutive of it: what cannot be scored struggles to be bought, and what cannot be bought at scale struggles to survive as a platform.

THE IMPLICATIONS: Predictable Futures From A 12-Line Table

Understanding this pattern makes several trajectories more predictable.

Convergence and consolidation. As checklists crystallize around similar criteria, the space for wildly different platform designs shrinks. Most contenders will claim end-to-end lifecycle, multi-agent orchestration, RAG, strong governance, and multi-cloud support. Differentiation will tilt toward ecosystem depth (partners, connectors, vertical content) and commercial terms rather than fundamentally new interaction paradigms. This favors vendors already serving hundreds of large customers.

Metric gaming and compliance theater. Because what gets scored gets sold, we can expect sophisticated gaming. Benchmarks will be run under narrow, vendor-friendly conditions; observability features will exist but remain underused; governance dashboards will be present mainly to satisfy audits. Real robustness will vary more than the apparent 1-10 scores suggest.

Standardization and regulation. As these evaluation rubrics propagate into RFPs and internal policies, regulators and industry bodies are likely to lift them into formal guidelines. Criteria like “model and cloud agnosticism,” “bias and drift detection,” or “data residency controls” will migrate from best practice to regulatory expectation, especially in finance, healthcare, and public sector deployments.

Second-order criteria. Once the first wave of checkboxes is normalized, a new layer will emerge around areas the current list only gestures at: incident response for AI failures, supply-chain security for models and datasets, long-term alignment and safety guarantees, and environmental or energy footprints. Future scorecards may extend beyond 12 points, but they will follow the same logic: translate ambiguous societal concerns into measurable, RFP-able numbers.

Most importantly, the rise of templates like the 12-criteria checklist means that the frontier of innovation in enterprise AI will lie partly in escaping or reshaping these structures — either by creating platforms that can be safely evaluated on new dimensions, or by building meta-tools that help organizations see beyond the spreadsheet while still satisfying its institutional demands.
