The Case

By early 2026, a Fortune 200 services firm evaluated its generative AI rollout for 1,000 internal agents and tens of thousands of customers. The shortlist featured familiar single-provider options—Google Vertex AI, IBM Watson Machine Learning, and Microsoft Copilot Studio—each promising deep integration with existing clouds and productivity suites, 99.9–99.99% SLAs, and outcome-based contracts that blurred raw token charges.

On paper, these all-in-one platforms showed similar total cost of ownership (TCO). Annual fees—including minimum platform commitments and “all-you-can-eat” token tiers—landed in the $500K–$900K range for 1,000 active users. After negotiated discounts, inference costs sat in the $3–10 per 1M-token band. Outcome-based pricing often obscured the true unit economics, making line-item comparisons difficult.

Procurement then added a less conventional contender: a composite stack built on SiliconFlow for inference routing, Kore.ai for orchestration, and Vellum/Gumloop for evaluation. This multi-model approach directed each API call to GPT-4o, Claude 3.5 Sonnet, Gemini 2.0, LLaMA 3.1, or Mixtral based on task complexity, latency targets, and per-model pricing. Pay-as-you-go inference ranged from roughly $0.10 to $2.00 per 1M tokens across providers, with a blended pilot rate closer to $0.50–1.50. Platform fees ran in the low thousands of dollars per month, not the tens of thousands.

In a 60-day pilot, the composite stack handled routine FAQs and translations via Mixtral, escalated ambiguous queries to Claude, and routed sensitive data to privately hosted LLaMA instances on Hugging Face. Quality metrics matched or exceeded single-provider baselines. Illustrative TCO calculations, based on the pilot’s traffic-mix assumptions and typical vendor discount tiers, suggested about $250K per year versus $500K–$1M on monolithic platforms, pointing to a 40–60% TCO reduction. The stack’s unified audit trail also eased compliance.
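The pilot's routing rules amount to a short, ordered policy. Below is a minimal Python sketch of that policy, assuming an upstream classifier has already tagged each request. The `Request` fields and the model labels are hypothetical names for illustration, and checking sensitivity before ambiguity is an assumption about precedence, not something the pilot description states.

```python
from dataclasses import dataclass

# Hypothetical request descriptor; field names are illustrative, not a vendor schema.
@dataclass
class Request:
    task: str         # e.g. "faq", "translation", "contract_review"
    ambiguous: bool   # flagged by an upstream classifier (assumed to exist)
    sensitive: bool   # request carries regulated or confidential data

def pick_model(req: Request) -> str:
    """Ordered routing policy mirroring the pilot description (model labels are hypothetical)."""
    # 1. Sensitive data stays on privately hosted LLaMA, regardless of task.
    if req.sensitive:
        return "llama-3.1-private"
    # 2. Ambiguous queries escalate to Claude.
    if req.ambiguous:
        return "claude-3.5-sonnet"
    # 3. Routine FAQs and translations go to low-cost Mixtral.
    if req.task in {"faq", "translation"}:
        return "mixtral"
    # 4. Assumed default for anything else: the stronger model.
    return "claude-3.5-sonnet"

print(pick_model(Request(task="faq", ambiguous=False, sensitive=False)))
```

Putting the sensitivity check first means a query that is both sensitive and ambiguous stays on private infrastructure instead of escalating to a hosted model.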

The firm’s final architecture married multi-model routing for general workloads with a scaled-down Vertex AI footprint for deeply Google-centric tasks. Yet the real debate had shifted: it was no longer just “Which model is best?” but “Which layer controls routing, lock-in, and economics?”

The Pattern

The 2026 showdown between multi-model routers and single-vendor stacks reveals a fundamental structural shift. Power in enterprise AI is migrating away from frontier-model creators toward intermediary routing layers that arbitrate between models and enterprises.

Early foundation model providers—Anthropic, OpenAI, Google, Mistral, Meta—appeared destined to be the ultimate gatekeepers. They controlled the scarce asset (frontier models) and extended influence through enterprise platforms offering SLAs, data pipelines, monitoring, and fine-tuning. Yet these vertically integrated stacks typically priced throughput at 2–3× the open-market rate for equivalent performance.

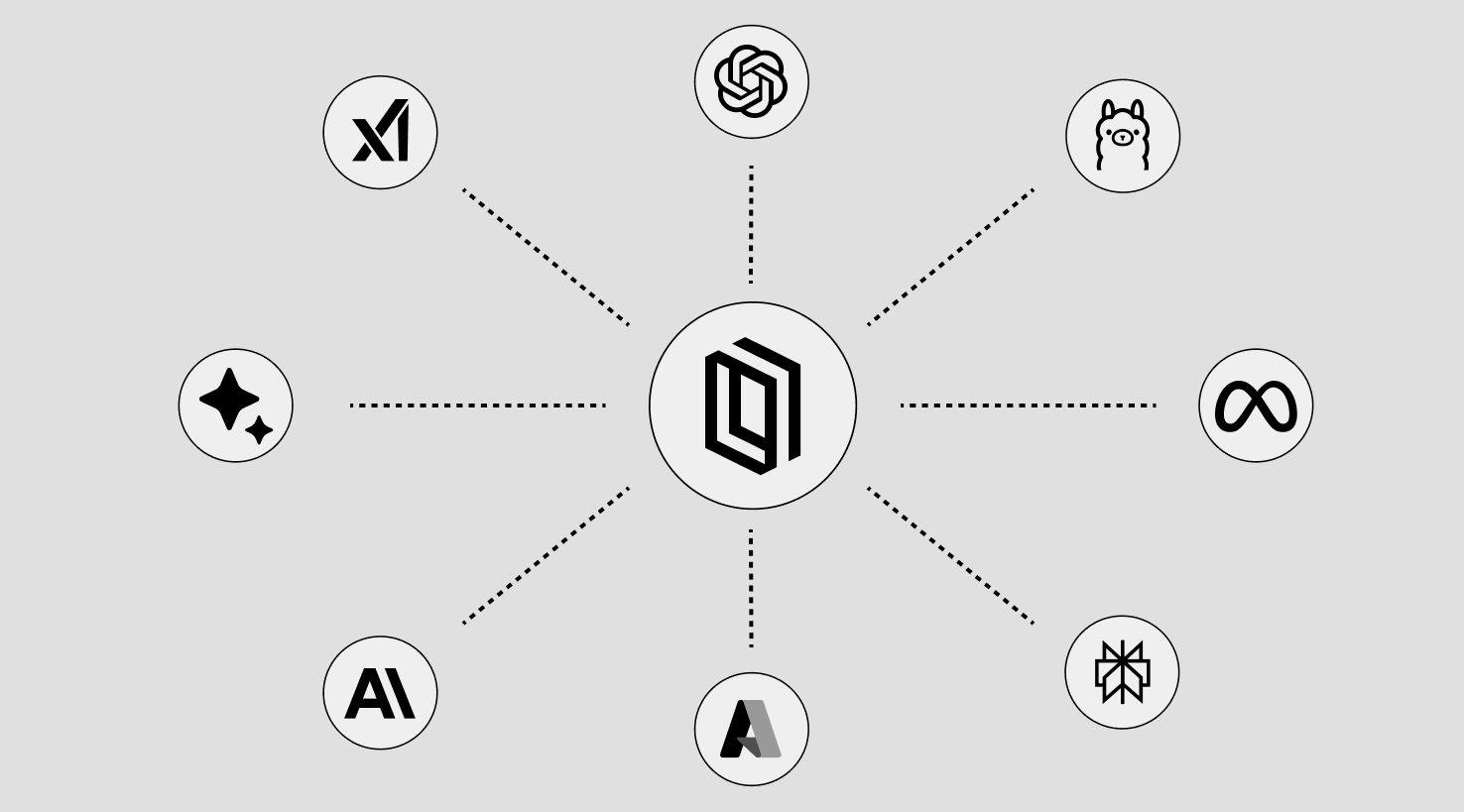

In contrast, multi-model platforms such as SiliconFlow, Kore.ai, Vellum AI, Gumloop, Parallel AI, and Hugging Face Enterprise place their structural bet on model plurality and enterprise flexibility. They standardize prompts, unify observability and security, and abstract over differences among GPT-4o, Claude 3.5 Sonnet, Gemini 2.0, LLaMA 3.1, Mixtral, and dozens of variants. Once that abstraction is in place, tokens become economically interchangeable.
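The abstraction these platforms provide can be pictured as one common interface that every provider adapter implements, plus a gateway that records every call the same way. This is a minimal sketch, not any vendor's API: `ModelBackend`, `EchoBackend`, and `Gateway` are hypothetical names, and `EchoBackend` stands in for an adapter that would wrap a real provider SDK.

```python
import time
from dataclasses import dataclass, field
from typing import Protocol

class ModelBackend(Protocol):
    """Hypothetical interface that every provider adapter implements."""
    name: str

    def complete(self, prompt: str) -> str: ...

@dataclass
class EchoBackend:
    """Stand-in adapter; a real one would wrap a provider's SDK."""
    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"

@dataclass
class Gateway:
    """Single entry point: callers name a model, the gateway handles the rest."""
    backends: dict[str, ModelBackend]
    log: list[dict] = field(default_factory=list)

    def call(self, model: str, prompt: str) -> str:
        start = time.perf_counter()
        output = self.backends[model].complete(prompt)
        # Identical observability record whichever provider served the call.
        self.log.append({
            "model": model,
            "latency_s": time.perf_counter() - start,
            "prompt_chars": len(prompt),
        })
        return output

gw = Gateway({"mixtral": EchoBackend("mixtral"), "gpt-4o": EchoBackend("gpt-4o")})
print(gw.call("mixtral", "Summarize this ticket"))
```

Because callers only ever see `Gateway.call`, changing which model serves a given name is a configuration change rather than a code change, which is the sense in which tokens become interchangeable.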

In practice, a given request can hit any model deemed “good enough” against a defined quality threshold, with the router choosing the lowest-cost provider that meets it. Observed inference pricing in pilot bids fell in the $0.10–2.00 per 1M-token range, compared to the $3–10 band typically embedded in single-vendor proposals.
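The selection rule described here, the cheapest model that clears a quality bar, fits in a few lines. In this sketch the Mixtral and Claude prices echo figures quoted elsewhere in this piece and all prices sit inside the $0.10–2.00 range, but the other price assignments and every quality score are placeholders chosen only to exercise the logic, not measured results. `ModelOption` and `choose_model` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class ModelOption:
    name: str
    usd_per_1m_tokens: float  # illustrative price
    quality: float            # placeholder eval score on an assumed 0..1 scale

def choose_model(options: list[ModelOption], min_quality: float) -> Optional[ModelOption]:
    """Return the cheapest option whose quality meets the threshold, or None."""
    eligible = [m for m in options if m.quality >= min_quality]
    if not eligible:
        return None  # caller decides whether to relax the bar, queue, or fail
    return min(eligible, key=lambda m: m.usd_per_1m_tokens)

# Placeholder catalog: scores are made up to exercise the logic.
CATALOG = [
    ModelOption("mixtral", 0.10, 0.78),
    ModelOption("llama-3.1", 0.30, 0.82),
    ModelOption("claude-3.5-sonnet", 1.50, 0.93),
    ModelOption("gpt-4o", 2.00, 0.92),
]

print(choose_model(CATALOG, 0.75).name)
print(choose_model(CATALOG, 0.90).name)
```

With a bar of 0.75 the sketch picks `mixtral`; raising it to 0.90 selects `claude-3.5-sonnet`, the cheaper of the two options that qualify.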

This intermediary-driven dynamic mirrors other industries. Online travel agencies commoditized flights and hotels, reducing supplier pricing power. Payment gateways aggregated banks behind unified APIs. CDNs sat between content producers and ISPs. In each case, intermediaries that control routing and aggregation captured margin growth while core producers became interchangeable, even if technically differentiated.

Within AI, market share is also fragmenting under this pressure. By some estimates, Anthropic may hold roughly 40% of safety-focused reasoning workloads, while Google may claim about 21% of data-integrated AI workloads. Yet as routing layers normalize cross-provider arbitration, the crucial question becomes: which layer owns the enterprise relationship and controls spend?

Single-vendor stacks respond by doubling down on ecosystem lock-in: Gemini embedded in Google Workspace, Copilot woven into Microsoft 365, Watson anchored in regulated data estates. These players rely on deep integration to justify a 2–3× premium for equivalent throughput, offering single-vendor accountability and tighter SLAs in exchange. Multi-model vendors, meanwhile, sell a neutral substrate that keeps models and clouds interchangeable under a single control plane for governance and spend.

Viewed this way, the true contest is not Claude versus GPT-4o or Gemini versus LLaMA. It is between vertically integrated platforms internalizing routing and horizontally oriented meta-platforms commoditizing model supply.

The Mechanics

The multi-model advantage rests on three interlocking mechanisms: price arbitrage, risk diversification, and governance consolidation.

Price arbitrage. With connections to dozens of model providers, routers treat each request as a micro-auction. Simple classification or summarization might run on a Mistral or Mixtral open model at $0.10–0.50 per 1M tokens, while complex reasoning calls can go to Claude or GPT-4o at $1–3 per 1M tokens. Over millions of calls, blended rates observed in pilots hovered around $0.50–1.50 per 1M tokens, versus the $3–10 per 1M tokens common in single-vendor enterprise deals.

For example, suppose a customer-facing agent workload routes 70% of traffic to Mixtral at about $0.10 per 1M tokens and 30% to Claude at $1.50. The blended rate is 0.7 × $0.10 + 0.3 × $1.50 = $0.52 per 1M tokens. At 1M tokens/day (about 30M tokens/month), that is only about $16 per month, so the difference matters at scale: at roughly 400M tokens/month, the same mix costs in the $200 range, versus about $1,200 at a $3 per 1M single-provider rate, the low end of typical tiered pricing. These illustrative calculations assume the pilot’s traffic mix, model-selection percentages, and negotiated discounts. Projected annually across 1,000 seats, and combined with lower platform fees, this is what drives the familiar 40–60% TCO gap.
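The arithmetic in this example is easy to check. The sketch below assumes the 70/30 Mixtral/Claude split and per-1M-token prices from the text, and takes $3.00 per 1M tokens (the low end of the $3–10 band) as the single-vendor comparison rate. It evaluates two volumes, about 1M tokens/day and about 400M tokens/month, because the $200 versus $1,200 figures only appear at the larger volume. `blended_rate` and `monthly_cost` are hypothetical helper names.

```python
def blended_rate(mix: dict[str, tuple[float, float]]) -> float:
    """Traffic-weighted price per 1M tokens. mix maps model -> (traffic share, USD per 1M tokens)."""
    if abs(sum(share for share, _ in mix.values()) - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    return sum(share * price for share, price in mix.values())

def monthly_cost(tokens_per_month: float, usd_per_1m: float) -> float:
    return tokens_per_month / 1_000_000 * usd_per_1m

mix = {"mixtral": (0.70, 0.10), "claude": (0.30, 1.50)}
rate = blended_rate(mix)   # 0.7 * 0.10 + 0.3 * 1.50 = 0.52 USD per 1M tokens
SINGLE_VENDOR_RATE = 3.00  # assumed: low end of the $3-10 band

for tokens in (30_000_000, 400_000_000):  # ~1M/day vs. ~13M/day
    routed = monthly_cost(tokens, rate)
    single = monthly_cost(tokens, SINGLE_VENDOR_RATE)
    print(f"{tokens / 1e6:.0f}M tokens/month: routed ${routed:,.0f} vs single-vendor ${single:,.0f}")
```

At 30M tokens/month this gives about $16 versus $90; at 400M tokens/month, about $208 versus $1,200. The roughly 6× ratio holds at any volume; only the absolute savings grow.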

Routers earn a thin margin on volume, which gives them a strong incentive to invest in live-traffic A/B testing. Tools like Gumloop’s evaluators and Kore.ai’s performance monitors compare models continuously and adjust routing to uphold quality while minimizing spend.
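Continuous comparison can be approximated with a simple epsilon-greedy loop: most traffic goes to the cheapest model whose running quality score clears the bar, while a small random slice keeps testing the alternatives. This is a generic technique swapped in for illustration, not a description of how Gumloop or Kore.ai work internally. The class names, the 0.85 threshold, the 5% exploration share, and the 0.8 starting score are all assumptions.

```python
import random
from dataclasses import dataclass, field

@dataclass
class ModelStats:
    price: float          # USD per 1M tokens (illustrative)
    quality: float = 0.8  # assumed prior, replaced by the first real score
    n: int = 0            # number of evaluator scores seen so far

@dataclass
class AdaptiveRouter:
    models: dict[str, ModelStats]
    min_quality: float = 0.85   # assumed quality bar
    explore: float = 0.05       # assumed share of traffic spent testing alternatives
    rng: random.Random = field(default_factory=lambda: random.Random(0))

    def route(self) -> str:
        # Exploration: a small random slice keeps every model getting scored.
        if self.rng.random() < self.explore:
            return self.rng.choice(list(self.models))
        # Exploitation: cheapest model whose running score clears the bar.
        ok = [name for name, s in self.models.items() if s.quality >= self.min_quality]
        if not ok:
            # Nothing qualifies: fall back to the best-scoring model.
            return max(self.models, key=lambda name: self.models[name].quality)
        return min(ok, key=lambda name: self.models[name].price)

    def record(self, model: str, score: float) -> None:
        """Fold an evaluator score (0..1) into that model's running mean."""
        stats = self.models[model]
        stats.n += 1
        stats.quality += (score - stats.quality) / stats.n
```

In use, each response's evaluator score is passed to `record`, so if the cheap model's running score drops below the bar, `route` starts sending exploit traffic to the next-cheapest model that still qualifies.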

Risk diversification. Outages, rate limits, or policy shifts from any single vendor can disrupt a vertically integrated stack. A router-based architecture treats such failures as simple rerouting events. If Gemini experiences downtime, traffic can shift to Claude or LLaMA in under a second. If data-use terms tighten for healthcare, sensitive workloads can pivot to on-prem or VPC-hosted open models without wholesale replatforming.
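In its simplest form, treating an outage as a rerouting event means trying providers in preference order. A minimal sketch, assuming each provider adapter is a plain callable that raises a hypothetical `ProviderError` on outage, rate limit, or timeout; `complete_with_failover` and the stub providers are likewise illustrative names.

```python
from typing import Callable, Sequence

class ProviderError(Exception):
    """Hypothetical error an adapter raises on outage, rate limit, or timeout."""

def complete_with_failover(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try providers in preference order; return (provider name, output) from the first success."""
    failures: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Note the failure and reroute to the next provider.
            failures.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(failures))

# Example: Gemini is down, so the request lands on Claude.
def gemini(prompt: str) -> str:
    raise ProviderError("503 service unavailable")

def claude(prompt: str) -> str:
    return f"answer to {prompt!r}"

print(complete_with_failover("Where is my order?", [("gemini", gemini), ("claude", claude)]))
```

How quickly this fails over in practice depends mostly on how fast a failing call is detected, so production routers typically pair ordered fallback with short timeouts and health checks rather than waiting for errors to surface.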

This diversification is self-reinforcing: each high-profile outage pushes more enterprises toward intermediary routers, which in turn gather richer performance data to sharpen their arbitrage strategies, further entrenching their advantage.

Governance consolidation. Multiple model providers would seem to imply multiple audit trails, but leading routers package unified RBAC, policy enforcement, and logging across all underlying models. Hybrid deployment patterns (e.g., Hugging Face Enterprise, IBM-style on-prem options) let regulated customers keep sensitive data in VPCs or private infrastructure. The router logs “who accessed what, when, and via which model,” which streamlines GDPR, HIPAA, and SOC 2 audits compared with stitching together logs from each provider. Negotiations for regional data residency or FedRAMP accreditation likewise consolidate around the orchestration contract rather than dozens of individual provider agreements.
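A unified audit trail comes down to one decision point that checks policy and writes the same record for every call, whichever model serves it. The sketch below assumes a hypothetical role-to-data-class policy table and writes JSON lines. `POLICY`, `AuditRecord`, `authorize_and_log`, and data-class labels such as `phi` (protected health information) are illustrative, not any platform's schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

# Hypothetical policy: role -> data class -> models that role may use for that data.
POLICY: dict[str, dict[str, set[str]]] = {
    "support_agent": {"public": {"mixtral", "claude-3.5-sonnet"}, "phi": set()},
    "clinical_staff": {"public": {"mixtral"}, "phi": {"llama-3.1-private"}},
}

@dataclass
class AuditRecord:
    user: str
    role: str
    data_class: str   # e.g. "public" or "phi" (protected health information)
    model: str
    allowed: bool
    timestamp: str    # UTC, ISO 8601

def authorize_and_log(user: str, role: str, data_class: str, model: str, log: list[str]) -> bool:
    """Check the policy, append one JSON audit line, and return the decision."""
    allowed = model in POLICY.get(role, {}).get(data_class, set())
    record = AuditRecord(user, role, data_class, model, allowed,
                         datetime.now(timezone.utc).isoformat())
    # Same record shape for every model, so one log answers "who, what, when, via which model".
    log.append(json.dumps(asdict(record)))
    return allowed

audit_log: list[str] = []
authorize_and_log("ana", "clinical_staff", "phi", "llama-3.1-private", audit_log)  # allowed
authorize_and_log("bo", "support_agent", "phi", "claude-3.5-sonnet", audit_log)    # denied, still logged
print("\n".join(audit_log))
```

Denied requests are logged alongside allowed ones, so the same file shows both what was permitted and what the policy blocked.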

Single-provider platforms counter with their own ecosystem security primitives—Vertex applies Google Cloud IAM and BigQuery lineage, Copilot inherits Azure AD and M365 controls, Watson leverages its enterprise governance pedigree. However, deeper integration often trades away price flexibility and routing freedom, shifting risk from model operations to economic lock-in.

Over time, a second-order effect emerges: routing-layer lock-in. Once an organization encodes agents, evaluators, and cross-session memory into Kore.ai or SiliconFlow, switching routers becomes nontrivial—even if swapping underlying models is technically straightforward. The locus of dependency moves from model vendors to meta-platforms, but the structural logic of platform power remains intact.

The Implications

This pattern offers a diagnostic lens on the next phase of enterprise AI. Routing layers that deliver 40–60% TCO reductions and automatic failover across providers are poised to become default entry points for new deployments. Single-provider platforms will persist where ecosystem depth—M365 productivity flows, Google-centric data estates, or industry-specific Watson tooling—justifies paying a 2–3× premium and accepting tighter lock-in.

Enterprises now face a migration of lock-in rather than its elimination. Committing to a single-vendor stack centralizes bargaining power with that provider. Committing to a multi-model router distributes risk across multiple model vendors but consolidates dependency on the intermediary orchestration layer. Ultimately, the routing layer that controls model selection, spend, and governance becomes the new arbiter of platform power in AI.
