What Changed and Why It Matters

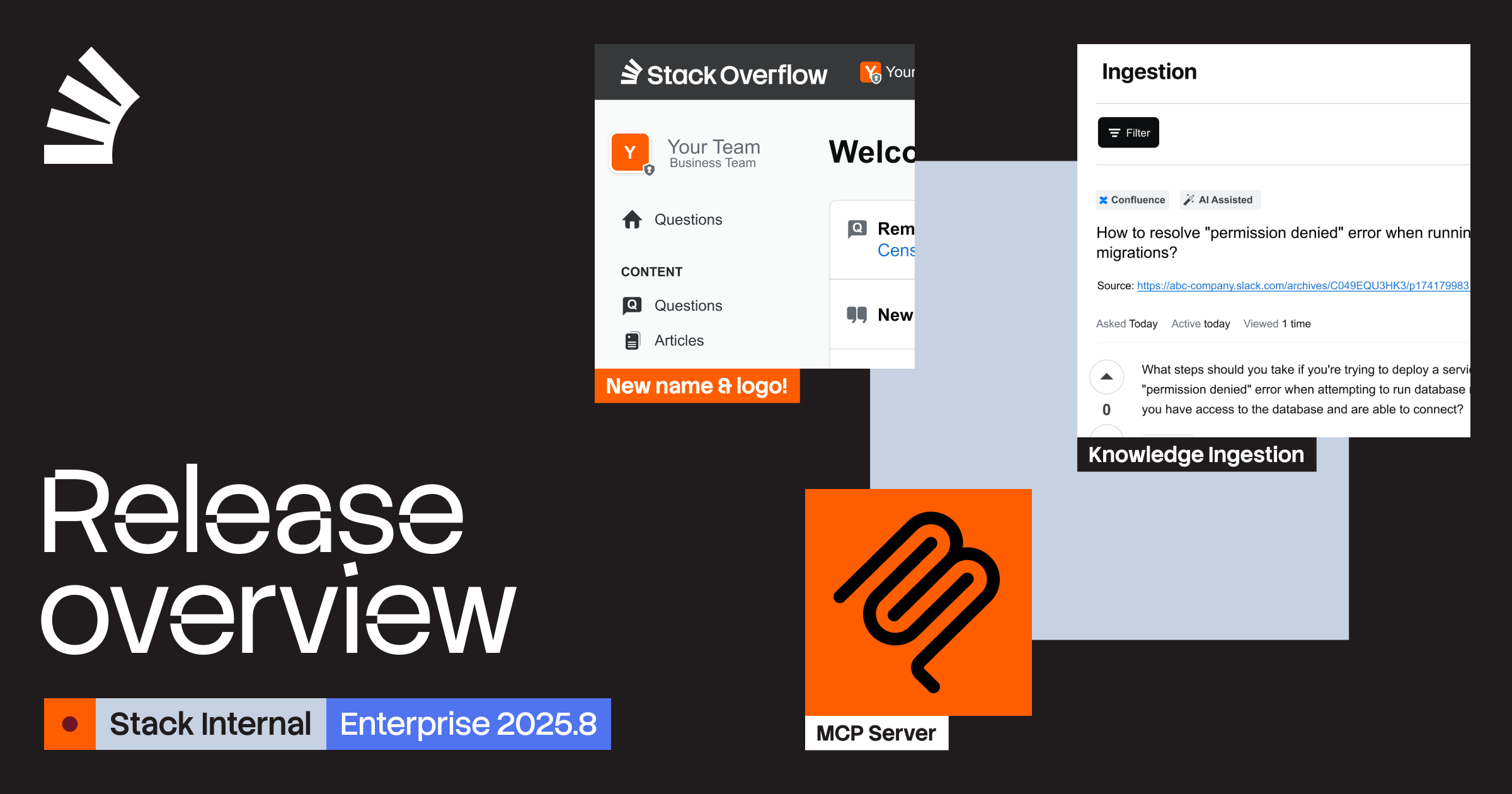

Stack Overflow launched “Stack Overflow Internal,” a secure enterprise version of its Q&A platform designed to feed internal AI assistants. The release adds Model Context Protocol (MCP) server integration, admin controls, metadata exports, reliability scores, and tagging, which together turn hard-won developer knowledge into machine-readable context for copilots and agents. For operators, the impact is practical: safer, auditable AI answers grounded in company-specific documentation rather than generic web data.

This matters because enterprises aren’t struggling to stand up models; they’re struggling to ground them. By packaging curated knowledge, governance, and an open-protocol bridge (MCP) into a deployable product, Stack Overflow is positioning itself as a “knowledge intelligence layer” that shortens time-to-value for AI assistants while tightening compliance and traceability.

Key Takeaways

- Stack Overflow Internal connects verified internal Q&A to AI agents via an MCP server, enabling cited, auditable responses.

- Reliability scores, tagging, and metadata exports make knowledge machine-readable for retrieval and training pipelines.

- On-prem and hybrid options address data residency and governance; admin controls, RBAC, and audit logs are built in.

- Knowledge ingestion and validation workflows reduce the time from scattered docs to AI-ready context.

- Good fit for AI copilots/agents at scale; overlap with Confluence/SharePoint/Notion needs careful consolidation planning.

Breaking Down the Announcement

At the core is an MCP server that lets agentic tools request, retrieve, and cite internal knowledge from Stack Overflow Internal. Stack Overflow says this powers grounded responses in tools like GitHub Copilot, ChatGPT, and Cursor; in practice, confirm client-side support in your environment, as MCP adoption varies by tool. The server can run inside your infrastructure to avoid data egress, a priority for regulated teams.
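MCP is built on JSON-RPC 2.0, and an agent invokes a server-side tool with a `tools/call` request. The minimal sketch below shows the message shape an agent sends and how it could pull text (including a source link to cite) out of the result. It assumes a hypothetical tool named `search_internal_qa` with hypothetical `query` and `limit` arguments; the real server's tool names and argument schemas are not documented here and should be discovered with a `tools/list` request. It also omits the `initialize` handshake and the transport (stdio or Streamable HTTP), which an MCP client library would normally handle.

```python
import json
from itertools import count

# JSON-RPC ids must be unique within a session; a simple counter is enough for a sketch.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


def extract_text(response_json: str) -> list:
    """Return the text blocks from a `tools/call` result, raising on protocol or tool errors."""
    response = json.loads(response_json)
    if "error" in response:
        # JSON-RPC-level failure, e.g. unknown tool or invalid params.
        raise RuntimeError(response["error"].get("message", "unknown JSON-RPC error"))
    result = response["result"]
    if result.get("isError"):
        # The tool ran but reported a failure inside its result.
        raise RuntimeError("tool reported an error")
    return [block["text"] for block in result.get("content", []) if block.get("type") == "text"]


# HYPOTHETICAL tool name and arguments; discover the real ones with a `tools/list` request.
request = build_tool_call(
    "search_internal_qa",
    {"query": "How do we rotate the staging database password?", "limit": 3},
)

# Fabricated sample response, shaped like an MCP tool result, for illustration only.
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "content": [{"type": "text", "text": "Run the rotation job. Source: https://so-internal.example.com/q/101"}],
        "isError": False,
    },
})
print(extract_text(sample_response))
```

Keeping the source URL inside the returned text (or in structured fields, if the real server provides them) is what lets the agent cite its answer rather than paraphrase it unattributed.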

Metadata exports and structured tagging make approved content accessible to retrieval pipelines: think routing Q&A to a vector store or feature store alongside provenance, ownership, and reliability scores. Those scores, plus moderation workflows, are designed to gate what agents can quote and to prioritize canonical answers in retrieval. Stack Overflow also flagged a knowledge-graph roadmap, aiming to encode relationships (services, teams, repos, runbooks) for higher-precision retrieval and disambiguation.
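To make the reliability gate concrete, here is a minimal sketch that filters exported answers before they reach a vector store and keeps provenance attached to each document. The `ExportedAnswer` fields, the 0.0 to 1.0 reliability scale, and the 0.7 threshold are assumptions for illustration, not Stack Overflow's documented export schema; map them to whatever the real export contains.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ExportedAnswer:
    # HYPOTHETICAL export record; map these fields to the real metadata export.
    question_id: int
    title: str
    body: str
    tags: tuple
    owner: Optional[str]   # None when the content has no active owner
    reliability: float     # assumed to be normalized to the range 0.0-1.0
    url: str


def gate_for_retrieval(records, min_reliability=0.7, allowed_tags=None):
    """Return vector-store-ready documents for owned answers above the reliability threshold."""
    documents = []
    for r in records:
        if r.owner is None or r.reliability < min_reliability:
            continue  # unowned or low-reliability content never reaches the index
        if allowed_tags is not None and not set(r.tags) & set(allowed_tags):
            continue  # optional scoping, e.g. to a single pilot domain
        documents.append({
            "id": f"so-internal-{r.question_id}",
            "text": f"{r.title}\n\n{r.body}",
            # Provenance travels with the document so agents can cite it and
            # retrievers can boost or filter on reliability later.
            "metadata": {
                "source_url": r.url,
                "owner": r.owner,
                "reliability": r.reliability,
                "tags": list(r.tags),
            },
        })
    return documents


records = [
    ExportedAnswer(101, "Rotate staging DB credentials", "Run the rotation job, then restart the API pods.",
                   ("database", "oncall"), "team-platform", 0.92, "https://so-internal.example.com/q/101"),
    ExportedAnswer(102, "Legacy deploy notes", "Use the old deploy script.",
                   ("deploy",), None, 0.95, "https://so-internal.example.com/q/102"),
    ExportedAnswer(103, "Flaky CI fix", "Retry the job.",
                   ("ci",), "team-ci", 0.41, "https://so-internal.example.com/q/103"),
]
print([d["id"] for d in gate_for_retrieval(records)])  # ['so-internal-101']
```

Note that record 102 is excluded despite its high score because it has no owner; gating on ownership as well as reliability keeps orphaned content out of agent answers.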

Knowledge ingestion targets a common blocker: scattered institutional memory across wikis, Slack, tickets, and email. The product supports seeding historical content, validating quality, and publishing to a consistent schema so that AI assistants have one source to ground against. Bi-directional flows let agents suggest updates, but changes still route through human review—critical to prevent model-amplified misinformation.
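The "consistent schema plus validation" step can be sketched as a normalizer that maps each source's fields onto one record type and flags quality problems instead of silently publishing. The source field names in `FIELD_MAPS`, the 20-word minimum, and the 365-day staleness window are all hypothetical placeholders, not part of the product; the point is the shape of the pipeline.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional

# HYPOTHETICAL field mappings from each source's export format to one common schema.
FIELD_MAPS = {
    "wiki": {"id": "page_id", "title": "title", "body": "content",
             "owner": "space_owner", "updated": "last_modified"},
    "slack": {"id": "thread_ts", "title": "first_line", "body": "thread_text",
              "owner": "author", "updated": "last_reply_at"},
}


@dataclass
class Candidate:
    source: str
    source_id: str
    title: str
    body: str
    owner: Optional[str]
    last_updated: datetime
    problems: list = field(default_factory=list)


def normalize(source: str, raw: dict) -> Candidate:
    """Map a raw record from a known source into the common candidate schema."""
    m = FIELD_MAPS[source]
    updated = datetime.fromisoformat(raw[m["updated"]])
    if updated.tzinfo is None:
        updated = updated.replace(tzinfo=timezone.utc)  # assume naive timestamps are UTC
    return Candidate(
        source=source,
        source_id=str(raw[m["id"]]),
        title=raw[m["title"]].strip(),
        body=raw[m["body"]].strip(),
        owner=raw.get(m["owner"]) or None,  # empty strings count as "no owner"
        last_updated=updated,
    )


def validate(c: Candidate, min_words: int = 20, max_age_days: int = 365,
             now: Optional[datetime] = None) -> Candidate:
    """Record quality problems; only candidates with none are ready to publish."""
    now = now or datetime.now(timezone.utc)
    if not c.owner:
        c.problems.append("no owner")
    if len(c.body.split()) < min_words:
        c.problems.append("body too short")
    if now - c.last_updated > timedelta(days=max_age_days):
        c.problems.append("stale")
    return c
```

Candidates with an empty `problems` list can move on to publishing; everything else goes to an editor rather than into the retrieval index. Tune the thresholds per domain.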

Market Context: How It Compares

Stack Overflow Internal sits between legacy knowledge tools and AI infrastructure. Compared with Confluence, Notion, or SharePoint, the differentiation is AI-first design: citation-forward retrieval, reliability gating, and an open-protocol (MCP) bridge for agents. Versus enterprise search (Elasticsearch, Algolia, Glean), Stack Overflow emphasizes curated, answer-shaped content and developer workflow integrations (IDEs, chat, agents) over broad document search.

It’s complementary to model providers (OpenAI, Anthropic) and Microsoft’s Copilot ecosystem, supplying the “ground truth” that makes assistants useful inside enterprises. Emerging agent platforms like Moveworks look more like distribution partners than direct substitutes. The risk for buyers is not capability overlap—it’s integration sprawl across wikis, search, and assistants if consolidation isn’t intentional.

Governance, Risks, and the Real Work

- Knowledge quality debt: Ingesting noisy Slack threads or stale wikis will degrade agent accuracy. Use reliability scoring and editorial workflows before allowing content into retrieval indexes.

- Agent write-back risk: Bi-directional updates are powerful but dangerous. Keep human-in-the-loop approvals, require citations, and log diffs for audit.

- Tool overlap and lock-in: If Confluence/SharePoint remain active sources, mandate a source-of-truth policy. Favor open protocols (MCP) and exports to avoid hard lock-in.

- Compliance posture: On-prem or private VPC for the MCP server mitigates data egress; confirm audit logging, RBAC scope, and data retention against internal policies.

- Client support reality: MCP is gaining traction, but support varies. Validate integrations with the exact agent clients your teams use.
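The write-back controls above (human approval, required citations, logged diffs) can be sketched as a small review queue. Everything here (`SuggestedEdit`, `EditQueue`, the status values) is hypothetical and illustrative, not a Stack Overflow Internal API; the diff uses Python's standard `difflib.unified_diff`.

```python
import difflib
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class SuggestedEdit:
    # HYPOTHETICAL record for an agent-proposed change to an existing answer.
    answer_id: int
    agent: str
    old_body: str
    new_body: str
    citations: list
    status: Status = Status.PENDING


@dataclass
class EditQueue:
    pending: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def _log(self, event: str, edit: SuggestedEdit, **extra) -> None:
        self.audit_log.append({"at": datetime.now(timezone.utc).isoformat(),
                               "event": event, "answer_id": edit.answer_id, **extra})

    def submit(self, edit: SuggestedEdit) -> str:
        """Queue an agent edit for human review and log its diff; uncited edits are refused."""
        if not edit.citations:
            raise ValueError("agent edits must cite at least one source")
        diff = "\n".join(difflib.unified_diff(
            edit.old_body.splitlines(), edit.new_body.splitlines(),
            fromfile=f"answer-{edit.answer_id}", tofile=f"answer-{edit.answer_id} (proposed)",
            lineterm="",
        ))
        self.pending.append(edit)
        self._log("submitted", edit, agent=edit.agent, diff=diff, citations=list(edit.citations))
        return diff

    def review(self, edit: SuggestedEdit, reviewer: str, approve: bool) -> str:
        """Apply a human decision; returns the body that should be live afterwards."""
        if not any(e is edit for e in self.pending):
            raise ValueError("edit is not pending in this queue")
        if reviewer == edit.agent:
            raise PermissionError("the proposing agent cannot approve its own edit")
        self.pending = [e for e in self.pending if e is not edit]
        edit.status = Status.APPROVED if approve else Status.REJECTED
        self._log(edit.status.value, edit, reviewer=reviewer)
        return edit.new_body if approve else edit.old_body
```

Storing the unified diff in the audit entry, rather than only the new text, makes it straightforward to answer "what exactly did the agent change, and who approved it" during an audit.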

Operator’s Playbook: How to Pilot

- Start narrow: Pick one high-cost domain (e.g., on-call runbooks or CI/CD failures) and seed Stack Overflow Internal with curated, owner-verified content.

- Turn on reliability gates: Require citations in agent responses; only surface content above a reliability threshold and with active owners.

- Integrate via MCP: Connect the MCP server to your primary assistant (Copilot, ChatGPT, or internal agent). Keep the server inside your network first.

- Instrument outcomes: Track answer accuracy, resolution time, ticket deflection, and escalation rate. Compare against a control group over 60-90 days.

- Design the write-back workflow: Allow agent-suggested edits, but route through code-review-like approvals with SLAs and audit logs.

- Plan consolidation: Decide what lives in Stack Overflow Internal versus Confluence/SharePoint; publish a migration and deprecation plan to avoid duplication.

- Stress-test exports: Validate that metadata exports feed your retrieval stack (vector DB, feature store) without schema drift or permission leaks.
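For the "instrument outcomes" step, a pilot-versus-control comparison needs little more than per-ticket flags. The sketch below assumes a hypothetical `Ticket` record joined from your ticketing system and agent logs; the field names and definitions (for example, counting "resolved without human handoff" as deflection) are assumptions to adapt to your own data.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Ticket:
    # HYPOTHETICAL per-ticket record joined from ticketing and agent logs.
    resolved_by_agent: bool          # closed without a human handoff (deflected)
    escalated: bool                  # escalated to a senior engineer or another team
    answer_correct: Optional[bool]   # from reviewer spot checks; None if not sampled
    minutes_to_resolve: float


def summarize(tickets: list) -> dict:
    """Compute the pilot metrics for one group of tickets."""
    if not tickets:
        raise ValueError("need at least one ticket")
    n = len(tickets)
    graded = [t.answer_correct for t in tickets if t.answer_correct is not None]
    return {
        "tickets": n,
        "deflection_rate": sum(t.resolved_by_agent for t in tickets) / n,
        "escalation_rate": sum(t.escalated for t in tickets) / n,
        "accuracy": sum(graded) / len(graded) if graded else None,
        "median_minutes": median(t.minutes_to_resolve for t in tickets),
    }


def compare(pilot: list, control: list) -> dict:
    """Pilot minus control; lower is better for escalation rate and resolution time."""
    p, c = summarize(pilot), summarize(control)
    return {k: p[k] - c[k] for k in ("deflection_rate", "escalation_rate", "median_minutes")}
```

These are point estimates only. Over a 60-90 day pilot with modest ticket volumes, add a significance test (for example, a two-proportion test on escalation rates) before reading much into small differences.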

Bottom Line

Stack Overflow is no longer just a public Q&A site; it’s positioning as an enterprise knowledge layer for AI assistants. If you’re serious about grounding copilots with cited, auditable answers, this is worth a pilot—especially if you require on-prem control and open-protocol connectivity. Just don’t skip the editorial and consolidation work; the technology will only be as accurate as the knowledge and governance you put behind it.
