Therapists’ Hidden ChatGPT Use: A Trust, Compliance, and Brand Risk for Healthcare

A reported wave of therapists quietly consulting ChatGPT during sessions, sometimes live on-screen, has turned AI hype into a real compliance crisis. Beyond the ethical concerns, undisclosed AI use can breach privacy rules, professional standards, and emerging state laws. Providers and telehealth platforms that act now can turn this risk into a trust advantage; those that don’t face regulatory scrutiny, lawsuits, and patient churn.

Executive Summary

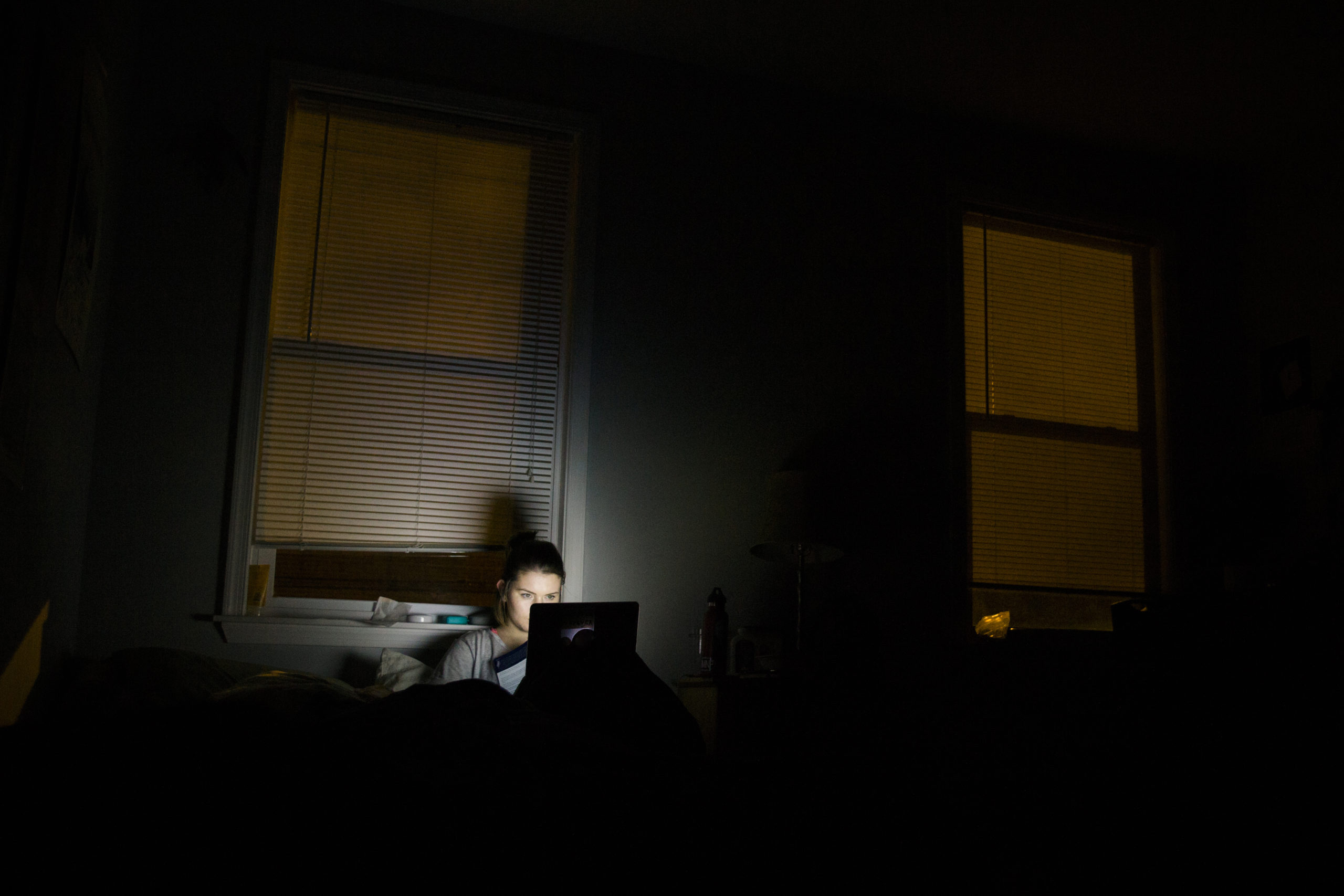

- Trust is the new moat: Undisclosed AI use in therapy undermines the therapeutic alliance and invites complaints to licensing boards, malpractice claims, and reputational damage.

- Regulatory momentum is real: Professional bodies caution against using AI for diagnosis, and states such as Nevada and Illinois already restrict AI in therapeutic decision-making, with more likely to follow.

- Governance is differentiating: Clear disclosure, consent, and HIPAA-aligned AI workflows can become a marketable quality signal and payer-friendly compliance asset.

Market Context: The Competitive Landscape Is Shifting to “Transparent AI Care”

The line between documentation assistants and clinical decision-making tools is blurring. General-purpose models (e.g., consumer chatbots) are being used informally to draft notes and even suggest replies, without disclosure, creating “shadow AI” inside care delivery. Professional associations advise against AI-based diagnosis, and new state laws prohibit AI in therapeutic decision-making. Expect payers and malpractice insurers to ask for proof of AI governance. Telehealth brands, EHR-integrated AI scribes, and group practices that operationalize compliant, transparent AI will set the standard and capture trust-sensitive demand.

Opportunity Analysis: From Liability to Leadership

Used responsibly, vetted AI can streamline documentation and deliver standardized, manualized support (e.g., CBT content) when explicitly designed and clinically validated for that purpose. The opportunity is to formalize “AI-with-consent” models: disclose tools, limit use to documentation or patient education, ensure no PHI flows to consumer systems, and maintain human-in-the-loop care. Providers that codify this now can negotiate better payer terms, meet insurer expectations, and market a patient “AI Bill of Rights” (transparency, opt-out, human override) as a brand differentiator.
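
For practices that build their own tooling, the “AI-with-consent” model can be expressed as a pre-flight check that runs before any request reaches an AI tool. The Python sketch below is illustrative only: the tool names, purpose labels, and fields (`has_baa`, `approved`, `patient_consented`, and so on) are hypothetical, and a real deployment would draw them from the practice’s own policy, vendor contracts, and consent records rather than hard-coded values.

```python
from dataclasses import dataclass

# Hypothetical allowlist of purposes; diagnosis and treatment decisions are deliberately absent.
ALLOWED_PURPOSES = {"documentation", "patient_education"}


@dataclass(frozen=True)
class AITool:
    name: str
    has_baa: bool   # assumed flag: a signed business associate agreement is on file
    approved: bool  # assumed flag: cleared by the practice's AI governance review


@dataclass(frozen=True)
class AIRequest:
    tool: AITool
    purpose: str
    contains_phi: bool
    patient_consented: bool
    clinician_reviews_output: bool


def check_request(req: AIRequest) -> list[str]:
    """Return policy violations; an empty list means the request may proceed."""
    problems = []
    if not req.tool.approved:
        problems.append(f"{req.tool.name} is not an approved tool")
    if req.purpose not in ALLOWED_PURPOSES:
        problems.append(f"purpose '{req.purpose}' is outside documentation/patient education")
    if req.contains_phi and not req.tool.has_baa:
        problems.append("PHI would flow to a tool without a BAA")
    if not req.patient_consented:
        problems.append("no documented patient consent for AI use")
    if not req.clinician_reviews_output:
        problems.append("no human-in-the-loop review of AI output")
    return problems


# Hypothetical usage: a sanctioned EHR scribe versus an unvetted consumer chatbot.
scribe = AITool("ehr-scribe", has_baa=True, approved=True)
chatbot = AITool("consumer-chatbot", has_baa=False, approved=False)

ok = AIRequest(scribe, "documentation", contains_phi=True,
               patient_consented=True, clinician_reviews_output=True)
bad = AIRequest(chatbot, "diagnosis", contains_phi=True,
                patient_consented=False, clinician_reviews_output=False)

print(check_request(ok))        # []
print(len(check_request(bad)))  # 5
```

Returning every violation, rather than stopping at the first, gives compliance staff a complete picture of why a request was blocked.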

Action Items: Immediate Steps for Strategic Advantage

- Freeze the use of consumer AI tools with PHI until approved alternatives are in place: disable personal accounts, block unvetted apps, and route clinicians to sanctioned solutions.

- Create an AI inventory and risk register: document who uses what, for which purpose (documentation vs decision support), data flows, and model providers.

- Adopt a disclosure and consent policy: update informed consent forms, patient-facing FAQs, and session disclosures that specify if/when AI tools are used.

- Choose HIPAA-aligned, enterprise-grade tools: require business associate agreements (BAAs), data segregation, no training on client data, configurable retention, and audit logs (seek SOC 2/HITRUST/ISO 27001).

- Define red lines: prohibit AI for diagnosis or treatment decisions unless the tool is purpose-built, clinically validated, and permitted under state law.

- Integrate with the EHR: prefer embedded AI scribes used only for their intended documentation purpose, minimizing copy/paste and data-leakage risks.

- Train clinicians: prompt hygiene, PHI minimization, recognizing hallucinations, and documenting human oversight in the record.

- Stand up governance: cross-functional AI committee (clinical, compliance, legal, security) to review tools, monitor drift, and handle incidents.

- Prepare for audits: maintain vendor due diligence files, DPIAs/PIAs, model usage logs, and patient consent records; align with payer/insurer requirements.

- Proactively communicate: publish an “AI in Care” statement and offer patient opt-outs, turning transparency into a competitive trust signal.
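
The inventory, vendor, and red-line items above lend themselves to a simple risk register. The Python sketch below shows one hypothetical way to record each tool and flag open findings for the governance committee; the field names, purpose labels, and worst-case defaults (for example, assuming a vendor trains on client data until a contract says otherwise) are illustrative assumptions, not a regulatory checklist, and they do not determine what HIPAA or any state law actually requires.

```python
from dataclasses import dataclass


@dataclass
class InventoryEntry:
    """One row of a hypothetical AI inventory / risk register."""
    tool: str
    provider: str
    users: list[str]
    purpose: str  # assumed labels: "documentation", "patient_education", "decision_support"
    handles_phi: bool
    # Worst-case defaults (an assumption): risky until due diligence proves otherwise.
    has_baa: bool = False
    trains_on_client_data: bool = True
    audit_logs: bool = False
    clinically_validated: bool = False
    permitted_by_state_law: bool = False


def risk_findings(entry: InventoryEntry) -> list[str]:
    """List open findings for one tool; an empty list means nothing outstanding."""
    findings = []
    if entry.handles_phi:
        if not entry.has_baa:
            findings.append("PHI without a BAA")
        if entry.trains_on_client_data:
            findings.append("vendor may train on client data")
        if not entry.audit_logs:
            findings.append("no audit logs")
    # Red line: decision support needs both clinical validation and legal clearance.
    if entry.purpose == "decision_support" and not (
        entry.clinically_validated and entry.permitted_by_state_law
    ):
        findings.append("red line: decision support without validation and legal clearance")
    return findings


def build_register(entries: list[InventoryEntry]) -> dict[str, list[str]]:
    """Map each tool name to its open findings so reviews can be prioritized."""
    return {entry.tool: risk_findings(entry) for entry in entries}


# Hypothetical entries: a vetted EHR scribe and a consumer chatbot in "shadow AI" use.
register = build_register([
    InventoryEntry("ehr-scribe", "vendor-a", ["all-clinicians"], "documentation",
                   handles_phi=True, has_baa=True, trains_on_client_data=False,
                   audit_logs=True),
    InventoryEntry("consumer-chatbot", "vendor-b", ["clinician-17"], "decision_support",
                   handles_phi=True),
])

for tool, findings in register.items():
    print(tool, findings or "no open findings")
```

Defaulting every safeguard to “missing” means a newly discovered tool shows up with a full list of findings until someone documents otherwise, which keeps the register conservative.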

Bottom line: Secret AI in therapy is a reputational time bomb. Make transparent, compliant AI part of your care model now, or risk losing the confidence of patients, payers, and regulators later.
